Security experts have long opined that one way to make software more secure is to hold software makers liable for vulnerabilities in their products. This idea is often dismissed as unrealistic and one that would stifle innovation in an industry that has been a major driver of commercial growth and productivity over the years. But a new study released this week presents perhaps the clearest economic case yet for compelling companies to pay for information about security vulnerabilities in their products.

Before I delve into this modest proposal, let’s postulate a few assumptions that hopefully aren’t terribly divisive:

- Modern societies are becoming increasingly dependent on software and computer programs.

- After decades of designing software, human beings still build imperfect, buggy, and insecure programs.

- Estimates of the global damage from cybercrime range from the low billions to hundreds of billions of dollars annually.

- The market for finding, stockpiling and hoarding (keeping secret) software flaws is expanding rapidly.

- Vendor-driven “bug bounty” programs which reward researchers for reporting and coordinating the patching of flaws are expanding, but currently do not offer anywhere near the prices offered in the underground or by private buyers.

- Software insecurity is a “negative externality”: like environmental pollution, vulnerabilities in software impose costs on users and on society as a whole, while software vendors internalize profits and externalize costs. Thus, absent any demand from their shareholders or customers, profit-driven businesses tend not to invest in eliminating negative externalities.

Earlier this month, I published a piece called How Many Zero-Days Hit You Today, which examined a study by vulnerability researcher Stefan Frei about the bustling market for “zero-day” flaws — security holes in software that not even the makers of those products know about. These vulnerabilities — particularly zero-days found in widely-used software like Flash and Java — are extremely valuable because attackers can use them to slip past security defenses unnoticed.

Frei’s analysis conservatively estimated that private companies which purchase software vulnerabilities for use by nation states and other practitioners of cyber espionage provide access to at least 85 zero-day exploits on any given day of the year. That estimate doesn’t even consider the number of zero-day bugs that may be sold or traded each day in the cybercrime underground.

At the end of that post, I asked readers whether it was possible and/or desirable to create a truly global, independent bug bounty program that would help level the playing field in favor of the defenders and independent security researchers. Frei’s latest paper outlines one possible answer.

BUYING ALL BUGS AT ABOVE BLACK-MARKET PRICES

Frei proposes creating a multi-tiered, “international vulnerability purchase program” (IVPP), in which the major software vendors would be induced to purchase all of the available and known vulnerabilities at prices well above what even the black market is willing to pay for them. But more on that in a bit.

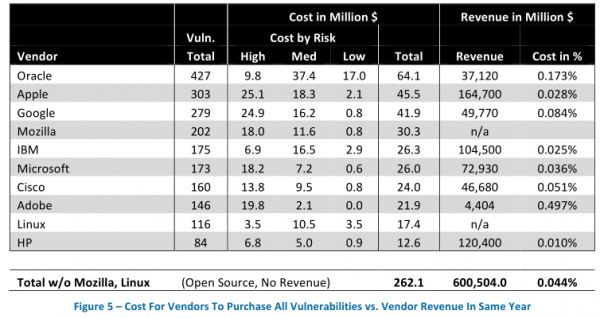

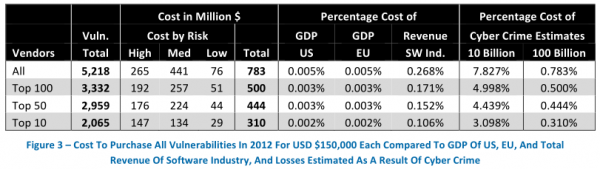

The director of research for Austin, Texas-based NSS Labs, Frei examined all of the software vulnerabilities reported in 2012, and found that the top 10 software makers were responsible for more than 30 percent of all flaws fixed. Frei estimates that if these vendors were to have purchased information on all of those flaws at a steep price of $150,000 per vulnerability — an amount that is well above what cybercriminals or vulnerability brokers typically offer for such bugs — this would still come to less than one percent of the annual revenues for these software firms.

Frei points out that the cost of purchasing all vulnerabilities for all products would be considerably lower than the savings from the expected reduction in cybercrime losses, even under the conservative assumption that those losses would fall by only 10 percent.
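Frei's cost-benefit argument boils down to simple arithmetic. The sketch below is a hypothetical illustration, not a model taken from the paper: it assumes a flat price per bug and treats the 10 percent figure as a straight cut in annual cybercrime losses, then asks how large those losses must be for the cut to cover the purchase bill. The function names are invented for this example.

```python
def purchase_cost(vulns: int, price_per_vuln: int) -> int:
    """Total cost of buying every reported flaw at one flat price (an assumption)."""
    return vulns * price_per_vuln


def break_even_losses(cost: int, reduction_pct: int = 10) -> float:
    """Annual cybercrime losses at which a `reduction_pct` percent cut
    in those losses exactly pays for the purchase program."""
    return cost * 100 / reduction_pct


def program_pays_off(cost: int, annual_losses: int, reduction_pct: int = 10) -> bool:
    """True if the avoided losses are at least as large as the purchase cost."""
    return annual_losses * reduction_pct / 100 >= cost


# Illustration: the article's $150,000 per-bug price and Oracle's 427 fixes in 2012.
cost = purchase_cost(427, 150_000)
print(cost)                     # 64050000
print(break_even_losses(cost))  # 640500000.0
```

Put another way, with a 10 percent reduction the program pays for itself once the losses it addresses exceed ten times the purchase bill.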

In the above chart, for example, we can see that Oracle, the software vendor responsible for Java and a whole heap of database software code found in thousands of organizations, fixed more than 427 vulnerabilities last year. It also brought in more than $37 billion in revenues that year. If Oracle were to pay researchers top dollar ($150,000) for each vulnerability, the total (about $67 million) would still come to less than two-tenths of one percent of the company’s annual revenues.
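The Oracle figure is easy to check. A minimal sketch, treating "more than 427" as a floor and using the article's rounded $37 billion revenue: 427 bugs at $150,000 comes to about $64 million, while the $67 million quoted would correspond to roughly 447 bugs at that price. Either way the bill lands under 0.2 percent of revenue. The constant and function names are illustrative only.

```python
def revenue_share(bill: int, annual_revenue: int) -> float:
    """Fraction of annual revenue consumed by a vulnerability-purchase bill."""
    return bill / annual_revenue


ORACLE_REVENUE = 37_000_000_000  # article's rounded figure ("more than $37 billion")
PRICE_PER_BUG = 150_000          # the article's "top dollar" price per vulnerability

# Compare the bill at exactly 427 bugs with the $67 million figure in the text.
for bill in (427 * PRICE_PER_BUG, 67_000_000):
    share = revenue_share(bill, ORACLE_REVENUE)
    print(f"${bill:,} -> {share:.3%} of revenue")
# $64,050,000 -> 0.173% of revenue
# $67,000,000 -> 0.181% of revenue
```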

Frei posits that if vendors were required to internalize the cost of such a program, they would likely be far more motivated to review and/or enhance the security of their software development processes.

Likewise, Frei said, such a lucrative bug bounty system would virtually ensure that every release of commercial software products would be scrutinized by legions of security experts.

“In the short term, it would hit the vendors very badly,” Frei said in a phone interview with KrebsOnSecurity. “But in the long term, this would produce much more secure software.”

“When you look at new innovations like cars, airplanes and electricity, we see that security and reliability was enhanced tremendously with each as soon as there was independent testing,” said Frei, an experienced helicopter pilot. “I was recently reading a book about the history of aviation, and [it noted that in] the first iteration of the NTSB [National Transportation Safety Board] it was explicitly stated that when they investigate an accident, if they could not find a mechanical failure, they blamed the pilot. This is what we do now with software: We blame the user. We say, you should have installed antivirus, or done this and that.”

HOW WOULD IT WORK?

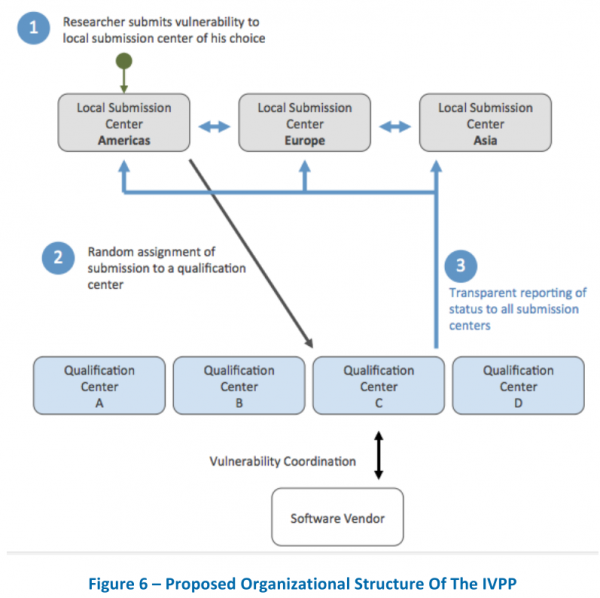

In my challenge to readers, I asked for thoughts on how a global bug bounty program might maintain its independence and not be overly influenced by one national government or another. To combat this potential threat, Frei suggests creating a multi-tiered organization that would consist of several regional or local vulnerability submission centers — perhaps one for the Americas, another for Europe, and a third for Asia.

Those submission centers would then contract with “technical qualification centers” (the second tier) to qualify the submissions, and to work with researchers and the affected software vendors.

“Most critical is that the IVPP employs an organizational structure with multiple entities at each tier,” wrote Frei and fellow NSS researcher Francisco Artes. “This will ensure the automatic and consistent sharing of all relevant process information with all local submission centers, thus guaranteeing that the IVPP operates independently and is trustworthy.”

According to Frei and Artes, this structure would allow researchers to check the status of a submission with any submission center, and would allow each submission center to verify that it possesses all information — including submissions from other centers.

“Because the IVPP would be handling highly sensitive information, checks and balances are critical,” the two wrote. “They would make it difficult for any party to circumvent the published policy of vulnerability handling. A multi-tiered structure prevents any part of the organization, or legal entity within which it is operating, from monopolizing the process or the information being analyzed. Governments could still share vulnerabilities with their agencies, but they would no longer have exclusive access to this information and for extended periods of time.”
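The paper describes this structure in prose. As a rough, hypothetical sketch of the "automatic and consistent sharing" idea (the class and function names are invented for illustration and do not come from Frei and Artes), each regional submission center can hold a full replica of every submission, a researcher can query any center for status, and the centers can confirm they hold identical records by comparing a hash of their contents.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class SubmissionCenter:
    """One regional center holding a full replica of all submissions (hypothetical)."""
    region: str
    records: dict[str, str] = field(default_factory=dict)  # submission id -> status

    def store(self, sub_id: str, status: str) -> None:
        self.records[sub_id] = status

    def digest(self) -> str:
        """SHA-256 over every (id, status) pair in sorted order, so replicas can be compared."""
        h = hashlib.sha256()
        for sub_id in sorted(self.records):
            h.update(f"{sub_id}={self.records[sub_id]}\n".encode())
        return h.hexdigest()


def submit(centers: list[SubmissionCenter], sub_id: str, status: str = "received") -> None:
    """Record a submission (or status change) at every center, not only the one it arrived at."""
    for center in centers:
        center.store(sub_id, status)


def replicas_agree(centers: list[SubmissionCenter]) -> bool:
    """True if every center holds exactly the same submissions and statuses."""
    return len({c.digest() for c in centers}) == 1


centers = [SubmissionCenter("Americas"), SubmissionCenter("Europe"), SubmissionCenter("Asia")]
submit(centers, "VULN-0001")
print(centers[2].records["VULN-0001"])     # received (queried at a different center)
print(replicas_agree(centers))             # True
centers[1].store("VULN-0002", "received")  # one center fails to share a submission
print(replicas_agree(centers))             # False
```

The sketch models sharing as writing to every center at once; a real deployment would need a replication protocol that tolerates outages and dishonest participants, which is exactly the kind of check the digest comparison is meant to support.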

IS IT A GOOD IDEA?

Frei’s elaborate system is well thought-out, but it glosses over the most important catalyst: The need for government intervention. While indeed an increasing number of software and Internet companies have begun offering bug bounties (Google and Mozilla have for some time, and Microsoft began offering a limited bounty earlier this year), few of them pay anywhere near what private vulnerability brokers can offer, and would be unlikely to up the ante much absent a legal requirement to do so.

Robert Graham, CEO of Errata Security, said he strongly supports the idea of companies offering bug bounties, so long as they’re not forced to do so by government fiat.

“The amount we’re losing from malicious hacking is a lot less than what we gain from the free and open nature of Internet,” Graham said. “And that includes the ability of companies to quickly evolve their products because they don’t have to second-guess every decision just so they can make things more secure.”

Graham said he takes issue with the notion that most of the losses from criminal hacking and cybercrime are the direct result of insecure software. On the contrary, he said, most of the attacks that result in costly data breaches for companies these days stem from poorly secured Web applications. He pointed to Verizon’s annual Data Breach Investigations Report, which demonstrates year after year that most data breaches stem not from software vulnerabilities, but rather from a combination of factors including weak or stolen credentials, social engineering, and poorly configured servers and Web applications.

“Commercial software is a tiny part of the whole vulnerability problem,” Graham said.

Graham acknowledged that the mere threat of governments imposing some kind of requirement is often enough to induce businesses and entire industries to self-regulate and take affirmative steps to avoid getting tangled in more bureaucratic red tape. And he said if ideas like Frei’s prompt more companies to offer bug bounties on their own, that’s ultimately a good thing, noting that he often steers clients toward software vendors that offer bug bounties.

“Software that [is backed by a bug] bounty you can trust more, because it’s more likely that people are looking for and fixing vulnerabilities in the code,” Graham said. “So, it’s a good thing if customers demand it, but not such a good thing if governments were to impose it.”

Where do you come down on this topic, dear readers? Feel free to sound off in the comments below. A copy of Frei’s full paper is available here (PDF).

Excellent idea, but I guess no matter how much you raise the price of bugs, people in the underground will always pay more… This is driven by the millions they’re making out of it…

At 150k per vulnerability the problem then becomes finding anyone willing to perform penetration tests of any value. All the good pentesters will be off getting rich cashing in on the gold rush of vulnerabilities. Pentesting companies would be unable to keep testers on staff on a salary that is a fraction of what they can make finding a single bug in any commercial software ever made. Even standard programmers with a basic understanding of secure coding practices would be tempted to quit their jobs and go bug hunting instead. You’d have to make pentesting companies financially liable for missed vulnerabilities, or they (or their employees) would be tempted to charge for assessments and then turn around and submit found but unreported bugs for big paydays.

I believe the biggest issue that needs to be addressed is the price difference. Closing or matching the gap of what’s being offered on the black market is essential if these larger companies really want to tackle this.

I recall the man who figured out how to gain access to any Facebook account and was awarded a mere $20,000: http://www.bbc.co.uk/news/technology-23097404

You’ll always have people who will find bugs and for ethical reasons not deal with any underground markets but it’s those people who you ultimately want to attract.

Only by pulling away members from that demographic can you hope to change the status quo.

While I support the overall idea, the revenue for HP in the tables is the overall revenue of the corporation, including MSP, printers, PCs, servers and such. HP Software’s revenue is more in the area of 28Bn rather than the quoted 120Bn. See http://www.forbes.com/sites/greatspeculations/2013/11/25/hp-earnings-preview-decline-in-revenue-is-likely-abating/

Good catch, and I’m guessing that mistake has been made in all those figures for the other companies as well.

I was thinking the same thing. For example, Java is Oracle-owned and freely distributed, yet it accounts for the bulk of Oracle’s vulnerability costs.

If Oracle were forced to pay for Java vulnerabilities they might just stop supporting Java and hold on to any patents. (Most security professionals would probably open a bottle of nice scotch, but realistically that would be an unfortunate loss for people who actually do stuff with computers…)

I think the answer is indeed a central vulnerability repository where people are paid for software vulnerabilities, and I think pentesters should be able to purchase exploits there too; however, those vulnerabilities should be disclosed freely to the impacted vendors. This would be the ideal balance between full and responsible disclosure, because it allows researchers to earn a legitimate income while providing a public service, and it allows vendors to respond.

Asking vendors to pay black-market prices is a bit knee-jerk… How much does a good security researcher need to make a year off bug bounties to be willing to do that instead of some other job? 100k? 200k? I’m sure if you ask them they won’t complain if you give them 65 million, but didn’t the creator of the Blackhole exploit kit only make 500k a year? And isn’t he in jail now? A fifth of the income and being on the right side of the law sounds like a pretty fair deal for most people…

After thinking about this some more, the best bet might be an exploit auction house that collects weaponized exploits, discloses them to the vendor immediately, and begins selling them at a flat rate to pentesters, security vendors and nation states for $500 – $1500 USD depending on their effectiveness. The proceeds (after a small percentage) would go to the first researcher to report/provide the exploit.

If you consider that there are at least 180 countries in the world and 3/4 of those have or will have an offensive cyber security program, a researcher could make $67,000 – $202,000 on purchases from nation states alone.
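The figure above is a straight multiplication. A quick check using only the commenter's own assumptions (180 countries, three-quarters of them buying, $500 to $1,500 per exploit, before the auction house's unspecified cut); the function name is illustrative:

```python
def nation_state_gross(countries: int = 180, buying_fraction: float = 0.75,
                       low_price: int = 500, high_price: int = 1_500) -> tuple[int, int]:
    """Gross revenue range from selling one exploit once to each buying country,
    before the auction house takes its cut."""
    buyers = round(countries * buying_fraction)  # 135 buyers under the default assumptions
    return buyers * low_price, buyers * high_price


print(nation_state_gross())  # (67500, 202500)
```

That works out to $67,500 to $202,500, which the comment rounds down.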

You may ask why a researcher would go this road instead of the black market… but I think the clever researcher might just sell to both 😉 Also, over time this makes exploits an open-market commodity, and the black market would be forced to adjust prices down to compete…

Consider the price of a good pentest: how many pentesters would be willing to just fold the cost of a few zero-days into the price of their pentest services?

The best part about this vs a government led approach is that when the market will no longer support the researchers this might just fade away… unlike some crazy tax that will eventually outlive its usefulness.

The only downside I see is that it makes companies very conservative in their release cycles. If you can’t release until every bug is fixed, well, that would require being able to mathematically prove the software is free of bugs. Which is an O(log(infinity)) process.

There are three kinds of security bugs:

1) known security flaws which the company intentionally ignores to meet an arbitrary release date,

2) flaws which should have been uncovered by exhaustive testing, and

3) flaws which were totally unexpected.

#1 is fraud; companies should be hammered for these. #2 is negligence; companies should be lightly slapped and required to update their testing procedures ASAP. #3 might be something completely new; companies might be given a pass on these as long as they fix them ASAP.

An independent arbitration body, e.g. IEEE, should devise the rules for the three categories.

Also, who pays for security vulnerabilities in open source software? Will you have to be a licensed developer in order to release any software?

Open source software is free, so there is no implied warranty; caveat emptor and all that.

However, if a company bundles a flavor of Linux in a retail product, e.g. a smartphone, that company assumes liability and must test appropriately.

Honestly, I think that it is a matter of awareness and values. If people don’t mind (yes, many people don’t care at all) their own government breaking software as a shortcut to spy on their enemies (even assuming that they are being honest…), it is pointless to pursue mechanisms to make software more secure. So I find this proposal useless. Just keep on reporting, Krebs… This is the only thing that can make people aware of the fact that if the government has such resources, underground criminals usually end up having twice as much. It seems obvious to me, but apparently many people don’t get it. Good software design is possible if the people behind it have good values, but one has the impression that good projects are systematically sabotaged in one way or another (see Firefox, MacOSX, OpenBSD, Ubuntu for a few case studies).

Defective products in every other industry are recalled and the manufacturer held liable.

Is software like a book or a toaster? Can toasters be sold with a shrink-wrap EULA that says there is no liability, and requires arbitration?

Simply make companies liable, and they can decide what combination – better tools, people, or bounties will work.

Note that all the companies have very smart people. Will employees get even one more penny in the paycheck if they find a serious vulnerability? What are the incentives?

One thing that could also occur would be the old ‘Write A Mini Van’ problem from a Dilbert strip some years ago: http://dilbert.com/strips/comic/1995-11-13

The flip side to this is that you’ll see fewer commercial software houses, longer release cycles, and developers that are reluctant to add new features. There is no good answer here. If the bugs have value, a bounty will only help drive up the black market cost. The big software houses have the resources, now, to hire the best – and they’re still putting out bug filled software. At $150,000 per bug, how many developers would quit their day jobs at the big software houses and just focus on bug discovery?

I hope some of you taking the time to comment will read the research paper linked at the bottom of this story. Frei makes a decent point in response to the comment you offer here, Andy, noting that there is an upper limit on how much traditional cybercrooks are willing to pay to buy zero-days. From the paper:

“Some argue that with an IVPP offering competitive rewards for vulnerabilities, cyber criminals will raise the price that they offer for vulnerabilities. However, cyber criminals cannot afford to offer more for vulnerabilities than the return they expect from their investment. The IVPP on the other hand cannot offer more than the expected reduction of the losses.

The huge collateral losses that are induced by cyber crime far exceed the criminal’s return, typically by orders of magnitude. An IVPP therefore could systematically outbid cyber criminals and still be economically sound because the cost of the losses that are prevented would outweigh the return for cyber criminals.”
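The quoted argument compares two ceilings: a rational criminal will pay no more than the return expected from an exploit, while the IVPP can pay up to the losses that buying the bug prevents. A minimal sketch of that comparison (the function name and the sample numbers are hypothetical, not figures from the paper):

```python
from typing import Optional


def outbid_window(criminal_return: float,
                  prevented_losses: float) -> Optional[tuple[float, float]]:
    """Range of offers that beat a rational criminal buyer without costing the
    IVPP more than the losses it prevents: any offer above the lower bound and
    no higher than the upper bound works. None means the IVPP cannot win."""
    if prevented_losses <= criminal_return:
        return None
    return (criminal_return, prevented_losses)


# Hypothetical numbers: collateral losses two orders of magnitude above the criminal's take.
print(outbid_window(50_000, 5_000_000))  # (50000, 5000000)
print(outbid_window(50_000, 40_000))     # None
```

If collateral losses really exceed the criminal's return by orders of magnitude, the window is wide; it only closes if the avoided losses cannot actually be tapped to fund the offers.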

I would argue the problem here is the calculation of the “overall cost to society”. Compare it to something like the cap & trade system. It would take quite a significant government intervention to charge that full cost of losses to the source. Just because the “collateral losses” are large, doesn’t mean that they can all be borne by this theoretical program to drive up the value of the bounties.

(different andy 🙂 )

The part of Frei’s position that scares me the most is the idea that gov’t should be involved in the software bug business and he laid out a possible solution that involves taxing software sales to pay for the IVPP. This is not a gov’t problem. In fact, if you look at the U.S. gov’t you’ll see plenty of examples of how bad it is at the IT game – the only exception is the NSA, but, of course we don’t know the NSA budget.

I think an even bigger problem would be that often when you add in expensive penalties it becomes cheaper to simply go after the messenger. After all in order to cash in on such a thing, you would have to prove you found it, prove you found it first, and prove that it really is an issue. At any of those stages a company with a vested interest in not paying out could cause difficulties.

Just look at the lengths vendors go to sweeping problems under the rug as it is, and those are just for PR reasons. Imagine what they would do if they also had to pay people.

You may be right, but did you read the section in the story about how Frei proposes setting up the vulnerability submission centers?

My comment on the submission centers is this: How would they make operational revenue? Would they get a share of the bounty, or would bug-hunters be required to pay to play?

It seems to me that each tier would need to have a source of revenue in order to function, and this would never get off the ground if it had to be funded by the government(s).

There would naturally have to be some checks and balances in here, and you do run the risk of some unscrupulous coders building vulnerabilities into the software to sell the information to someone else (the write me a minivan example), but you already have that, technically. An unscrupulous coder could write in vulnerabilities now and sell them on the black market, resulting in the same thing.

As a matter of fact, I’ll bet there are already some folks like this out there who build in something like this and then turn around and sell the zero-day on one of the forums.

I think the prices on this would eventually self-regulate as well. The balance would not come through “collateral” costs, though. It would be the cost to the business, but not limited to lost revenue or potential litigation damages; the companies would also have to factor in reputation costs and opportunity costs.

Honestly, I think every software vendor should begin offering this, anyway. In part to take away some government’s incentive to do it for them.

Simple: just follow the eBay system, with a 48-hour time limit on each auction. This will wake up companies like Yahoo, pronto.

First, let’s be crystal clear on who pays for cyber-crime: consumers. Businesses are allowed to outsource the costs of failing to secure their systems to their customers. That’s the state of American capitalism today. I say this to forestall the inane comments of teabaggers who will claim that any intervention will cost jobs.

Making businesses responsible for cyber-mistakes will have major impacts on how software is developed:

– Incompetent managers will no longer be able to demand that some arbitrary date must be met, forcing developers to take shortcuts and unintentionally include vulnerabilities. Companies will voluntarily create an internal ombudsman who will mandate good software quality because the fines will be bad publicity.

– Outsourcing will be forced to change. No longer will companies send work to India without testing the results thoroughly.

– Given that almost all PC hardware is manufactured in China, companies will be forced to reassess their policy of allowing Chinese companies to manufacture parts without testing the results thoroughly to ensure that hardware back-doors have not been added.

Anyone who has worked in the software business will appreciate the above. Those who have not, won’t.

Bravo.

“At 150k per vulnerability the problem then becomes finding anyone willing to perform penetration tests of any value.”

At 150k per vulnerability, if the money has to come from the company making the software you are killing a ton of free software or software made by small shops. Imagine what this kind of idea would have done during the early Mac and DOS era.

This is a good point. You’d almost have to tier it by size of the vendor, as well, or exempt all vendors under a certain size.

What it would have done is prevent the age of cybercrime we now live in, by improving software coding and review standards dramatically at a point in time where it would have been the easiest and cheapest to do so.

Nicely put.

It is like saying that a small winery should be excused if it occasionally produces a chardonnay which contains methyl alcohol.

I don’t see a need for this to be enforced.

I do see a possibility to correlate the height of a bug bounty for a piece of software with the amount the company would have to pay for insurance against security flaws, and with the maximum damage the insurance would cover.

With the possibility to offer insurance against damage caused by security flaws, there would be a big incentive for software companies to make their software more secure and offer a reasonably high bug bounty.

My opinion is to better spend the money to train and educate internet users better about internet security issues so that they don’t open up malware attachments or surf the wrong websites using unsecured software.

The problem is that too many people are using the internet without understanding the risk in what they are doing, because of a lack of knowledge. This is what cyber-criminals are preying on; they are just using security flaws in software to extend their ability to manipulate and con unsuspecting users.

Good point. Every day I see computers infected by fake installers from Google Earth, Skype, OpenOffice, etc. Users continue to use search engines to locate free software and Google allows the purveyors of this crapware to advertise. Today, a Google search for Skype still returns two paid ads for fake installers from “wwwappsforcomputernet” and “downloadinfoco”.

Bug bounties can possibly fix the automated scanning for vulnerabilities but it won’t fix stupid.

The internet has now become so ubiquitous for a wide range of users that it is up to the software companies to ensure that their products are safe. What other industry can get away with issuing faulty products and then blame the user for not being careful?

+ 1

Come on, we all know companies like Oracle and Apple will do absolutely nothing unless forced to by either legislation or in the face of a successful and massive lawsuit.

They simply have no skin in the game at present, and so have nothing to gain by agreeing to this.

Question – what about smartphone apps? Will these also be subjected to the same scrutiny?

“Come on, we all know companies like Oracle and Apple will do absolutely nothing unless forced to by either legislation or in the face of a successful and massive lawsuit.”

You forgot Facebook.

“what about smartphone apps? Will these also be subjected to the same scrutiny?”

They had better, given that sales of PCs are dropping fast. Microsoft’s introduction of Windows 8 was a tacit acknowledgment that smartphones and tablets are the future of personal computing.

Someone’s passing an excerpt of this off as their own over at slashdot.

http://it.slashdot.org/story/13/12/17/1332212/the-case-for-a-global-compulsory-bug-bounty?utm_source=rss1.0moreanon&utm_medium=feed

“Security experts have long opined that one way to make software more secure is to hold software makers liable for vulnerabilities in their products. This idea is often dismissed as unrealistic and one that would stifle innovation in an industry that has been a major driver of commercial growth and productivity over the years. But a new study released this week presents perhaps the clearest economic case yet for compelling companies to pay for information about security vulnerabilities in their products. Stefan Frei, director of research at NSS Labs, suggests compelling companies to purchase all available vulnerabilities at above black-market prices, arguing that even if vendors were required to pay $150,000 per bug, it would still come to less than two-tenths of one percent of these companies’ annual revenue. To ensure that submitted bugs get addressed and not hijacked by regional interests, Frei also proposes building multi-tiered, multi-region vulnerability submission centers that would validate bugs and work with the vendor and researchers. The questions is, would this result in a reduction in cybercrime overall, or would it simply hamper innovation? As one person quoted in the article points out, a majority of data breaches that cost companies tens of millions of dollars have far more to do with other factors unrelated to software flaws, such as social engineering, weak and stolen credentials, and sloppy server configurations.”

Can’t complain about that, Andrew; it links back to this site and is driving traffic here. 🙂

Ah. My bad, didn’t follow the link.

In general, I tend to agree, however:

1: Government. While they are likely the only ones with a big enough hammer to bonk big companies over the head, by involving the gov’t, the purity of the idea will be hopelessly corrupted, manipulated, and destroyed as it’s implemented.

2: Flexibility/speed. The IVPP *must* stay streamlined to quickly and efficiently receive, process, validate, and forward on the bugs that are found. This is another reason putting the gov’t in charge is a bad idea. Name one streamlined gov’t agency!

3: Jobs. Many here have commented on jobs being lost for those who will just find flaws all day – I for one doubt it. Yes, the prize is there, but look at the competition!

4: Fraud. Dilbert writes code, knows it’s flawed, and colludes with a friend to “find” the flaw.. profit. It could and would happen, but would certainly be a career-limiting move as companies crack down on those who consistently write junk code.

I like the idea, but looking at the history of government trying to mess with things they don’t understand, it’s never pretty.

Rather than “Bug Bounties”, I’d much rather see requirements for software testing and certification through third parties, an “Underwriters Laboratories” or “Insurance Institute for Highway Safety”, if you will.

We crash-test automobiles to ensure they’re safe – perhaps similar scrutiny is needed for modern software.

I’m with eCurmudgeon; the way for government to do this is via purchasing power.

Western governments have software testing capabilities, either in-house, or outsourced. They use these to evaluate products for inclusion in secure environments.

They merely need to tweak their own purchasing, and IT rules, to broaden the scope of where these rules apply, and the world will change to comply since they represent the largest coherent group of consumers.

First time one of these turns to a vendor and says “we aren’t using that till your apps pass our fuzz test” and suddenly that vendor will start doing fuzz testing (or become irrelevant).

Two things need to change, education of big purchasers, AND availability of testers. Bounty hunting is usually restricted to post sale, which is simply too late.

The pressure in software is always to deliver on time and on budget, and generally, even in quite sensitive environments, the budget and mandate for doing it right, or for imposing quality standards, is limited or non-existent.

Use the same standards for software companies as for civil engineers. This will work well (imo).

Although 150k is a decent price for vulns, it won’t entice those capable of writing and selling finished exploits for >$500k a pop.

After thinking about it for a while and reading NSS Labs’ report, here is my proposal.

All users of the Internet benefit from botnet take-downs and vendor payments for exploits in the same sense that even those imbeciles who refuse to vaccinate themselves or their children benefit from those who do via herd immunity. We need to make all users pay.

We need a mix of government intervention and private industry control. The government will enact a tax of some sort to pay for this scheme. We can argue as to the form of the tax — penny per email, fraction of a penny per packet transmitted, $X per hour of Internet use, whatever — but this money will be used to fund an industry group. By the way, if our dysfunctional government fails to pass this tax as they probably would do, the ISPs could enact it by themselves, with foreign ISPs blocked from the West until they join the party.

This group cannot allow itself to be controlled by selfish, greedy corporations, e.g. Google and Facebook, or dangerous and/or criminal groups, e.g. Huawei, any Chinese government agency, or Vladimir Putin’s thugs, but rather by interested parties who do not have a personal axe to grind. Companies like the anti-virus vendors, Microsoft, Cisco, Fedora Linux, Mozilla, HP, IBM, and the like are prime candidates. Neutral people like Brian should sit on the board, but people like Bruce Schneier who blame all of the world’s problems on the NSA should not. I am certainly not saying that the NSA is benevolent, however, just that a sense of balance and sanity must be maintained.

This group will become a corporation in itself. It will hire software engineers and IT personnel to become the world’s exploit detectives. Their salaries will be paid via the aforementioned tax. This will not be a bureaucratic company where people can never be fired. This group must not be outsourced to India or China for many obvious reasons. Its employees should be a mix of nationalities, but certain people, e.g. Chinese nationals, should be excluded (read Reuters’ article “How China’s weapon snatchers penetrate American defenses” if you disagree). Offices in North America, Europe, and Asia would all be maintained to allow for 24-hour exploit work. Some form of security clearance would need to be run for each employee to reduce the likelihood of a Russian mafia insider gaining entrance. One way to be hired would be to find an unknown exploit and present it to the gang.

I eagerly await the horseshoes which will be thrown at me.

This is much more likely to work as an industry consortium or non-profit NGO than a government-run program, IMO. The big software players would fight the idea of a government-run program, but might support a self-regulated method such as the author suggests in passing on p.15.

Such an org would need to be able to 1) Determine the going price of different types of vulnerability, 2) Independently verify vulnerabilities, perhaps through third party contractors, and 3) Notify vendors and broker transactions, presumably taking a small cut to finance the non-profit’s continued operation.

If a large company is notified by a legitimate source that a vulnerability in their software is available on the market and likely to sell for $x based on severity, they’re likely to take that seriously… if for no other reason than that their clients’ lawyers can come after them later if they don’t act.

After reading the report, it seems to me that this process would work very well. The numbers are there to back it up. Why should we settle for products that are inferior or have defects? We don’t settle for that in our consumer goods, and our governments protect us from malicious products and from failed products that cause human harm.

Why should software companies be any different?

They should not be exempt from the same laws that govern our autos and airplanes.

We should have legal recourse to recover our losses if we are left damaged by a flaw in a purchased product.

The law should be changed. If they were held responsible for leaving a gaping hole in a product that criminals can use to make money, they would be more interested in producing a better product.

The method of encouraging the reporting of flaws for money would help remove the edge that nation states and criminals have today.

It is true that humans will continue to produce flawed code and flawed electronics, but if we have a system like this there would be a lot less of it.

It is also true that humans will continue to be the one weakness in any security system. Con men have been around for years and they are going to be fooling trusting humans forever.

No point in trying to fix it all at once. This is a fabulous start!

“Come senators, congressmen

Please heed the call

Don’t stand in the doorway

Don’t block up the hall

For he that gets hurt

Will be he who has stalled

There’s a battle outside

And it is ragin’

It’ll soon shake your windows

And rattle your walls

For the times they are a-changin’.”

Bob Dylan’s song “The Times They Are A-Changin’” seems like a perfect description of the general situation. Now for the boring details. The problem is one of crime. “Estimates of the global damage from cybercrime ranges from the low billions to hundreds of billions of dollars annually.” The word billions usually gets people’s attention and fully justifies immediate action. Regrettably, society only changes after huge problems have hit. The Crash of 1929 led to the creation of the SEC and other institutions that we now consider absolute necessities. Pollution-related problems led to the creation of the EPA to guard our environment. Quality problems in Japanese manufacturing were severe in the 1950s. Enter W. Edwards Deming and, later, Six Sigma. We have fixed problems before, and now we need new institutions based on an international system to fix the security problems with the Internet.

Addressing “Frei suggests creating a multi-tiered organization that would consist of several regional or local vulnerability submission centers — perhaps one for the Americas, another for Europe, and a third for Asia,” I would add some auditing structure to prevent government tampering or undue influence in the area served. I am trying to avoid saying the word “China”.

“Security experts have long opined that one way to make software more secure is to hold software makers liable for vulnerabilities in their products. This idea is often dismissed as unrealistic and one that would stifle innovation in an industry that has been a major driver of commercial growth and productivity over the years.” Please consider UL (Underwriters Laboratories). They have been around since the nineteenth century testing all kinds of things. Who would be crazy enough to buy an appliance that is not UL approved? Consider the new system to be a “UL” for the Internet.

Lastly, while trying to wrestle free a cart from the cart corral in the local grocery store, I overheard two gentlemen discussing the disaster that had just befallen one of them. The man’s PC had just been encrypted by ransomware and the ransom was $300.

I see one huge problem with the global bounty program.

Let’s say you find a security hole. And around the same time three more people find the same bug. Who would get paid? What stops someone from entering the bounty program and turning around and selling it on the black market to make more money?

Also, on another note, unless the bounty program is run efficiently, it can waste money. The argument that it’s only .1% of the GDP sounds great to run a program until you want to run 10^1000000 programs.

>I see one huge problem with the global bounty program.

>Let’s say you find a security hole. And around the same time three more people find the same bug. Who would get paid?

That’s an easy one: first come, first served (by UTC timestamp, dd.mm.yy.hh.mm.ss).

The odds of more than one submission of the same bug arriving in the same second would be close to zero.

If two submissions of the same bug arrived at the very same time, that would very likely indicate an automated submission and foul play.
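The first-come, first-served rule could be sketched roughly as follows. This is a minimal Python illustration, not part of Frei’s proposal: it assumes each submission carries a timezone-aware timestamp and a canonical bug ID assigned during triage (deciding that two reports describe the same bug is the genuinely hard part and is not modeled here). Following the dd.mm.yy.hh.mm.ss format above, times are compared at one-second resolution, and exact ties are flagged for review rather than paid automatically. The Submission type and award function are hypothetical names.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Submission:
    researcher: str
    bug_id: str             # hypothetical canonical ID assigned during triage
    submitted_at: datetime  # must be timezone-aware


def _to_utc_second(ts: datetime) -> datetime:
    """Normalize to UTC and drop sub-second precision (dd.mm.yy.hh.mm.ss)."""
    if ts.tzinfo is None:
        raise ValueError("timestamps must be timezone-aware")
    return ts.astimezone(timezone.utc).replace(microsecond=0)


def award(submissions):
    """Return (winners, ties).

    winners maps bug_id -> the single earliest researcher;
    ties maps bug_id -> sorted researchers sharing the earliest second,
    which are held for manual review instead of being paid automatically.
    """
    by_bug = defaultdict(list)
    for sub in submissions:
        by_bug[sub.bug_id].append(sub)

    winners, ties = {}, {}
    for bug_id, subs in by_bug.items():
        first = min(_to_utc_second(s.submitted_at) for s in subs)
        earliest = sorted({s.researcher for s in subs
                           if _to_utc_second(s.submitted_at) == first})
        if len(earliest) == 1:
            winners[bug_id] = earliest[0]
        else:
            ties[bug_id] = earliest
    return winners, ties


if __name__ == "__main__":
    t0 = datetime(2013, 12, 5, 14, 0, 0, tzinfo=timezone.utc)
    subs = [
        Submission("alice", "BUG-1", t0),
        Submission("bob", "BUG-1", datetime(2013, 12, 5, 14, 0, 5, tzinfo=timezone.utc)),
        Submission("carol", "BUG-2", t0),
        Submission("dave", "BUG-2", t0.replace(microsecond=500000)),
    ]
    print(award(subs))
```

Truncating to whole seconds mirrors the timestamp format suggested above; a real system might keep finer resolution, but it would still need a rule for true ties, which is why they are surfaced rather than silently broken by list order.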

>What stops someone from entering the bounty program and turning around and selling it on the black market to make more money?

Nothing will stop someone from selling a bug twice. However, once the bug has been submitted, mitigation is already possible, and building a well-functioning exploit is probably no longer worth the effort. Plus, there will be an audit trail, since submissions are unlikely to be anonymous or paid in bitcoin.

This raises the question of how researchers will register for such a program, and what technological hurdles will have to be overcome to make it fly from an administrative standpoint.

The information would be very sensitive, and since Dr. Frei recommends assuming that networks are always compromised, it would be interesting to learn how this problem could be dealt with.

>Also, on another note, unless the bounty program is run efficiently, it can waste money. The argument that it’s only .1% of the GDP sounds great to run a program until you want to run 10^1000000 programs.

Please look at the numbers again carefully – I consider this objection invalid.

> Frei suggests creating a multi-tiered organization that would consist of several regional or local vulnerability submission centers — perhaps one for the Americas, another for Europe, and a third for Asia.

Yay, more supranational organizations. Maybe we can call it the WbTO — World bug Tracking Organization.

The idea of internalizing costs is great, but mandatory bug bounties won’t accomplish that. Not all zero-days are created equal, and the best 5% are worth MUCH MUCH more than $150k. Your scheme merely ensures that the crappy zero-days will get fixed.

At the end of the day, tort liability and (ugh) lawsuits are the only scheme for product liability that has a track record of even partially working. It’s great to try to improve on that, but don’t fall for the hubris of thinking the IT security industry is going to come up with some great legal/policy breakthrough that the rest of the world somehow just overlooked for the last 50-odd years.

> the best 5% are worth MUCH MUCH more than $150k. Your scheme merely ensures that the crappy zero-days will get fixed.

I should add that the hard part — from the perspective of a regulatory organization — is assigning a value to a zero-day. Flat-rate won’t work, and any sort of committee/advisory-board scheme will suffer from regulatory capture… there are just too many lobbying dollars in the software industry for that to not happen.

We’ve got these…..

http://www.cert.org/vuls/

What we don’t have is coordination between sites. Nothing does it all. Nothing can do it all. There is a TON of software produced every year. Go look at places like CNET; they have software rated everything from gold to commode level.

The problem with all of this is that the solution is NOT thought of first. An idea gets created and released, and since it’s new, everyone thinks it’s not flawed. Oh, it’s flawed; it’s just a new world of discovery, and people are curious and anxious, much like a gazer in Times Square trying to take it all in. In the meantime, the train of progress is already speeding down the track, and change management (or its successor) falls miserably further behind each day.

Start all of this when the operating system giants come out with their next big software system(s), and you have a baseline. Simply do not let software run unless it has been graded/approved by the “software organization.” Should a person want to use crappy software, make them jump through hoops in order to use every piece of… crappy software that has not been tested.

Then you get into testing, and with every version that comes out, it’s a near-overwhelming job for an overwhelmed staff at the “software organization” to keep up with what is submitted. People don’t like anyone cornering the market, but in some cases, if controlled, it would work a lot better than what we have now.

In my opinion, the blackhats already have a bounty program going, but it covers only a small population of devices and/or software.

This would all be fixed if all software that was produced were tested, much like Common Criteria evaluation is done. Testing would assign a report-card-style grade, and then it’s up to the people to decide whether the software is worth their money. Yeah, I know. People can read and pay attention, but some act as if they are back in the caveman days and operate as such. They don’t pay attention and thus add to the pile of problems.

Another thing: it’s a trendy idea. With trends come the miscreants and their bandwagon advertisements filled with evil software.

It’s quite simple, actually. If people would show a little pride in ownership and do the fuzzing and SQL injection prevention (like at this site: https://www.owasp.org/index.php/SQL_Injection_Prevention_Cheat_Sheet), then practice makes things better.
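The core defense in that OWASP cheat sheet is to use parameterized queries (prepared statements) instead of building SQL strings from user input. A minimal sketch using Python’s built-in sqlite3 module, with a hypothetical users table and hypothetical function names, shows the difference: the concatenated query lets a classic ' OR '1'='1 payload return every row, while the parameterized one treats the payload as an ordinary string.

```python
import sqlite3


def make_demo_db() -> sqlite3.Connection:
    """Hypothetical in-memory table used only for this illustration."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])
    return conn


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list:
    # VULNERABLE: user input is pasted directly into the SQL text.
    query = "SELECT id, name FROM users WHERE name = '" + name + "'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str) -> list:
    # Parameterized: the "?" placeholder makes the driver bind `name` as data,
    # so quotes inside it cannot change the structure of the query.
    return conn.execute("SELECT id, name FROM users WHERE name = ?", (name,)).fetchall()


if __name__ == "__main__":
    conn = make_demo_db()
    payload = "x' OR '1'='1"
    print(find_user_unsafe(conn, payload))  # every row leaks
    print(find_user_safe(conn, payload))    # no rows: nobody has that name
```

The fix costs nothing in readability, which is part of why the cheat sheet lists it as the primary defense rather than input escaping.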

Until you get people to pay attention, and until you get the government to get off its hindquarters and become proactive, this is something that will be addressed again, probably in almost the same context, in another 3-5 years.

Nothing is going to change unless the people decide to accept change.

I think tz hit upon a big factor in the whole issue: the EULA. Liability avoidance is only part of it. There are also clauses banning reverse engineering, benchmarking, or any other activity potentially detrimental to the image of the software or vendor, including security testing.

Lawsuits against vendors for liabilities will not gain much momentum until these and other clauses in EULAs are put to judicial review.

Absolutely agree. These EULAs put liability so far out of arm’s reach for these companies that it’s time for judicial review of them. I read somewhere a while back that there were stirrings in Congress about these EULAs, prompted by a few cases where a judge ruled a user couldn’t be held responsible because the language used in the EULA couldn’t be understood by the average layperson. Time to take it further and eliminate this insulation from liability. That said, Frei’s idea sounds nice, but I keep telling myself we wouldn’t need an elaborate tiered organization like this if software vendors audited their own products before they were sold/released: “I will sell no software before its time.” Then a vendor wouldn’t have to worry about penalties for liability from bugs that were overlooked. Right?

Must be the most stupid idea of 2013 🙂

First sell it to the underground, then sell it to the “government.” Double the money, dudes 🙂

Especially if governments already know about at least 85 zero-day exploits on any given day of the year, and nobody gives a F……

The system will be abused.