Roughly two years ago, I began an investigation that sought to chart the baddest places on the Internet, the red light districts of the Web, if you will. What I found in the process was that many security experts, companies and private researchers also were gathering this intelligence, but that few were publishing it. Working with several other researchers, I collected and correlated mounds of data, and published what I could verify in The Washington Post. The subsequent unplugging of malware and spammer-friendly ISPs Atrivo and then McColo in late 2008 showed what can happen when the Internet community collectively highlights centers of badness online.

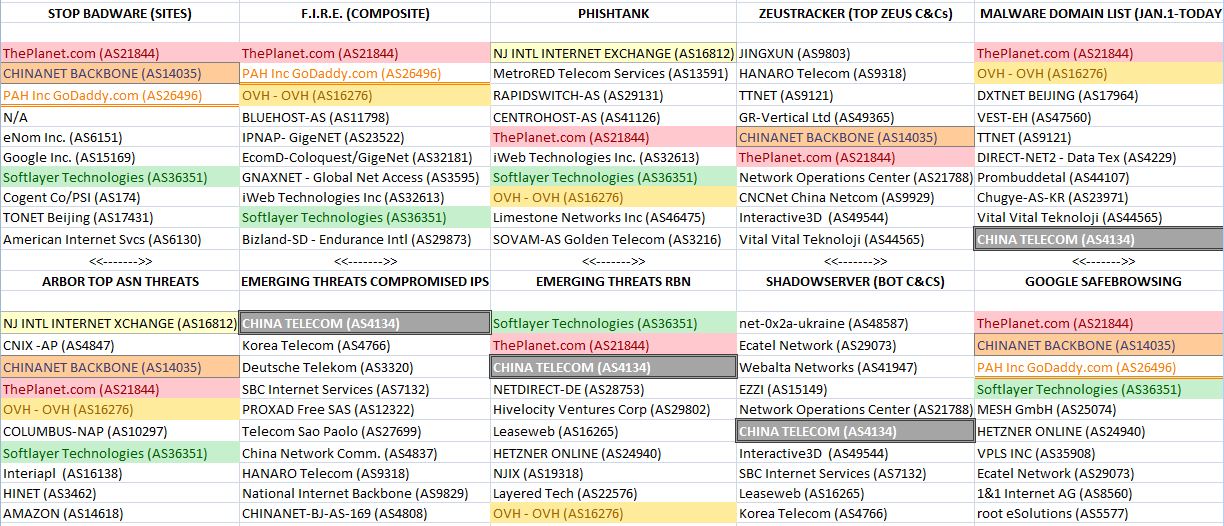

Fast-forward to today, and we can see that there are a large number of organizations publishing data on the Internet’s top trouble spots. I polled some of the most vigilant sources of this information for their recent data, and put together a rough chart indicating the Top Ten most prevalent ISPs from each of their vantage points. [A few notes about the graphic below: The ISPs or hosts that show up more frequently than others on these lists are color-coded to illustrate consistency of findings. The ISPs at the top of each list are the “worst,” or have the greatest number of outstanding abuse issues. “AS” stands for “autonomous system” and is mainly a numerical way of keeping track of ISPs and hosting providers.]

What you find when you start digging through these various community watch efforts is not that the networks named are entirely or even mostly bad, but that they do tend to have more than their share of neighborhoods that have been overrun by the online equivalent of street gangs. The trouble is, all of these individual efforts tend to map ISP reputation from just one or a handful of perspectives, each of which may be limited in some way by particular biases, such as the type of threats that they monitor. For example, some measure only phishing attacks, while others concentrate on charting networks that play host to malicious software and botnet controllers. Some only take snapshots of badness, as opposed to measuring badness that persists at a given host for a sizable period of time.

Also, some organizations that measure badness are limited by their relative level of visibility or by simple geography. That is to say, while the Internet is truly a global network, any one watcher’s view of things may be colored by where they are situated in the world geographically, or where they most often encounter threats, as well as their level of visibility beyond their immediate horizon.

In February 2009, I gave the keynote address at a Messaging Anti-Abuse Working Group (MAAWG) conference in San Francisco, where I was invited to talk about research that preceded the Atrivo and McColo takedowns. The biggest point I tried to hammer home in my talk was that there was a clear need for an entity whose organizing principle was to collate and publish near real-time information on the Web’s most hazardous networks. Instead of having 15 or 20 different organizations independently mapping ISP reputation, I said, why not create one entity that does this full-time?

Unfortunately, some of the most clear-cut nests of badness online (the Troyaks of the world and other networks that appear to be designed from the ground up for cyber criminals) are obscured for the most part from surface data collation efforts such as my simplistic attempt above. For a variety of reasons, unearthing and confirming that level of badness requires a far deeper dive. But even at its most basic, an ongoing, public project that cross-correlates ISP reputation data from a multiplicity of vantage points could persuade legitimate ISPs, particularly major carriers here in the United States, to do a better job of cleaning up their networks.

What follows is the first in what I hope will be a series of stories on different, ongoing efforts to measure ISP reputation, and to hold Internet providers and Web hosts more accountable for the badness on their networks.

PLAYING WITH FIRE

I first encountered the Web reputation approach created by the researchers from the University of California Santa Barbara after reading a paper they wrote last year about the results of their having hijacked a network of drive-by download sites that is typically rented out to cyber criminals. Rob Lemos wrote about their work for MIT Technology Review last fall.

Shortly after the Atrivo and McColo disconnections, the UCSB guys started their own Web reputation mapping project called FInding RoguE Networks, or FIRE.

Brett Stone-Gross, a PhD candidate in UCSB’s Department of Computer Science, said he and two fellow researchers there sought to locate ISPs that exhibited a consistently bad reputation.

“The networks you find in the FIRE rankings are those that show persistent and long-lived malicious behavior,” Stone-Gross said.

The data that informs FIRE’s Top 20 comes from several anti-spam feeds, such as Spamcop and Phishtank, and includes data on malware-laden sites from Anubis and Wepawet, open-source tools that let users scan suspicious files and Web sites. Stone-Gross said the score is computed from how many botnet command-and-control centers, phishing sites, and malware exploit servers for drive-by downloads are hosted at those ISPs, but only when they have stayed at a given ISP for more than a certain number of days.

“The threshold is about a week. Basically you get points for each bad server you have, and then it’s scaled according to size,” he said. “We take the inverse of the network size (the approximate number of hosts) and multiply it by the aggregate sum of the network’s malicious activities.”
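To make that arithmetic concrete, here is a minimal Python sketch of the kind of scoring Stone-Gross describes. It is not FIRE’s actual code: the one-point-per-server weighting, the `BadServer` record, and the function name `fire_style_score` are assumptions made for illustration. Only the roughly one-week persistence threshold and the scaling by inverse network size come from his description.

```python
from dataclasses import dataclass

# "The threshold is about a week" (assumed here to mean 7 days or more).
PERSISTENCE_THRESHOLD_DAYS = 7


@dataclass
class BadServer:
    """Hypothetical record for one malicious server seen at a network."""
    ip: str
    kind: str           # e.g. "botnet_c2", "phishing", "exploit"
    days_observed: int  # how long it has been hosted at this network


def fire_style_score(servers, network_size):
    """One point per persistent bad server, scaled by 1 / network size."""
    if network_size <= 0:
        raise ValueError("network_size must be a positive host count")
    # Only servers that have persisted past the threshold earn points.
    points = sum(
        1 for s in servers if s.days_observed >= PERSISTENCE_THRESHOLD_DAYS
    )
    return (1 / network_size) * points


# Illustrative data only: documentation-range IPs, made-up host counts.
small_host = [
    BadServer("192.0.2.10", "botnet_c2", 30),
    BadServer("192.0.2.11", "phishing", 12),
    BadServer("192.0.2.12", "exploit", 2),  # too short-lived to count
]
print(fire_style_score(small_host, network_size=1_000))
```

Under these assumptions, a 1,000-host network with two long-lived bad servers scores far worse than a million-host network with twenty, which is the point of scaling by size.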

Stone-Gross said about half of the Top 20 are fairly static. “GigeNET, for example, seem to be leaders in hosting IRC botnets, and this has roughly been the case as long as we’ve been keeping track.” GigeNET did not return calls seeking comment.

Even compensating for size, FIRE lists some of the world’s largest ISPs and hosts conspicuously at the top (worst) of its badness index. However, FIRE’s findings are consistent with those that measure badness from other perspectives, and two major US-based networks show up time and again on most of these lists: Houston-based ThePlanet.com, and Plano, Texas-based Softlayer Technologies.

Stone-Gross said a major contributor to the badness problem at many big hosts is the fact that most of their tenants are absentee landlords, some of whom have rented and sub-let their places out to itinerant riff-raff.

“What happens is they’ll have maybe a few hundred or even thousand resellers, and those resellers often sell to other resellers, and so on,” he said. “The upstream providers don’t like to shut them off right away, because that reseller might have one bad client out of 50, and they’re not law enforcement, and they don’t feel it’s their job to enforce these kinds of things.”

Sam Fleitman, chief operating officer at Softlayer, said the company has been trying to become more proactive in dealing with abuse issues on its network. Fleitman said his abuse team has been reaching out to a number of groups that measure Web reputation to see about getting direct feeds of their data.

“Most hosting companies are reactive…finding and getting rid of problems that are reported to them,” Fleitman said. “We want to be proactive, our goals are aligned, and so we’ve been trying to get that information in an automated way so we can take care of these things quicker.”

Indeed, not long after the UCSB team posted their FIRE statistics online, Softlayer approached the group to hear suggestions for reducing their ranking, Stone-Gross said.

“They came to us and said, ‘We’d like to improve that,’ so we had a talk with them and gave them a whole bunch of suggestions,” Stone-Gross said. “That was about three weeks ago, and they’ve since gone from being consistently in the Top 3 worst to usually much lower on the list.”

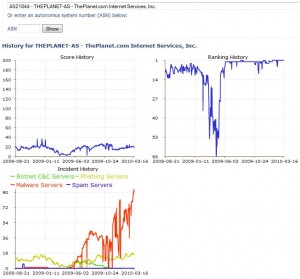

What’s probably most distinctive about FIRE’s approach is that it allows users to view not just the volume of reported abuse issues at a given network, but also to drill down into specific examples and even chart the life of those examples over time.

For instance, if you click this link you will see the reputation history for ThePlanet.com. The graphic in the upper right indicates that, aside from a brief period in the middle of 2009, ThePlanet has been at or near the top of the FIRE list for most of the last 18 months. Stone-Gross said that one gap corresponds to a time last April when the university’s servers crashed and stopped recording data for a few days.

Click on any historic points in the multicolored line graphs in the bottom left and you can view the IP addresses of phishing Web sites, malware and botnet servers on ThePlanet.com over that same time period, as recorded by UCSB.

ThePlanet’s Yvonne Donaldson declined to discuss FIRE numbers, the abuse longevity claims, or the company’s prevalence on eight out of ten of the reputation lists that flagged it as problematic. In a statement e-mailed to Krebs on Security, she said only that the company takes security very seriously, and that it takes action against customers that violate its acceptable use policies.

“When we find issues of a credible threat, we notify the appropriate authorities,” Donaldson wrote. “We may also take action by disabling or removing the site, and also notify customers if a specific site they are hosting is in violation.”

Once again, an excellent article, Brian. I feel like you gave us all a rifle scope to focus in on the problem. Very interesting!!!

I’m sure most of us can’t tell you how much we appreciate your efforts here!

Brian;

Very interesting article on a very important subject. I would like to ask you this: Have you considered that some of these ISP’s that you have named here, especially in the U.S., are quietly working with LEO’s and that is why they allow the “badness” to continue on in their networks?

Yep. I’ve definitely considered that, and even heard that as a factual statement from some sources re: some names on the list(s).

I also have gotten a lot of feedback from people saying, “congratulations, you’ve got a nice list of the largest ISPs there, what do you expect?”

My first response is, I heard this a lot about McColo and Atrivo; you can’t name/shame these guys: there are too many law enforcement investigations going on there. True, the large networks named over and over again on these various lists are not even on the same…er…planet, in terms of badness concentrations as McColo, 3fn, et. al. But then again, maybe that tells us we are measuring the wrong things if they lead to such obvious results?

For that matter, perhaps we shouldn’t be surprised at the results. After all, where are the biggest centers of crime in the real world? They’re at the biggest population centers of the world, usually. That’s a somewhat obvious conclusion, but it doesn’t make the crime problem in those cities any less of a reality.

I’ve been checking FiRE on a daily basis for the last several months after I discovered their website while looking for information on Softlayer. I work with an online bill pay company and Softlayer has many proxy servers that are used to try to perpetrate fraud. So not only are these ISPs hosting malware, but they are being used in the other main focus of your research: bank fraud. I won’t go into detail about how much I’ve seen come from Softlayer, but there have been several cases. In fact, I monitor their entire block for suspicious behaviour, along with FDCservers, which is not mentioned in this article but often shows up in FiRE’s top 20 and also supplies apparent proxies that are used in fraud cases.

I’ve long suspected bank fraud and web crime go hand in hand. The criminals pay for their services with ill gotten cracked account information, from merchants mostly, I suspect. And rob their bank accounts to pay for web services. It just makes sense that they use this strategy to cover their tracks.

I have personal experience in this. I still haven’t ID’ed the merchant responsible for leaking my data, but I’m working on it.

And in an ironic twist, I just checked today’s numbers and Softlayer is back in their usual 3rd spot.

Nice writeup as usual Brian :o)

SoftLayer, FDCServers, NetDirect, Hetzner, and many more I could name (and plan on shaming), have had “issues” (to put it politely) with badness for a number of years, and whilst I’ve heard their cries of “It’s not our fault, really, we do deal with this stuff”, have consistently ignored almost every single abuse report I’ve sent to them.

Interestingly, though not surprisingly, the only time we do ever seem to hear from these ISP’s, is when they are actually named and shamed – and then of course, they pull out the PR rubbish we’re all familiar with.

I’m not buying it.

> have consistently ignored almost every single abuse report I’ve sent to them.

Maybe, then, abuse reports should not be a private thing?

Make some issue-tracker-like service, with peer-reviewed karma (like on Slashdot) to filter out false positives; make some tools that would forward reports to the proper ISP channels (good old abuse@ is not always working or considered the best channel); and maybe add some semi-automatic tools that would aid web citizens in investigating and identifying malicious behaviours (the simplest is just a checklist plus suggestions, like “run whois domain.tld, look for a line like ‘xxxx’”, so users would know how to get basic info).

Then, in time, that “PR rubbish” would be confronted with statistics of sent (and ignored) reports.

Excellent, Brian!

The cause of this crap on the net is companies out there not investing in a functional abuse@ desk infrastructure.

Some hosters, like hosteurope or domainfactory, have a zero-tolerance policy, closing cases as they arise in nearly zero time…

Others, as Steven posted before me, show a lack of reaction: netdirect and godaddy, for example….

you may complete your research with our data.

have a look at our stats (page may load slow because all is computed on the fly …)

http://support.clean-mx.de/clean-mx/virusesstats

If you have any questions feel free to contact me by mail…

— Gerhard

… just forgot to mention FIRE…

I dropped several mails to them for exchanging data but got no response at all.

you may have a look at their report for eg. AS9318

http://maliciousnetworks.org/chart.php?as=9318

and our facts:

http://support.clean-mx.de/clean-mx/asweather?as=as9318

so FIRE does not have enough data, in my opinion

— gerhard

Thanks Gerhard:

For that support.clean-mx link. It looks like mitigating iFrame exploits would take a huge bite out of crime, by looking at the pie charts.

So by folks disabling iFrame in the Internet Explorer browser, they could close a big vector – I gather.

I suppose this would limit one’s functionality on many sites, but I’ve never tested it. I’m also not sure which one of my in-depth defenses is mitigating this problem. When I go to IT security test sites to see how vulnerable I am to iFrame attacks, the vector doesn’t work. However, I don’t disable iFrame in my IE 8 browser. I do this because it is a honeypot anyway, and my clients will not, or cannot disable iFrame either.

Perhaps the medium security setting on IE is doing this?

Hi Gerhard,

FIRE does not display as much malicious activities, because we remove many of the compromised servers. We did respond to your emails, so feel free to drop me another email if you would like to collaborate.

Hi Brett,

I dropped you a note by email; thanks for the quick response here!

— gerhard

Brian,

The fact that you call attention to on-going security problems and providing insightful analysis is why I love reading your blog! I’m glad for today’s article, but I think perhaps the best conclusion we can draw from your assembled charts might be “we’re currently measuring the wrong things.”

New York City has more crime than Birmingham (where I live), because it has eight times as many people as we do. So it wouldn’t be especially useful to show a chart showing the number of robberies in Birmingham and New York. That’s why crime is not reported by number of incidents, but by number PER CAPITA.

It’s a lot more interesting that “HiVelocity Ventures” or “Vital Vital Teknoloji” has a lot of badness than that “GoDaddy” does. That would be like pointing out that a town of 20,000 had the same number of car thefts as Chicago. That is something that needs to be probed more deeply!

The challenge for all of your contributing list makers is “how do we show ‘badness per capita'” on these large networks? Until we can address that challenge, these charts are only useful as scare tactics. If you can tell me a network is “50% malicious” or even “25% malicious”, I’ll block it. But, when you say networks with 10 million IP addresses have more crime than networks with 10 thousand, I don’t know that I have learned anything actionable.

Thanks for listening! Keep bringing up issues that we need to discuss as a community! I look forward to seeing how the community responds!

Gary Warner

Director of Research in Computer Forensics

The University of Alabama at Birmingham

@Gary Warner

something else to think about – all these providers have the equal of “check-points” on the edge of their networks – routers, firewalls and IDS’s. It would be akin to a wall around NYC with selective entry and exit points. There is no possible way to track all fictitious users that registrars allow on the net, hence other folks saying that we should hold some rogue registrars accountable for. If there is no monetary reward for Big ISP to keep his network space clean, he has little incentive to do so. Perhaps monetary penalties would.

You are one of the few people here who are looking at this and making any sense of it. I’d be very careful about blocking based on it until you gave me a very specific situation though. If you are another ISP it would be unethical to start blocking. You can’t know what your customers might or might not want to connect to. They’ve paid you for the right to connect to the Internet. Not to secure them from it even if that is something that you’ve advertised heavily.

On the other hand, bigger players should have much more resources to systematically deal with botnets and the like. Therefore, I think it’s quite all right to block the likes of The Planet and Softlayer, as they really have no excuses that I can accept.

A lot of these are in the blocking hosts file that I use religiously and highly recommend to every home or small business user (larger enterprises use other methods to block this stuff). It’s updated at least monthly to keep up with the changing landscape. The blocking hosts file prevents your computer from connecting to these known bad sites regardless of what application you use to access the Internet. I strongly believe the hosts file alone has kept malware from even getting to my systems as I’ve never had a malware infection. I still use a layered defense (defense in depth) should something get past the hosts file.

MVPS Blocking Hosts file

http://www.mvps.org/winhelp2002/hosts.htm

A great tool for Internet Explorer users; for those who need ActiveX blocking, SpywareBlaster provides a smaller hosts file that is nearly as effective. I think Javacools is trying to give the advertising industry a break, if they behave. They know that is what is paying for all the nice sites we all like to go to for free!

However, you are right, my laptop hasn’t been drive by attacked in years since I put MVPS on it! I haven’t read it for a long time, but it used to be more granular than blocking whole domains like these.

I’d say it is at least as good as Adblock Plus on FireFox! Maybe better!

Your chart is hugely misleading Brian. I mean, you’re highlighting China Backbone as one of the worst hosts on the basis that it has such a massive address space, and provides internet to most of China; of course it’s going to have more instances than most other hosts. There are much more accurate measures of internet badness available than you have mentioned.

Will — Your comment would seem to indicate that these are my figures. They’re not. I’m just collating information gathered by lots of different reputation groups.

The statement I *think* I hear you making is that we’re measuring the wrong things, and on that I agree (see my previous comment above).

I realise that they are not your figures, but by selectively highlighting certain hosts it suggests that those are the hosts you personally are “naming and shaming”.

But I agree that more crime is more crime, and it isn’t excused on the basis of them being too big to take down, but everything requires context.

Will: The only host that was “selectively” chosen was NJ Intl Internet Exchange, basically because it was #1 on two lists. The rest were highlighted because they appeared on four or more lists.

The only way we’re ever going to be able to reliably and accurately determine the “real” data as far as who’s the worst per capita is by vendors ceasing to concentrate on $$ and collaborating, i.e. combining ALL of the vendors’ lists/databases (or, as Brian put it, one entity instead of x separate ones) and cross-referencing the data once combined. Until that happens (and, based on attitudes I’ve seen from several vendors, it’s highly unlikely), it’s always going to be inaccurate in one form or another.

Hello,

Re. the comparison between big and small ISPs, it is indeed possible to compute some kind of percentage of bad sites per 1000 of hosted sites.

However, one thing is fairly independent of size: the lifetime of a site from the first request to remove it for badness reasons…

If this value is collected somewhere, then it would be interesting to know it, because it is quite a strong hint on the real willingness of the ISP to act against bad sites.
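The takedown-lifetime measure suggested in this comment could be sketched roughly as follows. This is only an illustration under stated assumptions: the report tuples, the ISP names, and the `median_days_to_takedown` function are hypothetical, and sites that are still online are counted up to an `as_of` date rather than dropped.

```python
from collections import defaultdict
from datetime import date
from statistics import median


def median_days_to_takedown(reports, as_of):
    """Median days from first removal request to takedown, per ISP.

    reports: iterable of (isp, first_reported, removed) tuples, where
    removed is None if the site is still online on the as_of date.
    """
    lifetimes = defaultdict(list)
    for isp, first_reported, removed in reports:
        # Sites never removed are measured up to the as_of date.
        end = removed if removed is not None else as_of
        lifetimes[isp].append((end - first_reported).days)
    return {isp: median(days) for isp, days in lifetimes.items()}


# Made-up reports for two hypothetical ISPs.
reports = [
    ("FastHost", date(2010, 3, 1), date(2010, 3, 2)),
    ("FastHost", date(2010, 3, 1), date(2010, 3, 4)),
    ("SlowHost", date(2010, 3, 1), None),  # never removed
]
print(median_days_to_takedown(reports, as_of=date(2010, 3, 31)))
```

A median is used here instead of a mean so that one site that lingers for months does not swamp an otherwise responsive abuse desk; that choice is also an assumption, not something the comment specifies.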

There needs to be some sort of focus on domain name registrars as well, not just ISP’s. eName.com and the infamous Xin Net Technology (http://xinnet.com/) are churning out tens of thousands of spam domains a week. Taking action against some of the ISP’s on the above lists will not dent the flow of spam from those sources.

Microsoft’s cooperation with DOJ on this war, and the other efforts alluded to here have already had an effect on my spam box filter, I’m getting less and less every day.

Either that or the providers are finding ways to improve filtering in less than two weeks. This I would believe with Live Hotmail, but not Postini. Postini has been slow as molasses in reacting to the scourge.

I think that was a good first step.

A recent blog post linked to the Microsoft Digital Crimes Unit Facebook page; it’s an interesting way to keep an eye on what MS is up to:

http://www.facebook.com/#!/pages/Microsoft-Digital-Crimes-Unit/224054150085?ref=ts

Really liked your article, Brian, very nice work as always.

I did want to take issue with one comment that has been offered in response to it, and that is the argument that abuse rates should be normalized against total customer counts.

Sites which are being targeted for abuse on the Internet can’t tell that the hundred (or thousand!) abuse sources hitting them from a given ASN represent only a fraction of that ASN’s total customer population, and, even if they could, it doesn’t really change the reality that the targeted site is being abused from a hundred (or a thousand!) abuse sources site (large or small).

If a site *is* particularly large, that very largeness implies a special responsibility to use some of the associated cashflow to provision an abuse staff that gets adequately scaled up along with their customer base. If a large provider has only a single abuse handler, for example, that just isn’t going to be enough to handle the constant stream of complaints they will receive (heck, even abuse handlers need to get a little sleep now and again).

So, if you wanted to normalize statistics according to customer count, one statistic which I *would* support normalizing that way is the number of operational abuse staff per 10,000 customers.

I think that would make a wonderful followup article to this piece, if you can get ISPs to agree to disclose that information.

Joe, I’m assuming you are responding to me there. I argued that malicious instances should be normalized against address space, which is what FiRE and other respected academic efforts attempt to do. This is totally different to normalizing abuse rates against customer counts.

China Backbone is an exceptional case and the only host of its kind in the world, I just didn’t think it was right to highlight it in any fashion.

No, it needs to be highlighted and recognized. In my book it is the worst offender. This is due to the sophisticated, systematic and very persistent rogue bots coming from there. All you can do is block them, which I do 100%.

Jack – sounds like you’re talking from the point of view of a webmaster/sysadmin. Certainly, from that point of view, Chinanet is one of the “worst” ASes. But when talking about where the blame lies, it is a different matter.

Excellent report.

I look forward to the next related article which would be entitled –

Naming and Shaming ‘Bad’ Registrars

A good starting point for your research would be

http://rss.uribl.com/nic which ‘ranks’ the worst already.

So by online equivalent of street gangs does that mean they murder people? Good article though.

I’m shocked. I have been working with ISPs for ten years now and I had no idea how bad ThePlanet and GoDaddy were. Although I have heard too many complaints about GoDaddy to list.

This is the best article I have read in a long time and comes at exactly the right moment. Just last week my antivirus program blocked a backdoor trojan from softpedia which my link scanners had cleared.

Your site has already been bookmarked for me to study.

You probably saved me a lot of grief because I am planning on starting a website.

My question is which hosting service would you recommend? When highly advertised sites like Host Gator are unreliable people with less experience like myself need guidance from individuals such as yourself to help us with our decisions.

Thanks again and I look forward to more of your input.

Richard

A great source for checking out hosts/registrars is http://sitevet.com/. It says it’s in beta stage at the moment so there should be lots more to come.

I’ve been following the team behind this, HostExploit, http://www.hostexploit.com/, for some time now. They publish lots of good reports, Atrivo, McColo, etc; and their latest one was on the Top 50 Bad Hosts. Brian’s blog supports their findings too, so all the better. He has blogged about them before so we know they are reliable. Let’s get these bad guys exposed.

You know you are also publishing a list that provides people who are interested in getting a reliable host too you know? It just segregates the internet into the “good” and “bad”. It doesn’t have the effect you want.

This page lists registrars (the people who sell you the domain name for your website), not hosts, but you may want to check out the registrar you plan to use to see if they are associated with a high percentage of spammed/criminal domains:

http://rss.uribl.com/nic/

The problem with reliability ratings is that the ISPs that host criminals tend to be very reliable until they are shut down completely the way Troyak was.

Good thing to know. A domain should never be shut down. It is just a name!

From a source who worked at EV1/ThePlanet, FBI agents can walk into the TP datacenter, swipe their own key card, have access granted, grab a server they want to investigate since they know the DC so well and on the way out, throw the subpoena on the desk

AboutThePlanet:

This article is not about how Law Enforcement can intrude on your rights, it’s about how BAD security is on the internet.

I think what’s going on here is Brian K is trying to help shape public policy so that some of these constant “bad actors” get seen in the light for what they are. Unfortunately, it’s all just whack-a-mole. If there is another dark spot to hide in, they’ll find it.

If your computer was bot’d and collecting financial info, I’m sure you would hope LE had something to work with other than suspecting you of the activity. A lot of these ISPs didn’t keep decent logs, which led to governmental prodding.

There should be other metrics as well. Not only from this perspective but also from customer’s perspective.

For example, SoftLayer is the very best provider i’ve used to date. The Planet is liked by a lot of people as well.

But take Leaseweb: Their customer service is absolutely terrible! They think resolution to reboot request and checking it comes online means: It’s rebooted under 8hrs (this is a crashed server), no one checks it boots up. And they think that’s excellency in customer service. Needless to say, you can forget even 3 nines uptime with Leaseweb if your server crashes more often than once in a year.

But even worse is a french provider Digicube. Deliveries are slow, all hardware when delivered is unstable and you got to figure out yourself how it works. Network has atleast 2% packet loss at best, averages 20% and peaks at over 30% packet loss. Servers within their DC cannot connect each other etc.

They sell servers with 1Gbps unlimited, but hidden in their TOS is a limit of 1Tb per week. Doesn’t sound like 1Gbps unlimited? Well, that’s not even the tip of the iceberg: you never achieve more than 300Mbps peaks, and even those are very lucky.

Better yet, another DC very close in France (100km or less), pinging it from Digicube results 90-100ms latency. Even some French people aren’t able to connect the server from France.

Opening support tickets about the network is likely to result no response, or limiting the server bandwidth even further. This despite their SLA of 99,99% for network. You can forget refunds as well, without a huge hassle.

So that is truly terrible DC. Soft Layer i’d take anytime, any day, and almost any price.

So in conclusion, i think the metrics are completely wrong here. Customer service is what *truly* matters, and customer happiness is the most important factor in the end.

SoftLayer probably gets so many abuse reports, and has more bureaucracy involved, since they are following all laws and their TOS precisely so as not to cause an unneeded lawsuit, that solving abuse issues probably takes longer than at a smaller provider which couldn’t give a rat’s a** about their customers.

You should see ShopOrNot.com and people can rate the companies there, by customer satisfaction etc.

I find these articles very interesting, as well as disturbing in the sense that they reveal the growing troubles of the Internet. My problem is understanding the structure of the ‘net, in terms of “rogue IP’s”, VPN’s, “upstream providers”, etc. Is there a source I can look at to understand this terminology and see how they all relate to each other and the ‘net as I, a simple user, can understand?

I remember when I could protect myself by carrying a little floppy disk around with me. Now I need to learn a mountain of information, use a ton of tools (most of which I’m currently unaware of) and educate myself on the possible hazards ‘out there’, where they come from, how they operate, etc.

Mind you, I do try to pass what I know on to others, most of whom simply don’t care. Until their PC goes rampant and then it’s “Ray, can you come over?”

Whenever I come across a technical term I want to understand better, I look for it in Wikipedia. This gets info faster than Google.

People who think the Internet should be cleaned up make me sick. They have no understanding of what an impossible undertaking it would be. Nor do they have a respect for free speech. You have to understand that right now the technology used to send spam is not well developed. Botnets are not generally decentralized distributed applications and have weak points that are easily attackable.

When someone says they want to clean up the Internet and has a clue as to what they are talking about, conceptually they mean they want to make it more expensive to operate a botnet. If ISPs and hosting providers worked really fast, it might be feasible to take out botnets and reduce spam slightly for a short while. The problem is that it doesn’t work long enough to be worthwhile. By a short while I mean months, not years, and it would probably take years to organize any serious worldwide initiative to shut off botnet control points.

So, to sum up: this is a disgusting waste of energy, since it can’t possibly work, and it is a danger to free speech, since it would still raise costs by putting in place a legal method for more quickly shutting down operations hosting content that MIGHT be questionable somewhere. That method can and would be abused. The internet is only dangerous to those in charge (illegal regimes, unpopular ones, etc.) and to those who aren’t competent to be on it. You can’t clean it up.

That doesn’t mean there is no solution to the problem described here. What people need to do is fix the problems sitting in front of them, not “clean up the Internet”. The problem here is really that people are running Microsoft Windows and other non-free, proprietary software. Non-free / proprietary software is riddled with bugs that can’t be fixed in a timely fashion, and/or the fixes aren’t then applied to your system in a streamlined, effortless fashion. In the free and open source world everything is integrated into a package management system, where software is streamlined and security updates occur regularly for any applications you’ve installed. Fixes are applied without much effort.

This stops the nasties on the Internet from harming you. The thing is, the nasties aren’t just coming at you from the Internet. They are also coming at you from other sources, like USB flash drives, cameras, and other devices you’ve purchased. The problem is Microsoft Windows. Not the Internet.

The problem has never been the Internet. So stop trying to clean up the Internet when the real problem is in front of you. Anti-virus is not the answer either. It is just another fraud to take your money, although one that works 1/10 of the time instead of 0/10 times like the fake anti-virus applications you may have encountered.

Start getting rid of Microsoft’s proprietary solutions and switch to open source.

Here are a few places you can get user friendly open source GNU/Linux systems:

http://thinkpenguin.com

http://commodoreusa.net

When people talk about “cleaning up the internet” they aren’t necessarily talking about ISPs having more control over what their customers can and can’t do. When I talk about “cleaning up the internet” I am talking about there being a better structure even higher than ISPs – ICANN and the regional authorities. It is not censorship we need, but better regulation.

It’s not about getting ISPs to instantly shut down domains that serve malware because, as you say, Joe, that has only a temporary effect. It is about not allowing obvious crime servers to be set up as easily as they are at the moment.

Of course you can never eradicate malicious activity on the internet, but at the moment the criminals have an exceptionally easy time.

Don’t get me started on a Windows/open-source debate – I can tell you now that if as many people used Linux as Windows, Linux would be far more vulnerable than Windows. It’s purely economics on behalf of the cybercriminals.

Pro forma, unsubstantiated dismissal of Linux.

Apple has much higher market share than Linux. Enough to draw the attention of malware writers. Yet, it suffers less predation proportionately than Microsloth.

Perhaps I know a particularly lucky sample of Apple users, but I’ve never heard a malware horror story from one. Almost every Microsloth user, present company included, has a trouble story.

It wasn’t a dismissal of Linux itself (personally I love Linux) but a statement that the Windows approach to security fixes isn’t the reason why it suffers so much from malware; the reason is purely the number of users. In fact, what is now very interesting is that Microsoft are beginning to take an active stance against internet crime, because the cost it imposes on their business has overtaken what it would cost them to do something about it. This is why they have begun significant work on a NAT system for the internet, whereby if a 3rd party deems you infected, it won’t allow you to connect to the internet and infect others.

I would have to disagree that Apple has enough of a market share to be worth the time of malware authors. See http://www.w3schools.com/browsers/browsers_os.asp – 7.1% Mac users vs 58.4% for WinXP alone. Spam, malware and phishing run on very precisely calculated economics; and reducing your target audience tenfold makes zero sense.

The only platform malware authors will soon be concentrating on other than Windows is the smartphone platform since the number of smartphone users is set to overhaul that of PC users by 2012. We’ll see how well open-source fares then, seeing as most of those will probably be on Android.

I would tend to agree on the market share audience for Mac, but there are inconvenient facts associated with that. With the popularity of the iPod, the iPhone, and the Apple music store, Apple has been forced to provide downloads for at least two serious malware variants.

Plus, you will see a new story on ZDNet every month about the latest Mac infections making the rounds via torrents and pirated media, the same thing that vexes Microsoft users. The only problem is, how are you going to mitigate spyware and such on a system that doesn’t have sub-kernel level protections available like you do with Windows systems?

You could be infected right now and not know it. It is unlikely if you did not buy one of the notebook models that recently made headlines for infected firmware built into the keyboard of a production run of Macs, and if you don’t do P2P or play with suspect malware. But if you share files with anyone who has an iPod or iPhone, who knows? Even email would be a vector in that situation.

Now of course, you wouldn’t click on anything that would give serious malware permission to take control of your computer, but what about your applications? Secunia has put out advisories on iTunes and QuickTime before, and even if those are patched in good order, some of your other applications may not be.

True total security in anything hooked to the internet or to a phone or iPod is but a dream in my not so humble opinion. Your odds are good you will not get hit, but that is all.

What you said could be vaguely true…

I believe that if “self-policing” does not occur very soon, then the various Governments will become heavily involved “in order to protect us all”. Then what you said will be tragically true! Keeping the hands of Governments off the ‘net by self-governing is the answer. I believe it is the only answer that will circumvent Governments’ intrusive (& inevitably repressive) “help”!

Your views (to me) seem short-sighted, narrowly conceived, lacking comprehension of the impact of the problem, and displaying ignorance of the existing technology (or of the capabilities of the concerned techies who CAN fix this problem). Sorry for the tactless bluntness displayed in my critique of your views. I do not intend to cast any shadow (or light) upon your personality or person in this post.

I believe we either all work together, like-minded, each doing what each can, to “fix” this “annoyance”, or we will all soon be “enjoying the features and the benefits” of having Governments step in to “protect” us!

justjoseph commenting on justjoseph’s post…

That “critique” part of my previous post was in direct reply to a post by “Joe” (apparently an unpopular one – “hidden”).

As to Mr. Krebs’ blog and other articles (and his depth of concern and knowledge), I hold only respect (perhaps awe) for the intense research leading to sharply accurate presentations of complete sets of facts. Your conclusions seem to be “spot-on”.

An excellent article Brian!!! I feel it focuses on a core problem.

I can’t tell you how much I appreciate your efforts to shine a light on security issues.

-F

PS Congrats on the award!

Russia now requires a passport, or for businesses legal registration papers, to register a .ru domain name.

http://www.computerworld.com/s/article/9173778/To_fight_scammers_Russia_cracks_down_on_.ru_domain

I wonder what would happen to spam levels if China did the same thing with .cn names.

That article cites a new Chinese law from February that requires a personal photo to register a domain name, but that is not going nearly far enough.

Allegedly 130,000 sites have been shut down for not having required information, but that is coming from Chinese state-run media.

I received a pretty interesting spam today.

It seems I won a lottery sponsored by the “Royal Bank of Scotland”. The Scots are a generous people, and that part is par at St. Andrew’s.

The interesting thing, technically, was that the email originated in ccTLD(.sg) = Singapore, and the reply address was in ccTLD(.cn) = China. I myself would not want to be caught screwing around in either one of those jurisdictions, but it does highlight the enforcement problems involved here.

For every spam server, there are lots of compromised home computers acting as proxies, so this shows only a fraction of the problem.

Your list is almost a direct reflection of the size of these companies. Which means it is useless. I am sure you realize that, but where are the percentage stats?

Ryan — you would benefit from reading some of the comments, particularly the ones left by Yours Truly, above.

To which list are you referring? The one that lists Bluehost, maybe? I really don’t know. There are plenty of small hosts on some of the lists.

In any case, these aren’t “my” lists. If they are flagging the largest hosts, then maybe that’s because that’s where the largest problems are. Then again, maybe we are measuring the wrong things? This is the very discussion that this entire post was meant to bring up. Thanks for participating.
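One way to approach the "percentage stats" question raised in this exchange is to divide each network's abuse count by its size before ranking. The sketch below is only an illustration of that idea: the two hosts and all of their numbers are hypothetical, and none of the lists discussed in the post is known to compute its rankings this way.

```python
from dataclasses import dataclass


@dataclass
class Network:
    name: str
    abuse_reports: int  # hypothetical count of outstanding abuse issues
    routed_ips: int     # hypothetical number of IP addresses the network routes


def reports_per_million_ips(net: Network) -> float:
    """Abuse reports normalized by network size."""
    return net.abuse_reports * 1_000_000 / net.routed_ips


# Made-up example data, not taken from any of the lists in the post.
networks = [
    Network("BigHost (hypothetical)", abuse_reports=5000, routed_ips=10_000_000),
    Network("SmallHost (hypothetical)", abuse_reports=400, routed_ips=50_000),
]

# Rank by the normalized rate rather than by the raw count.
ranked = sorted(networks, key=reports_per_million_ips, reverse=True)
for net in ranked:
    print(net.name, reports_per_million_ips(net))
```

With these made-up figures the large host has more reports in absolute terms, but the small host ranks worse once size is taken into account.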

Hello.

I think your initiative is right on the spot. It can become a stepping stone to start more regulation.

But this kind of regulation must be centralized, like a regulatory agency to oversee internet activity in the US. I make a reference to this, as you made a reference to the Department of Transportation and the EPA.

As you may know, the Bank Secrecy Act provides for companies that provide financial services to have a Know Your Customer program as part of their anti-money laundering activities.

Online services providers should be subject to this kind of oversight. These types of rules are already in place for other industries and can be easily applied to this industry which is growing exponentially and without proper oversight.

Enacting these types of laws requires politics to be taken into consideration, but that is the system we live in. There are multiple ways, like you mention, to protect the American citizen if other countries don’t want to cooperate… This is one suggestion to incorporate into other recommendations.

Hope it helps,

Eduardo J. Nogueras, CISA