When it’s time to book a vacation or a quick getaway, many of us turn to travel reservation sites like Expedia, Travelocity and other comparison services. But there’s a cybercrime-friendly booking service that is not well-known. When cyber crooks want to get away — with a crime — increasingly they are turning to underground online booking services that make it easy for crooks to rent hacked PCs that can help them ply their trade anonymously.

We often hear about hacked, remote-controlled PCs or “bots” being used to send spam or to host malicious Web sites, but seldom do security researchers delve into the mechanics behind one of the most basic uses for a bot: To serve as a node in an anonymization service that allows paying customers to proxy their Internet connections through one or more compromised systems.

As I noted in a Washington Post column in 2008, “this type of service is especially appealing to criminals looking to fleece bank accounts at institutions that conduct rudimentary Internet address checks to ensure that the person accessing an account is indeed logged on from the legitimate customer’s geographic region, as opposed to say, Odessa, Ukraine.” Scammers have been using proxies forever it seems, but it’s interesting that it is so easy to find victims, once you are a user of the anonymization service.
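To make the quoted point concrete, here is a minimal sketch of the kind of rudimentary address check described above. The region table, the `region_for` and `login_allowed` names, and the addresses (taken from reserved documentation ranges) are all assumptions for illustration, not any bank's actual logic. It shows why a proxy located in the victim's own region passes such a check.

```python
import ipaddress

# Hypothetical region table; real geo-location data is far larger and less exact.
REGION_BY_NETWORK = {
    ipaddress.ip_network("192.0.2.0/24"): "US-TN",
    ipaddress.ip_network("198.51.100.0/24"): "US-CA",
    ipaddress.ip_network("203.0.113.0/24"): "UA-Odessa",
}


def region_for(ip):
    """Return the region label for an address, or None if it is unknown."""
    addr = ipaddress.ip_address(ip)
    for network, region in REGION_BY_NETWORK.items():
        if addr in network:
            return region
    return None


def login_allowed(customer_region, login_ip):
    """Rudimentary check: allow only logins that appear to come from the customer's region."""
    return region_for(login_ip) == customer_region


# A crook connecting directly from abroad fails the check...
direct = login_allowed("US-TN", "203.0.113.7")
# ...but the same crook relayed through a hacked PC near the victim passes it.
proxied = login_allowed("US-TN", "192.0.2.45")
print(direct, proxied)
```

The check only ever sees the last hop, which is exactly what a rented bot in the right city provides.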


Here’s an overview of one of the more advanced anonymity networks on the market, an invite-only subscription service marketed on several key underground cyber crime forums.

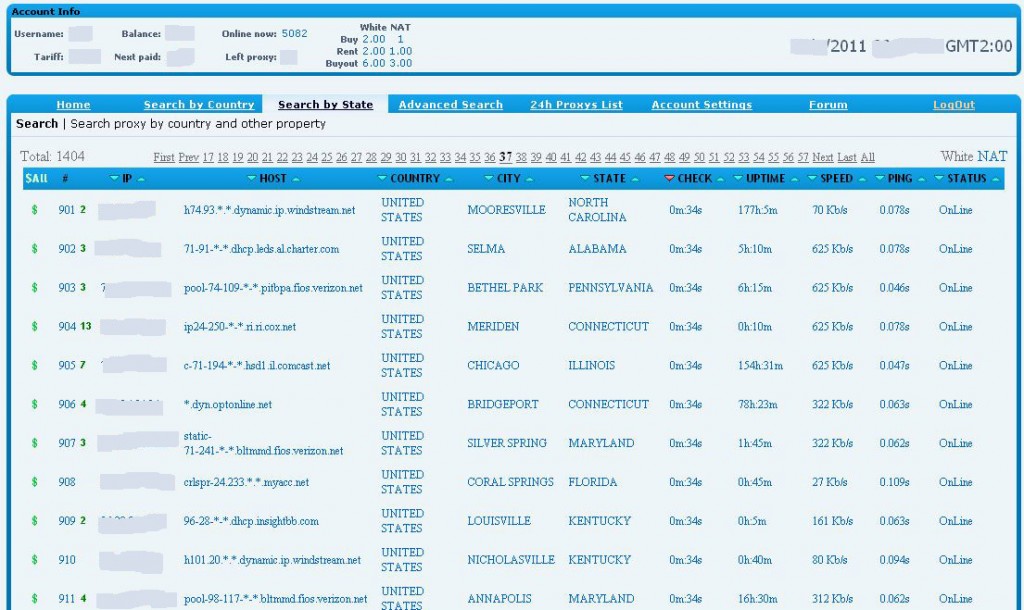

When I tested this service, it had more than 4,100 bot proxies available in 75 countries, although the bulk of the hacked PCs being sold or rented were in the United States and the United Kingdom. Also, the number of available proxies fluctuates daily, peaking during normal business hours in the United States. Drilling down into the U.S. map (see image above), users can select proxies by state, or use the “advanced search” box, which allows customers to select bots based on city, IP range, Internet provider, and connection speed. This service also includes a fairly active Russian-language customer support forum. Customers can use the service after paying a one-time $150 registration fee (security deposit?) via a virtual currency such as WebMoney or Liberty Reserve. After that, individual botted systems can be rented for about a dollar a day, or “purchased” for exclusive use for slightly more.

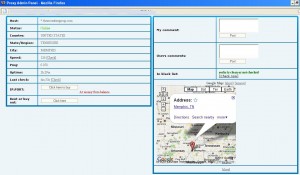

I tried to locate some owners of the hacked machines being rented via this service. Initially this presented a challenge because the majority of the proxies listed are compromised PCs hooked up to home or small business cable modem or DSL connections. As you can see from the screenshot above, the only identifying information for these systems was the IP address and host name. And although so-called “geo-location” services can plot the approximate location of an Internet address, these services are not exact and are sometimes way off.

I started poking through the listings for proxies that had meaningful host names, such as the domain name of a business. It wasn’t long before I stumbled upon the Web site for The Securities Group LLC, a Memphis, Tenn.-based privately held broker/dealer firm specializing in healthcare partnerships with physicians. According to the company’s site, “TSG has raised over $100,000,000 having syndicated over 200 healthcare projects including whole hospital exemptions, ambulatory surgery centers, surgical hospitals, PET Imaging facilities, CATH labs and a prostate cancer supplement LLC with up to 400 physician investors.” The proxy being sold by the anonymization service was tied to the Internet address of TSG’s email server, and to the Web site for the Kirby Pines Retirement Community, also in Memphis.
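For readers curious how one might sift a listing like this for “meaningful” host names, here is a rough sketch. It is not the method I used, which was simply reading through the listings by eye. The hint words, the `looks_like_business` name, and the sample host names (all under reserved example domains) are assumptions for illustration only.

```python
import re

# Hypothetical hints that a host name was auto-generated by an ISP.
ISP_HINTS = {"dsl", "adsl", "cable", "dyn", "pool", "dhcp", "broadband"}
# Four numbers joined by dots or dashes, as in an embedded IP address.
EMBEDDED_IP = re.compile(r"\d{1,3}(?:[.-]\d{1,3}){3}")


def looks_like_business(hostname):
    """Guess whether a host name points at an organization rather than a home line."""
    name = hostname.lower()
    if EMBEDDED_IP.search(name):
        return False
    # Split into labels and drop digits so "pool7" is compared as "pool".
    labels = [re.sub(r"\d+", "", label) for label in re.split(r"[.-]", name)]
    return not any(label in ISP_HINTS for label in labels)


listing = [
    "c-192-0-2-17.hsd1.tn.example.net",
    "adsl-pool7.example.org",
    "mail.examplebrokerage.example",
]
candidates = [host for host in listing if looks_like_business(host)]
print(candidates)
```

A heuristic like this will miss plenty and misfire on some names, so anything it turns up still needs to be checked by hand.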

Michelle Trammell, associate director of Kirby Pines and president of TSG, said she was unaware that her computer systems were being sold to cyber crooks when I first contacted her this week. I later heard from Steve Cunningham from ProTech Talent & Technology, an IT services firm in Memphis that was recently called in to help secure the network.


Cunningham said an anti-virus scan of the TSG and retirement community machines showed that one of the machines was hijacked by a spam bot that was removed about two weeks before I contacted him, but he said he had no idea the network was still being exploited by cyber crooks. “Some malware was found that was sending out spam,” Cunningham said. “It looks like they didn’t have a very comprehensive security system in place, but we’re going to be updating [PCs] and installing some anti-virus software on all of the servers over the next week or so.”

Other organizations whose IP addresses and host names showed up in the anonymization service include apparel chain The Limited; Santiam Memorial Hospital in Stayton, Ore.; Salem, Mass.-based North Shore Medical Center; marketing communications firm McCann-Erickson Worldwide; and the Greater Reno-Tahoe Economic Development Authority.

Anonymization services add another obstacle on the increasingly complex paths of botnets. As I have often reported, tracing botnets to their masters is difficult at best and can be a Sisyphean task. And as TSG’s experience shows, it’s far easier to keep a PC up to date with the latest security protections than it is to sanitize a computer once a bot takes over.


Nice sleuthing, as always great postings Brian.

Well, I can’t even find the posts I’m replying to in all this mess. I only know what they said because I got their comments in email, so I guess I’ll reply to them all here.

@JBV

“You just don’t get it, do you, Deborah? Ed isn’t expressing his opinion, he’s quoting Brian’s post from last September, and pointing to another of Brian’s security advisories. If you weren’t so quick to post – and had looked at the links, you would have seen this. Ed is trying to do damage control, as are many other commenters here, including me, who are concerned that some nonprofessional reader of this blog may believe that you are well-versed in the current state of computer security. You aren’t!”

What links was I supposed to look at? I didn’t see any. And if you think that any casual reader of this blog is going to go back and read through a year’s worth of posts and all their comments before they say a peep, well here’s a reality check for you. I am a good and faithful reader of things in general, and I can just barely keep up with the few threads here that I’ve posted on in the past week or so. To expect me to read and understand everything that’s gone on in the past 6 months is just plain unreasonable.

And if you think that some poor innocent might be struck blind and deaf by reading the words of an infidel, here’s another reality check for you – they won’t. People as blind, silly, and uncaring as you are so concerned about won’t wade through all this verbiage and take it seriously enough to be persuaded any one way or the other. Heck, I’ve been reading the email notices to this blog and the smattering of the few comments that post to them in the first few hours for months. And I had no idea that there was this depth of discussion going on until I took the plunge and threw my two bits into the ring.

I’m not a security professional, and it has done nothing but astonish me that such a furor has been raised because a knowledgeable, intelligent person has dared to stand up and say something in this crowd.

Honestly, if you think that calling me a moron and “disliking” me into oblivion is your best strategy to deal with me, I’m tempted to think that all of you are simply morally and intellectually bankrupt. Because I am knowledgeable, I am not stupid, and perhaps most significantly, I am not alone. Perhaps something that distinguishes me from my peers is that I have this quixotic bent to my personality that makes me say what I mean and mean what I say, and I’ll stand by it even if it seems like the whole world is against me.

@Chad

“Two motorcycle riders. One wears a helmet and one doesn’t. Just cause the one without the helmet is a good driver does not mean that he/she should recommend riding bikes without helmets because he/she likes the breeze on his/her hair.”

Oh, there is so much wrong with this little story on so many levels – where should I begin?

In the first place, there are people, and I would be one of them, who think that the times when Americans liked to feel the breeze in their hair, and knew that they’d better be good drivers or something terrible would happen, were better times, with better people, than we have and are now.

Actually, I think I’ll leave my response at that, even though I could really tear into the subject of what pampered, bullied babies Americans have become (and how falsely glorified the bullies think they are). Either you get it or you don’t.

@Nick P

“Windows 7 uses about 350MB of RAM and works pretty quick on a formerly WinXP machine. It’s not just a Vista service pack: it’s Vista done *right* and one of few versions of Windows that people loved right out the door. I’ve switched all my relatives over to a hardened Windows 7 and have fewer malware- or reliability-related tech support calls.”

I’ve said before that I’ll give Win7 a fair chance when I’m ready to. But times are hard now, in case you haven’t noticed, and rationalizing breaking free a couple hundred bucks for a new operating system that I don’t think I need is, well, tentative at best.

And I’m not one of your relatives, who needs to call someone for help whenever their computer goes gunny bag. In fact, I haven’t had a serious problem of any kind for a lot of years now, and the ones I have had in the past 10 years I’ve been more than capable of dealing with myself. And I’m no freak. There’s lots of people like me.

I do however look forward to a day when I can afford Windows 7 and take it for a test drive.

Well, if you ever thought you would persuade me of anything, you can forget that. This was an interesting little byway for me, but really all I found here was a bunch of snakes, ready and waiting to strike anyone who ventures too close.

Maybe I should have just used my initials, or lied and identified myself as a male. I keep thinking that’s what I should do in the future, but it just rubs me the wrong way. I am female, and I disagree with you.

Live with it.

And you think I would trust any baboon who calls himself a Security Professional, and believe me I will be hiring someday, after the bashing I’ve gotten on this forum?

Fat chance, suckahs.

Sure, you can all dance your Tra-la-la dance now that I’ll be gone, but you’d better believe that I now have a very bad taste in my mouth for anything smacking of “Security Professionals”.

Nice going guys. See you on the other side (of wherever that is.)

The Securities Group should give Brian a reward for the heads-up.

I’m still waiting to hear of bots being auctioned off or traded, etc. for the access the machines represent, i.e., being behind the network perimeter of a targeted organization. An attacker might specifically seek bots in small business networks for better spear-phishing opportunities, for example. Or perhaps use the bot as a foothold to gain access to private corporate networks. If they matched up the access properties to those who find them valuable, they could presumably up the profit, but they’d have to streamline the discovery of what access the bot represents. There are a lot of commoditization opportunities that the botmasters seem to have overlooked. Seems they have graduated from smash-and-grab tactics though, from what you report here.

I heard about that some years ago while conversing with a hacker.

He had been following a Russian online BBS, and he said that an individual announced that he had xxxx computers available in his botnet if anyone needed some power.

Some other individuals then responded, they “hackled” and agreed on the price and went “offline” to finalize payment and password etc.

So it is done, probably more often than not in gated communities.

The problem lies when these criminal bands start to cooperate, or syndicate themselves.

Sometimes I wish I was a farmer…. :o)

Great website BTW

“hackled” = haggled ?? Wonderfully descriptive, love it!

Reminds me of a service made available by download for repressed persons in Iran; where supposedly they could redirect the mission of several bot servers to remain anonymous in their opposition communications.

I understood followers of the Dalai Lama were using it as well.

The link is changed often so mine is obsolete.

Customers can use the service after paying a one-time $150 registration fee (security deposit?) via a virtual currency such as WebMoney or Liberty Reserve.

—————————————————

And the REAL purpose of these virtual currency brokers IS ???

So only CIA, NVD & Mossad monitor these guys ?

“And as TSG’s experience shows, it’s far easier to keep a PC up to date with the latest security protections than it is to sanitize a computer once a bot takes over.”

Perhaps. But then again, what could be easier than simply restoring your most recent known-good system image, assuming you keep your system backed up and know within a reasonably short time that your system has been made a ‘bot.

I’m not disagreeing with you, but here again it seems like there is at least a potential difference between best practices for business (and maybe mobile) computing and best practices for home computing. (And of course for people who really don’t want to think about anything. They should be geared up with all the latest and best security protections too.)

In a business computing environment, quite likely there is more than one user per machine, and none of the users are particularly paying attention to it, thus none are especially likely to notice odd behaviors. Indeed, in a work environment this would probably be considered an unproductive use of time. And in some mobile computing environments, where multiple networks are being accessed, what’s happening where and when may simply be too much to keep track of. In these types of environments I can see the wisdom of relying on the latest security protections.

But in the single user home computing environment, particularly if the goal is maximizing the usefulness of one’s computing resources, the argument can be made for substituting alertness and brain power for security protections.

I’m still fairly new to this blog, and never have been terribly familiar with the security concerns of businesses, organizations or mobile users, though I’m very much interested in learning about them. But I see a lot of people “disliking” what I have to say, and honestly, I frequently can’t figure out what it is that they “dislike”. I’ve found that the best personal security strategy for me is to forego “canned” security solutions and do-it-myself. I’m basically satisfied with the outcomes I’ve had, but would like to learn more and share experiences with others.

So if you are of the persuasion that no one should forego the latest security protections and you see me say something you disagree with, please don’t just hit the “Dislike” button. Say a few words about what you “Dislike” or disagree with and why.

What’s to dislike is the assumption that more than a very small fraction of computer users are capable of doing what you do. The canned solution is so much better than anything 99% of users – and organizations too which is a scary thought – can do on their own. The last 1% is irrelevant to any discussion of security – pwned or not, they’re not going to make a difference in the grand total.

Besides, just re-imaging when pwned is like rebuilding the house when it has been broken into. So much better to do a few things to make it harder to break in and steal whatever it is that has value. If the cost of breaking in (including risk to self) exceeds the value of the loot a rational thief will not bother. Most thieves are rational.

@grumpy: “The canned solution is so much better than anything 99% of users … can do on their own. The last 1% is irrelevant to any discussion of security”

This statistical view of security is so right that I have to wonder why it avoids naming names:

Most browsing occurs in Microsoft Windows, so, naturally, ALMOST ALL malware is designed to run ONLY in Windows. That means the “malware resistant computer” exists right now, and it is any computer which does not run Microsoft Windows online.

Sadly, “canned solutions” (to malware) have been rendered generally ineffective. Can they stop bot malware from getting in? Probably not. Can they find a hiding bot? Almost certainly not.

“Besides, just re-imaging when pwned is like rebuilding the house when it has been broken into. So much better to do a few things to make it harder to break in and steal whatever it is that has value.”

As other comments mention, recovering a clean image assumes that one CAN know when a bot is present (and that it is not in the image). There is no such test and no such tool.

But the response also assumes that a “few things” CAN be done to “make it harder to break in.” The time has long passed when significant improvement was easy:

* Anti-virus scanners cannot keep up with new malware production.

* Polymorphic malware “encrypts” its files so they cannot be found by scanners.

* “Rootkit” technology prevents the OS from showing malware files, or showing modified file contents.

* “White Hat” bots might possibly be helpful, but then you have to agree to live with an active bot.

“If the cost of breaking in (including risk to self) exceeds the value of the loot a rational thief will not bother. Most thieves are rational.”

That confuses the decision the attacker makes to take a particular distribution approach with what happens after the approach succeeds. Yes, of course, attackers will choose the most profitable approach to get their malware to run, which often will put a bot in place. But only AFTER the bot is in place can they look around to see what they have.

Most bots are not going to stumble on a pot of gold, although some will. But a bot has worth of its own, for hiding identity, for distributing spam and malware, for DDOS attacks, for distributed computation and so on. The attacker who finds no gold does not simply remove the bot and slink away to find somebody else. The bot has worth anyway, and maybe gold will appear someday.

@ grumpy

“What’s to dislike is the assumption that more than a very small fraction of computer users are capable of doing what you do. The canned solution is so much better than anything 99% of users – and organizations too which is a scary thought – can do on their own.”

Fair enough. Unfortunately there aren’t any hard statistics to tell us what the computer savvy breakdown is out there.

And it could be a matter of perspective. I’ve been in university and technical environments my entire adult life, and personally know a lot of people who can do what I do, so it might be hard for me to see outside of the box that I live in. And if you hang out on Arstechnica, Slashdot, Techdirt, the How-To Geek, Overclockers, etc., you’ll see an amazing number of people who truly understand their computers and technology to quite a profound degree. Really, I often feel like a very small fry in those crowds. (Which might be why I have the time and interest to hang out here, while they’re too busy and absorbed in their technical projects… 😉 )

So maybe we can compromise on those numbers a bit. Say, the technically capable being quite a bit more than 1%, (reaching for a tail to pin on the donkey) – ooh, maybe 10%? 15%? And a lot of those tech-jockeys are for hire, as I soon will be. Perhaps this number is still too low for you (a security professional?) to consider being significant. That I don’t know, but you’re probably safe in assuming that this technically savvy minority would have less need of professional security services and advice than the remaining majority does. And it’s also reasonable to assume that the longer we live with the newer technologies, the larger this pool of technically savvy people will grow.

“Besides, just re-imaging when pwned is like rebuilding the house when it has been broken into. So much better to do a few things to make it harder to break in and steal whatever it is that has value.”

Absolutely agreed. An ounce of prevention is worth a ton of cure, particularly if you have something of great value to steal. But one of the discussions going on is about the different forms that good prevention can take.

As to whether there is such a thing as a known-good system backup, I think that’s been addressed in later comments. Ultimately that’s up to the system owner to decide, hopefully on a solid basis of knowledge about the system’s history, and a timeline of when and how the system was attacked. If you don’t know those things and cannot risk reinfection, then a clean install on a reformatted hard drive is most likely your only good solution. But that would be the case whether you had “canned” security in place prior to the attack or not.

@DeborahS

Let’s put it this way: in 4 years of doing virus removals (usually a reformat, as I don’t know what else may be lurking in the computer), I have yet to see a fully patched computer (Windows, Adobe, Java, etc.) that was pwned. That’s not to say I might not have missed one or two, or that the owner of such a computer just hasn’t brought it in because he doesn’t know it has a problem.

@Deborah, my general dislike is that your posts seem to prescribe simplistic solutions that evidence a lack of first hand familiarity with the environments for which you prescribe. btw that’s a hot button for me, I’ve seen it too often in politics – when I was an elected local government official every time someone new joined discussions about significant problems the same thing happened, usually revisiting solutions that were already flogged to death multiple times. Good advice for newbies is to listen and learn, and remember that ’tis better to remain silent and be thought a fool than to speak and remove all doubt. Sorry to be so harsh, but you asked for it.

Specifics in your post this time: you said “what could be easier than simply restoring your most recent known-good system image, assuming you keep your system backed up and know within a reasonably short time that your system has been made a ‘bot.”

Both assumptions are false most of the time. Since only one has to be false to invalidate your suggestion it is seldom applicable.

There is another assumption that you totally missed. You implicitly assume that a regular backup can be known to be good. It can be difficult to establish when a system was first compromised. Restoring a compromised backup is worse than useless. Read the separate response I will be posting to comment on Brian’s story for more insight about that.

I could go on, but don’t want to dump on you. You are trying to understand and learn and contribute, and those are all laudable. If you would like some private email exchange, let me know and I will set up a one-shot address to establish contact.

-ISP

Infosec Pro – It’s rare to encounter someone, in real life or in cyber-ia, gifted with the ability to assertively rather than aggressively point out the errors in someone’s submission while at the same time treating that person with dignity and respect. In addition, your offer to review the subject in greater detail with Deborah offline was a generous and kind gesture. You are either the parent of teenagers, someone with a lifetime prescription for Xanax or just a truly nice person with no agenda. Maybe all three. But anyway, just thought I would say thanks for keeping it a safe zone here for the girls (or guys) who are not as knowledgeable as this group in general but want to participate. Comments like JBV’s just serve to belittle people and ultimately create a hostile environment. What can possibly be gained from using the word stupid in response to someone who is admittedly less than knowledgeable on the topic discussed?

I’ve learned quite a bit from following the discussions on this blog and find most of you intelligent, articulate and refreshingly facetious. Thanks for all the knowledge and sharing. If I do post soon, I’ll formulate it as a question and not a statement. 🙂 As a side comment, I hold a primary position in a well known hospital’s emergency dept and I always tell people I meet to be nice to everyone you cross paths with in life because I’m consistently amazed at how small a degree of separation exists between people who have little to no knowledge of one another. Most of the time, my job puts me in the position of providing a cushion-like barrier between someone going home happy and healthy or buying a first class ticket on the reaper express. The person you insulted yesterday could be the person who will be saving your life tomorrow. I think it’s better to have that person WANTING to help you rather than BEING REQUIRED to help you. While it’s true professionally the quality of care will be equal, the delivery can be noticeably different. Whether people in the medical community, or people in general, choose to acknowledge that fact, well, that’s another story entirely. The bottom line is that positive energy is a powerful force, and the same can be said for negative energy. Peace & Love. V

Good post Veronica;

I should be so bold as to be more considerate of folks. It takes a bold personality to do that. Probably the opposite opinion of most. :p

Good post, Veronica. This goes for everyone from the ER employee to the person serving or preparing your food.

You never know if the person you belittle will be preparing or handling your food, washing your shiny car, or even interacting with your children later. Being kind as a rule can have a great effect on your life.

“my general dislike is that your posts seem to prescribe simplistic solutions that evidence a lack of first hand familiarity with the environments for which you prescribe. btw that’s a hot button for me, I’ve seen it too often in politics – when I was an elected local government official every time someone new joined discussions about significant problems the same thing happened, usually revisiting solutions that were already flogged to death multiple times. ”

First of all, I’m not aware of having “prescribed” any solutions, though I believe I have proposed some topics for discussion that I haven’t seen brought up in the time that I’ve been “lurking” here.

I too have been heavily involved in local politics, plus other highly charged social situations, but perhaps I take another view. It is perhaps a jaded view of public discourse that any important topic can be “flogged to death”. Almost always, anytime a subject is reopened for discussion, new facts and perspectives can come to light that are well worth the time and trouble. Or people previously unexposed to them can be brought into the discussion. You could say that the subject of whether big government is good or bad has been “flogged to death” a thousand times, yet it continues to be valuable for us to talk about it.

Regarding the use of system backups to recover from an attack, I think the subject is being discussed in other posts. I was merely suggesting that if one has a known-good backup, restoring it is simple enough. Of course if you don’t have one or you don’t know that it’s good, the point is moot.

In addition to the great responses already I would add that this particular statement bothers me “the argument can be made for substituting alertness and brain power for security protections”

Your general approach seems to rely on noticing odd behavior created by an infection. This is seriously flawed when you’re dealing with an infection that doesn’t elicit odd behavior. If a trojan is sitting on your machine logging keystrokes and quietly sending them out to the internet how are you supposed to use alertness or brain power to detect that? I know I certainly couldn’t do that.

You also advocate forgoing the latest security protections, that is extremely poor advice to anyone. It’s akin to a soldier taking off a bullet proof vest and going into combat, thinking he can reason out where bullets will be fired and simply avoid them that way. On the internet use the security tools made available to you first, then you use common sense and alertness. Both concepts are flawed without the other.

@ helly

“Your general approach seems to rely on noticing odd behavior created by an infection. This is seriously flawed when you’re dealing with an infection that doesn’t elicit odd behavior. If a trojan is sitting on your machine logging keystrokes and quietly sending them out to the internet how are you supposed to use alertness or brain power to detect that? I know I certainly couldn’t do that.”

No, I might not be able to detect a keylogger’s behavior either, but I can stop it from sending its data out on the internet (that’s where the “brain power” part comes in), or at least be able to detect its attempts as something to look into. And that’s how I’ve caught the 3 or 4 malwares (is that a word?) I’ve been infected with in the last 8 years.

“You also advocate forgoing the latest security protections, that is extremely poor advice to anyone. It’s akin to a soldier taking off a bullet proof vest and going into combat, thinking he can reason out where bullets will be fired and simply avoid them that way. On the internet use the security tools made available to you first, then you use common sense and alertness. Both concepts are flawed without the other.”

First of all, I don’t and haven’t “advocated” foregoing the latest security protections. I’ve merely stated that it’s an option that some people successfully use.

I think the analogy of the bulletproof vest doesn’t quite hold up here. Or at least the implication that the latest security protections are equivalent to a bulletproof vest is highly suspect. There’s plenty of testament in comments on this blog that the latest security protections are unable to stop many of the bullets. And whether each and every “bullet” (malware) can mortally wound you seems a little hyperinflated to me. Nobody here is suggesting that you go out on the battlefield with no protection or precautions of any kind. What’s being discussed is what the options for protection might be.

I am impressed that you were able to discover and stop outbound traffic from your PC related to a trojan without security tools. If you wouldn’t mind sharing how you did so I would be curious?

I can certainly think of ways to do so, but I don’t know how I could communicate that to the average computer user as an ongoing recommendation. Which is also back to the basis of my response to your approach. Your recommendations, while they definitely can work, are not good for the average computer user. I spent a long time working with the general public on computer issues, and in that time I only met a handful of competent individuals who could do what you do successfully. My point is that if an average user is coming to this site looking for advice, your strategy could seriously mislead them.

My examples are usually pretty bad, agreed. But I think this one holds up. A bullet proof vest is part of a layered protection system. I agree it won’t stop every type of attack and it’s not perfect, but if it stops attacks it should be used.

The root of my argument is that you are discussing an optional security approach that works for you, that does not include using the general security tools available today. Common sense is a great way to stop threats, but it should only be one layer in a security strategy. If your approach works for you that is excellent, but don’t post it out as a fail proof recommendation for others less informed to follow.

As a personal example I run a virtual machine for all of my browsing. In 8 years I have never had an unintentional infection to my computer. I don’t advocate my approach to everyone because it really isn’t functional for everyone.

@ helly

“I am impressed that you were able to discover and stop outbound traffic from your PC related to a trojan without security tools. If you wouldn’t mind sharing how you did so I would be curious?”

My first line of defense is ZoneAlarm, v6.5. When properly configured and functioning, it will notify you whenever a process asks for internet access, and suspend the request until you click “Allow” or “Deny”. It also has an Internet Lock, which among its other uses will trap packets and data on your system so you can take a closer look at them. Essential Net Tools has a monitor of all internet-active processes and connections, and a neat right-click function to open SmartWhoIs, which allows you to look up the IP address of anyone connected to your system. And, much less often, I use CommView to take a look at packets and what exactly is in them. Of course it helps that I tested network servers at Microsoft and basically know how to read the packets, and where to find parsers, etc., but the knowledge is available for anyone who knows to look for it. Tamosoft, the makers of ENT, SmartWhoIs and CommView used to have forums where such things are discussed. I don’t know if they still do, but I’m sure other such forums exist for anyone who wants to seek them out.

Whether the malware can establish and use your internet connection is key to their success. Of course I could be wrong, or someone else could have a better answer, but a primary cornerstone of my strategy has been to guard that door.
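
The "guard that door" idea can be sketched as a default-deny outbound policy: any program without a recorded decision has its connection request held until someone answers. This is only a toy model of the behavior described above, not how ZoneAlarm or any other product is implemented; the class name, program names, and address are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class OutboundPolicy:
    """Toy default-deny outbound filter: unknown programs are held for a decision."""
    allowed: set = field(default_factory=set)
    denied: set = field(default_factory=set)
    pending: list = field(default_factory=list)

    def request(self, program: str, remote: str) -> str:
        """Return 'allow', 'deny', or 'ask' for one outbound connection attempt."""
        if program in self.allowed:
            return "allow"
        if program in self.denied:
            return "deny"
        # Unknown program: hold the request and keep a record to look into.
        self.pending.append((program, remote))
        return "ask"

    def decide(self, program: str, allow: bool) -> None:
        """Record the user's answer and clear held requests for that program."""
        (self.allowed if allow else self.denied).add(program)
        self.pending = [(p, r) for p, r in self.pending if p != program]


# Hypothetical session: a browser is trusted, an unknown program is not.
policy = OutboundPolicy(allowed={"browser.exe"})
first = policy.request("unknown_logger.exe", "203.0.113.7:443")   # held for a decision
policy.decide("unknown_logger.exe", allow=False)
second = policy.request("unknown_logger.exe", "203.0.113.7:443")  # now refused
```

Note that the sketch keys decisions on the program name alone, which is exactly the kind of identification that malware can spoof if nothing else about the process is checked.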

@DeborahS: “My first line of defense is ZoneAlarm, v6.5. When properly configured and functioning, it will notify you whenever a process asks for internet access, and suspend the request until you click “Allow” or “Deny”.”

I vividly recall hearing about these issues some years ago. At that time, malware had learned to LIE to the outgoing firewall about what process it was, and to run code in other processes, to get around being stopped.

@ Terry Ritter

“I vividly recall hearing about these issues some years ago. At that time, malware had learned to LIE to the outgoing firewall about what process it was, and to run code in other processes, to get around being stopped.”

Well, I was definitely not paying attention to discussion of security issues until relatively recently, so I missed out on that.

Either I’ve been incredibly lucky, or ZoneAlarm’s methods of identifying processes are not what the malware writers expect, or one of my other tactics prevented that particular type of malware from getting into my system.

In principle that could be done, but the success of that type of malware would depend on how well it anticipated each outbound firewall’s method of identifying and vetting processes. I do know that ZoneAlarm looks at the components of processes, and you can configure it to vet each one before allowing the primary process to access the internet. I don’t have anything terribly restrictive set up, but I still see entries in the log where ZoneAlarm has blocked a process automagically because it didn’t have the component dlls and child processes it’s supposed to have. I suppose if I was really paranoid I’d investigate every one of those log entries, but I’ve done that so many times before and it just turns out to be something innocuous. The ones I’m looking at for earlier today turn out to be the FiOS wireless router (in my new apartment – just moved here) begging for internet access by piggybacking on svchost.exe. I don’t know why it wants internet access, and it’s good to know for future reference that ZoneAlarm is blocking it, but it’s definitely not malware.

And just because there once was malware designed to lie to firewalls doesn’t mean that they always succeeded. Nor does it mean that those types of malware are still in use – I wouldn’t know. But if they are no longer being used, it could well be because they didn’t work, or they didn’t work often enough. Anybody know if this type of malware is still in use?

Deborah: There are many readers of this blog who are new to the concept of computer security, in addition to business people, and the pros who frequently post here. You are doing them all great disservice with your sweeping generalities that really are saying “Who needs all this security anyway?”

The answer is obvious – everyone needs as much security as they can have in today’s internet environment. The home users need regular updating for programs, antivirus protection, a firewall, and some basic rules for keeping protected and safe surfing. The business person needs to protect the system, its users, and environment.

Your position is as stupid as if you were on a sinking boat with room left in the lifeboats, and you were saying, “Oh, don’t bother taking me along, I can swim to shore.”

@JBV

“Deborah: There are many readers of this blog who are new to the concept of computer security, in addition to business people, and the pros who frequently post here. You are doing them all great disservice with your sweeping generalities that really are saying “Who needs all this security anyway?””

Well, well. Oversimplification is perhaps an unavoidable evil in public discussions, and in this case I have to reject your reformulation of my position. I’ve never said that security isn’t needed, I’ve only proposed that there are options to using the “canned” security measures, and reasons why one may not want to use them. I haven’t said that one should use nothing at all, but the options that I use may not, and probably aren’t, suitable for everyone.

If the assumption is that there is one and only one security strategy that’s going to work for everyone, I’m suggesting that this assumption is flawed.

DeborahS, your advice is perfectly reasonable. In fact, on my own machines, I regularly (every 6 mos or so) reinstall from known safe images.

Ignore the dislikers. In addition to fanboys of other methods, there are plenty of black hat hackers on this forum who don’t want people to view good solutions.

I agree. There is no reason for the huge number of “thumbs down” on DeborahS comments from the legitimate folks who regularly post here.

Fully erasing the hard drive and reinstalling the operating system and subsequent updates and programs is the only sure way to get rid of malware. Making a disk image after all those installations gets one a “known good” image that can be re-installed in the future to save time, unless there have been hardware changes. It’s also a good way to recover from data corruption caused by hardware failures. An intermittently failing power supply does mean things to a hard disk.

Malware can hide in any operating system, but cannot evade a full disk erasure.

But the future is not good with all the counterfeiters and other criminals active in China; counterfeit routers have already been found by the military in their systems, and eventually we will be finding motherboards and add-in cards with embedded malware or backdoors on consumer equipment. That’s one good reason for moving the manufacturing of semiconductor chips and circuit boards back to the US.

@ Tony Smit

“But the future is not good with all the counterfeiters and other criminals active in China; counterfeit routers have already been found by the military in their systems, and eventually we will be finding motherboards and add-in cards with embedded malware or backdoors on consumer equipment. That’s one good reason for moving the manufacturing of semiconductor chips and circuit boards back to the US.”

Even though I was somewhat glib earlier today about finding all the blocked requests in ZoneAlarm from my new FiOS wireless router, later I wondered if in fact it might be malware. True, I just moved here and haven’t set up my network, so the router may have some functionality that I don’t need to connect one computer to the internet. But it could also be a virus or some kind of malware embedded in the router. For now, ZoneAlarm is blocking it and I don’t understand enough about what it’s trying to do, but malware is going to be high on my list of possible explanations when I do look into it.

Completely agree that we should bring hardware manufacture back into the US, and for more reasons than just security. But so far as security goes, even hardware manufactured in the US has turned up with malware in it out-of-the-box.

@ DeborahS wrote

“But so far as security goes, even hardware manufactured in the US has turned up with malware in it out-of-the-box.”

The first virus which ever infected me was the “I love you virus.” I got it from a software vendor, right after I wrote a review praising one of their products, a few hours before warnings came out about that virus.

Good points on malware and counterfeits. But I might be able to clarify why Deborah’s posts get so many thumbs down. It’s just the things she’s said. She claims strong inside experience in the development processes of mainstream software like Microsoft’s products. Then she makes claims that seem nonsensical and that nobody with inside experience would make. The best example was her strong argument against patching and her claim that she has an old OS that she doesn’t patch and she’s better off. She said her experience showed patches are extremely damaging/risky and she’s safer never patching her systems. Most people say patching can cause damage, must be done carefully, and doing it is usually better (because being a victim is easy & worse).

It’s hard for me to hear her claims of experience and work at companies like Microsoft, then hear stuff like that where a tester should know better. So, if she makes nonsense claims, I give a thumbs down on it. Note that I usually don’t do that if I merely disagree with a point or someone seems unknowledgeable about the subject. In that case, I offer my views in hopes that we all might contribute and learn something. But, when people say they’re quality assurance professionals, then say we’re safer never patching, I have to give a humongous thumbs down.

@Nick P

You raise a number of issues that seem to be quite simple to you and quite complex to me. I’ll do my best to do justice to that in hopes that we can understand one another.

First of all, it’s been 8 years since I left Microsoft, so by no stretch of anyone’s imagination should I be thought of as a currently employed tester. I am a free agent now, and I can think whatever I want to think. I sometimes still jokingly call myself a tester, because I think that in many ways I’m a “tester” at heart, regardless of my current job title.

Likewise, “quality assurance professional” is a label you put on me, not one that I ever claimed myself. My official job title at Microsoft was “Software Test Engineer”, and even when I was a tester at Microsoft I scoffed at that job title and the label of “quality assurance professional” that some software testers arrogate to themselves. After 2 years of formal education in Electrical Engineering and 7 years in Engineering Systems at Ingersoll Rand, I think I know what both an engineer and a quality assurance professional is. And I don’t think that I, or any of my Microsoft tester colleagues could honestly claim either label. True, there was a class of testers who could probably claim the title of being engineers, but at least when I was there they were an elite minority.

As for software testers being quality assurance professionals. “Quality Assurance” is a job function that only has meaning when there is an engineering specification that is assumed to be a good design, and the QA job is to verify that the finished product conforms to the specification. That’s what you do in a factory. The engineering team designs the product, the manufacturing team produces the product according to the design, and QA verifies that it was done and to what precision.

That’s not what happens in software development, or at least that isn’t what happened at Microsoft in the years I was there and in the development teams I worked on. For one thing, the “product”, the code, is generated by the engineers, the developers, presumably to the exact degree of implementation they had in mind for it. But the testers’ function is not to verify that the product conforms to the specification. No, the testers’ function is multi-pronged, and whether the code performs according to the developer’s original specification is only one task out of several, and arguably not the most important one. Actually, it would take me a very long time to detail all the prongs in the tester function, but they all revolve around the question of “does it work?”. So at its very basis, software development is radically different from manufacturing, because the only way to verify whether the design of a general purpose operating system is a good one is to test it. Sure, Computer Science degree programs attempt to give their students, our future developers, a huge array of tools to produce good design with, but in the end the crucible is in testing.

Do I have a jaded opinion of BVTs (build verification testing), the ones who test the patches? Yup, I do. I don’t know if this is the place to air all my observations and prejudices, but yes, I’ll own up that I didn’t and don’t have much confidence in the ones that I used to work with. And I haven’t seen anything to convince me that anything substantial has changed since I was there. In fact, I’ve pretty much proved to my own satisfaction that, at least in Vista, they’re just as slap-dash, get-it-out-the-door-now as they ever were. BVTs and production testers are two different critters. Most people outside of software development don’t know what either one of them are.

However. I’ve said before on this thread and I’ll say it again. For people who just use their computers relatively lightly, and for Windows users who basically only run Microsoft applications, patching poses no big risks, and is probably a good idea if they don’t want to pay attention to security problems. Their systems will eventually need to be reinstalled and they won’t know why, but so long as that doesn’t happen too often, and they don’t get hit with a zero day, it will all be ok.

I could keep writing, but I’m beginning to wonder if all those “dislikes” that puzzle me so much are really more indicators that people don’t understand where I’m coming from than anything else. Well, I hope this fills the gap somewhat, though I’m not at all sure that it does.

I can see and understand why end users of software, particularly operating systems, want and even need to feel that it’s all completely under control and it always works right, they just have to trust it. I just don’t have that confidence. We’ve raised a few of the issues in this thread alone, particularly DLL Hell, as structural reasons why relying on patching is a bad idea. I could elaborate further, but I think I’ll leave it at that for now.

@ DeborahS on Apr 10

I appreciate your reply. I’ll consider the new information during future conversations.

Canned security is pushed because most users think that doing anything with a computer requires a great deal of skill and a high level of education. It does help make working with a computer easier. You don’t have to have an advanced degree to be smart online. Also, most users don’t want to be bothered with being safe online. All they want the computer to do is work. They don’t care how it works or why, so long as it does. On the network I run, I try to educate my users on why certain security measures are used or are now being added. If you do it in small doses they are more likely to listen. It works best if you can give them an example of why things need to be this way. Over the past year I have been making some real progress with my users. Many are starting to understand that there is no one fix for security, and that it must be layered.

We IT professionals need to do a better job communicating with the end user why security is so important and why things are this way. Too many times we get frustrated when the user does not do it our way. We must remember they are not in the trenches every day like we are. They do not know how insecure computers really are.

I have no problem with your approach, but not every user can follow it. We must do a better job educating the end user. Education is the only approach that will help reduce the risk to our networks.

@ Brian,

“… it’s far easier to keep a PC up to date with the latest security protections than it is to sanitize a computer once a bot takes over.”

On an end user machine maybe; however, patches etc. are known to make systems fail horribly, especially when mission critical.

The simple fact is unless you know it’s safe, installing any software onto many modern OS’s is a gamble.

In reality the only way to know you are not going to break something is by testing…

And this is the rub: testing takes resources and time, neither of which is generally readily available in medium size or smaller enterprises.

And time can be a real issue as zero day attacks have shown.

Many organisations cannot just take down their systems as and when a patch becomes available. They have to wait for an opportune moment (such as early Sunday morning), which leaves systems in a vulnerable state for longer.

We have seen the time between the discovery of a previously unknown exploit and it being exploited shrink from weeks to just hours, whilst the time to produce patches appears in most cases to be rising.

At some point in the near future installing the latest patch will “be too late” for a significant number of people, no matter how fast they install it. Therefore we can expect the number of botted systems to rise.

Thus we really need to consider how we “build” systems so that they can be quickly re-built from scratch and data re-loaded.

The default installs of most purchased systems unfortunately do not make this easy to do (if anything they appear to take a perverse pleasure in making it as difficult as possible).

It is way past the time the software industry should have started sorting this out. Unfortunately, like Nero, in many cases the companies are playing the fiddle and just watching to see who gets burned before them.

@Clive, I think that’s a self-serving rationalization.

The risk of patching causing problems is greatly overstated in my experience, and conversely the risk from not patching is underestimated.

The main driver is that the cost of patching is felt by the system owner, and even if there are no unforeseen side effects it takes time and effort so even problem-free patching causes problems for the owner.

On the other hand the pain of unpatched systems is felt more by others than by the system owner (who probably neither knows nor cares that his system is part of a botnet).

So how does the incentive align? It supports Clive’s argument.

@Clive Robinson: “we really need to consider how we “build” systems so that they can be quickly re-built from scratch and data re-loaded.”

Allow me to point out that a LiveDVD boot can be considered a “quick re-build,” and storing data in the clouds can minimize the need for data re-load. So we can already do what you want, just probably not in the way you want to do it. But is it even possible to do what you want in the way you want?

“It is way past the time the software industry should have started sorting this out. ”

I guess that would depend upon what “this” is. I take that to be “malware.”

Unless we want to redo the complete design for the Internet, this time including security, and also repeal the law that large, complex systems inevitably have faults, software is not the solution. The fundamental problem is our HARDWARE, which does not offer protection for a stored system. That lack supports malware infection and bots. Then, of course, the OS must change to use that protection, which will in fact impact the way users see the system. No mere software patch can do what is needed.

@ Terry,

No it’s not malware, the problem is the way we build software with all the dependencies for bits all over the system.

One aspect of this is known as “DLL Hell” (which is not just an MS problem) whereby Dynamic Link Libraries get updated and break some but not all earlier software dependent on them.

You end up with a choice between sticking with the old (possibly insecure) DLL and working software, or switching to the new DLL and having broken software.

DLL Hell is just one small aspect of this problem of software and patches / updates.
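
A minimal sketch of that trade-off, assuming a single shared library that can only be installed in one version at a time. The application names and version strings are hypothetical.

```python
def broken_apps(installed_version, requirements):
    """Return apps whose accepted versions of the shared library exclude the installed one."""
    return sorted(
        app for app, accepted in requirements.items()
        if installed_version not in accepted
    )


# Hypothetical apps, each listing the shared-library versions it works with.
requirements = {
    "legacy_accounting": {"1.0"},
    "mail_client": {"1.0", "2.0"},
    "new_browser": {"2.0"},
}

before_patch = broken_apps("1.0", requirements)  # old, possibly insecure library
after_patch = broken_apps("2.0", requirements)   # patched library
```

In this toy setup neither version leaves every application working, which is the bind described above: keep the old library and something that needs the new one breaks, or patch and break something that depended on the old one.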

Decent point, Clive, but I think that point is overused. In my experience, patching rarely disrupts the most typical applications. And lots of small to midsized enterprises just use run-of-the-mill apps like Exchange, Office, and Sharepoint. Updates rarely break such apps compared to app updating in general. So, unless they have many custom or 3rd party apps of questionable design, they should be updating by default because the risk is lower.

If they are worried, they could update a small portion of the computers first, make sure everything works with minimal testing, then do a widespread deploy.
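
The staged approach suggested above can be sketched roughly as follows. The `apply_patch` and `health_check` callables, the 10% pilot fraction, and the host names are all assumptions made for illustration; a real deployment would plug in whatever patch tooling and smoke tests the organisation already uses.

```python
import random


def staged_rollout(hosts, apply_patch, health_check, pilot_fraction=0.1, seed=0):
    """Patch a pilot group first; continue only if every pilot host stays healthy."""
    rng = random.Random(seed)
    pilot_size = min(len(hosts), max(1, int(len(hosts) * pilot_fraction)))
    pilot = rng.sample(hosts, pilot_size)

    for host in pilot:
        apply_patch(host)

    failed = [h for h in pilot if not health_check(h)]
    if failed:
        # Stop here: the remaining machines are left unpatched but working.
        return {"status": "halted", "patched": pilot, "failed": failed}

    rest = [h for h in hosts if h not in pilot]
    for host in rest:
        apply_patch(host)
    return {"status": "complete", "patched": pilot + rest, "failed": []}


# Hypothetical fleet of 20 PCs with stand-in patch and check functions.
hosts = [f"pc{i:02d}" for i in range(20)]
patch_log = []
ok_run = staged_rollout(hosts, patch_log.append, lambda h: True)
bad_run = staged_rollout(hosts, lambda h: None, lambda h: False)
```

The cost of this caution is the one raised earlier in the thread: while the pilot group is being checked, the rest of the machines stay unpatched and exposed.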

@ Nick P

“In my experience, patching rarely disrupts the most typical applications. And lots of small to midsized enterprises just use run-of-the-mill apps like Exchange, Office, and Sharepoint. Updates rarely break such apps compared to app updating in general. So, unless they have many custom or 3rd party apps of questionable design, they should be updating by default because the risk is lower.”

Again, the issue is about what environment security protection is needed for. I’m sure you’re right that in an enterprise environment, and/or one where only the standard issue Microsoft applications are used, patching may not cause any serious problems. But I’m here to tell you how much havoc they can wreak if you really use your computer with tons of 3rd party apps to do tons of different things. Those patches were never designed or tested for that kind of (ab)use, and they will break your system sooner rather than later. Just as an experiment, when I bought my last PC with Vista installed, I brought it up to date and let it automatically patch itself whenever its little heart desired, and it was irretrievably broken 2 months later. Maybe you could say that I shouldn’t be running all the software I was running on the poor thing, but heck – what are computers for if you can’t use them?

Most folks don’t seem to update their programs. It’s exploits in older programs that become vulnerabilities. Sure, some hotshot will find a way into a newer OS or newer programs, and there’s not much we can do about that until the sploit is known.

Many also DO NOT report to Microsoft any errors in their OS. This too is flawed at the user level.

I never had Vista. But I did run XP since its inception, almost 10 years. Before that Win 98, and I kept that one patched up too. My last virus was a ripper virus on Win 95. That’s going way back.

Sorry Deborah, I still have to disagree. As a user I reformat out of choice and back up my data offline. But from a company perspective this isn’t a valid option sometimes. Especially with servers up 24/7 nowadays.

I do Win7 now also. Unlike others, I’m not paranoid about Microsoft. The more reports they get, the better it will interact with the third party software I do use.

“The default installs of most purchased systems …”

The default installs of most consumer systems contain unwanted programs, called junkware, shovelware, and other unflattering names. These programs are deeply embedded into the computer and are difficult to completely remove as their uninstall routines don’t completely clean them out of the registry. Some of these have hidden executables that poll for updates and those aren’t uninstalled. It takes a lot of digging to get them out. Worse, the computer manufacturer provides a “restore disk” or “restore partition” that, when used, reinstalls all those unwanted programs, which frustrates attempts to start over with a clean system. Hard disks have huge capacities these days and the computer manufacturers should provide a “restoral partition” that provides a clean installation of only the OS and minimal device drivers.

The OS being Windows.

For future reference, there’s a new technique for getting closer to the source of the IP address:

http://www.newscientist.com/article/dn20336-internet-probe-can-track-you-down-to-within-690-metres.html

Hmm, interesting, could be applied to locating dissidents stuck behind the Great Firewall of China.

One countermeasure would be to tweak the driver to artificially delay the SYN-ACK handshake, but that would have to be done at the perimeter, and the routing info still gets you pretty close.

TOR is the obvious answer. Botnets also serve the same purpose, as Brian explained. The reported technique would locate the ‘bot not the crook, unless the ‘bot can be monitored successfully (there are countermeasures against that btw).

Tor isn’t the obvious or best answer to situations like the Great Firewall. It’s just one of them. Tor’s operations have a signature, so to speak, that makes it more obvious that someone is using Tor. Iran’s recent temporary blocking of Tor users, which relied on unusual packet parameters, is an example of that. So, if you want deniability & reliable traffic, then Tor isn’t always the best option. Regular relays or proxies are often better.

In the US, the best options are offshore proxies, hacked wifi networks, botnets and relays accessed via hacked wifi networks. (In that order). The last option provides a much higher privacy profile than Tor. That protocol is also much simpler than Tor: you can be sure that the only way you can get caught is if they track you or your computer in that physical area. With Tor, new attacks form every year. The recent BitTorrent source IP attack is a good example. Anyone wanting assurance of untraceability should avoid Tor or use Tor on top of a better scheme.

@Nick P;

True – I had a link once to software that would take over a bot-net, much the way competing criminals do to each other, to create a way for dissidents to communicate anonymously. The link is changed every so many days, so mine is trash.

But it was an interesting way to communicate! However the same innocent folks who are infected with bots were the target of police during some of the investigations against dissent in these countries.

@ David,

The system actually only provides an improvement if you are a leaf off of a branch node where the geo-location of the branch node is known (such as a Uni Campus server room).

Most network IP address to geo-location use an assumption of the distance data travels in a given time (ie round trip time).

For many reasons that can be hellishly inaccurate, not just because the propagation speed in a data cable can vary wildly with the cable type (compare say optical fiber to Cat 5 twisted pair) but also due to buffering in unknown devices such as bridges (that could actually be gateways on and off an underlying network such as globe-spanning ATM or older X.25 networks).
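
A rough worked example of the round-trip-time assumption: the most a measurement can give is an upper bound on distance, and that bound swings widely with the assumed propagation speed and with any unknown buffering delay. The velocity factor of 0.67 is an assumed typical figure for optical fiber, and the delay values are made up for illustration.

```python
SPEED_OF_LIGHT_KM_PER_MS = 299.792458  # kilometres per millisecond in vacuum


def max_distance_km(rtt_ms, velocity_factor=0.67, device_delay_ms=0.0):
    """Upper bound on one-way distance implied by a round-trip time.

    velocity_factor: assumed fraction of light speed in the cable.
    device_delay_ms: assumed total queuing/buffering delay on the path.
    """
    one_way_ms = max(0.0, (rtt_ms - device_delay_ms) / 2)
    return one_way_ms * SPEED_OF_LIGHT_KM_PER_MS * velocity_factor


# Same 20 ms measurement, two different guesses about buffering on the path.
no_buffering = max_distance_km(20.0)                          # roughly 2,000 km
heavy_buffering = max_distance_km(20.0, device_delay_ms=15.0)  # roughly 500 km
```

Since the buffering in intermediate devices is not known to the person measuring, the same 20 ms reading is consistent with targets hundreds or thousands of kilometres apart.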

Further, the likes of mobile phone companies overload IP addresses quite severely, and there might in reality be over 200 actual smart phones using the same IP address.

The same issues also apply to ADSL networks as well in many places.

The wonders of ISP-level NAT.

This is nothing. There is a popular hacking site where you can pay $5 for a lifetime subscription to the bot network. Some of these people crypt their viruses so they are undetected. And if one gets detected they renew the stub and update, so installing antivirus isn’t going to free up your computer from a smart botnet owner.

Question for the group…. should we as an industry take a pro-active stance and identify and notify these targeted companies at the ISP or organization level, or allow the bots to continue to exist for better detection, as they are known? I’ve always found this to be an interesting debate, but when does it become such a hazard that action is taken (or has it already come to that)?

It’s tempting to just want to go out and notify the ISP for each individual bot, and I think maybe if there were some legal requirement for ISPs to respond it could be effective. I’ve never had luck contacting small organizations for any security related issues, so I’m not certain that could be effective.

Given the current state of things, I think the practical answer is to let these bots continue to exist for the moment. The efforts of industry and to a greater degree law enforcement should be on identifying bot net operators and C&C servers. Shutting down those nodes (and making arrests where possible) seems more likely to make an impact. Microsoft is a good example of the gains that can be made with this approach, and for the moment it seems like the best approach for the industry too.

“…installing some anti-virus software on all of the servers over the next week or so.”

This remark horrifies me in so many ways and on so many levels. No sense of urgency, only servers are updated, and no mention of any other hardening measures, user education or prevention.

I sure hope they do more than that, b/c otherwise I wouldn’t trust Cunningham within a 10 mile radius of my systems…

True, but if Cunningham tried to do it right there is a good chance his client would look at the price tag, fire him, and hire someone to do what he described. It’s just enough to provide a good alibi or court defense; that’s the goal, not fixing the problem.

Interesting part of Brian’s story is that the network had been scanned and a spambot removed a couple of weeks earlier, and their IT consultant “had no idea the network was still being exploited”.

Obviously the consultant is sufficiently knowledgeable to pass as a professional, and yet they didn’t detect or prevent the compromise. This speaks to the difficulty of establishing a known clean baseline.

Furthermore, if they are “updating [PCs] and installing some anti-virus software on all of the servers” they may or may not eradicate the malware successfully, and they may or may not remediate whatever vector was used to infect the network originally. In other words it is pure chance whether they fix the problem and it stays fixed.

And that is using a professional IT consultant!

It is really hard to go back and do forensics on a compromised network, especially if it was compromised long ago in an unknown fashion and the attacker has had warning to cover their tracks.

The only way they can be sure they have a clean secure network is to rebuild from scratch and totally harden before introducing any user data that might have been exposed to the compromise – any bets on how practical that might be?

GekkeHenkie said it perfectly well. Problem is that Cunningham is not atypical.

@InfoSec Pro: “Obviously the consultant is sufficiently knowledgeable to pass as a professional, and yet they didn’t detect or prevent the compromise. This speaks to the difficulty of establishing a known clean baseline.”

Even taking just the comments on this blog, there seems to be a vast conventional-wisdom consensus that bots can be: 1) found, and 2) removed. Oddly, I dispute BOTH parts:

* There is no test which guarantees to find a hiding bot. There is no way to certify being bot-free.

* The only way to recover from infection is to re-install the system (or recover a known clean image), including applications.

I partly agree with you and one-upped the post accordingly, but I can’t really believe he was a very competent professional. I know what passes for an IT professional in places like Memphis. You will get plenty of people who have basic experience, certifications and know how to talk to managerial types like they know shit. Then, you will get really technical people who can root out bots. Most of the people who get contracts and jobs in big companies in Memphis are the former type, which is why I sometimes have to drive out there and clean up their messes.

There are numerous techniques for identifying bots. You have normal scans. Then, you have software like Strider Ghostbuster that can detect hiding rootkits. (I doubt he employed such an approach.) Then, there are whitelisting firms that have huge databases of known good files that should be on a system and can automatically highlight questionable files. This approach has been used with great success by a few firms.
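As a rough illustration of the whitelisting idea described above, here is a minimal sketch. It assumes the "database of known good files" is reduced to a set of SHA-256 hashes; the function names and that representation are hypothetical, not how any particular vendor does it.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Hash a file in chunks so large files do not need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def find_unlisted_files(root: Path, known_good_hashes: set[str]) -> list[Path]:
    """Return every file under root whose hash is NOT in the known-good set.

    known_good_hashes is an assumed stand-in for a whitelisting database.
    """
    return sorted(
        path
        for path in root.rglob("*")
        if path.is_file() and sha256_of(path) not in known_good_hashes
    )
```

Anything returned is merely "not on the list," not proven malicious, so a person still has to review it. The result is also only as trustworthy as the environment the scan runs in.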

In all likelihood, the “professional” just ran an AV scan from a boot disc, looked at some attributes of the system from within the corrupt system, made some obvious changes and then declared it “clean.” Removing today’s bots takes a much more thorough approach, possibly utilizing every approach I mentioned above. Hence, I have my doubts that he was really skilled at botnet removal. I mean, the Best Buy geek squad kids I know remove infections like this all the time. So, why couldn’t this guy pull off a basic job? (Occam’s Razor: Lip service skills.)

@Nick P: “There are numerous techniques for identifying bots.”

But they would seem to be more appropriately described as “wishful delusions” instead of effective bot-detection techniques:

* “You have normal scans.”

But polymorphic malware “encrypts” its files differently on each machine, making scans useless.

* “Then, you have software like Strider Ghostbuster that can detect hiding rootkits.”

The issue is not whether an occasional specific rootkit can be detected, but whether EVERY rootkit can be detected, because that is the only way to avoid having a bot. That answer is no, and it will always be no.

* “Then, there are whitelisting firms that have huge databases of known good files that should be on a system and can automatically highlight questionable files.”

I agree with the whitelisting approach, actually, but Windows presents problems:

First, all such file comparisons need to be done outside the OS, since the OS itself may have been subverted. In general, that means by LiveCD, because even code which directly implements the file system may not be allowed to load correctly.

Next, Windows goes out of its way to change essential files, like the Registry, which means whitelisting cannot help. Can whitelisting scan the Registry to assure that no malware is being started? I do not think so.

Last, but not least, Windows has traditionally included a range of apps which seemingly could have no security implications at all. Consider the video and music players, and the word processor, all of which have in fact been used to start and run attack code. Or consider .PDF files. Can whitelisting scan the data files for those apps to show a lack of infection? I do not think so. The issue is not what we have found, since that should have been fixed, but what remains for malware to exploit.

In summary, let me repeat, and louder this time, so people can hear me:

THERE EXISTS NO TOOL WHICH CAN GUARANTEE THAT A BOT IS NOT PRESENT.

THE ONLY CORRECT RESPONSE TO BOT SUSPICION IS A FULL OS INSTALL WITH APPS (or the recovery of an uninfected image).

BOOTING A LIVECD IS ALMOST AS GOOD AS A FULL OS INSTALL BEFORE EVERY SESSION.

I think wishful delusions is an unfair term. I’d call them “techniques for identifying rootkits.” A given technique may or may not work on a given rootkit. You’re certainly right in saying that there’s no tool that will guarantee that a machine isn’t subverted (well, at least on x86 & Windows ;). But when’s the last time users, admins or even security gurus paid for near-perfect assurance? The market demands solutions which work well most of the time. So, that’s what I’m focusing on here, because the repair guys I know rarely fail during a removal and offer money back or a free service call for restoring to a clean slate. That’s good enough for most users.

So, on to the specifics. A normal, signature-based AV scan will certainly fail to catch polymorphic malware. A whitelisting scan on a LiveCD that compares what’s on the system to a database of known good files will usually find things that aren’t supposed to be there. A technology like Strider compares what things look like on the inside with what a LiveCD sees to identify discrepancies and the presence of a rootkit. Properly configured trusted boot and disabling VT in the BIOS can prevent other kinds of attacks.
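The inside-versus-outside comparison can be sketched in a few lines. This is only the general "cross-view diff" idea, not Strider GhostBuster's actual implementation. The two listings are assumed to have been collected separately (one from the running OS, one from clean boot media), and the paths shown are made up for illustration.

```python
def cross_view_diff(inside_view, outside_view):
    """Compare a file listing taken inside the running OS with one taken
    from trusted boot media, and report the discrepancies."""
    inside = set(inside_view)
    outside = set(outside_view)
    return {
        # Present on disk but invisible to the running OS: a classic rootkit sign.
        "hidden_from_inside": sorted(outside - inside),
        # Reported by the running OS but absent from the outside listing.
        "only_seen_inside": sorted(inside - outside),
    }


# Hypothetical listings, for illustration only.
seen_by_running_os = [
    "C:/Windows/system32/kernel32.dll",
    "C:/Windows/system32/ntdll.dll",
]
seen_from_livecd = seen_by_running_os + ["C:/Windows/system32/drivers/hidden.sys"]

print(cross_view_diff(seen_by_running_os, seen_from_livecd))
```

In practice some discrepancies are benign (files created or deleted between the two scans), so a non-empty result is a lead to investigate rather than a verdict.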

Many infections are also not quite as sophisticated and effective as a professional grade Zeus bot and delivery kit. The best make the news regularly, but many run-of-the-mill infections are cannon fodder. I’ve been able to clean quite a few infections without leaving the corrupted system because they weren’t very resilient or covert. The most sophisticated threats require a clean install, as I wouldn’t trust any removal techniques on them (or clean install is actually quicker than painstaking tracking + removal).

So, I agree that there’s no tool that can guarantee a clean PC, and that an up-to-date LiveCD (or other read-only boot media) is ideal for solving persistent malware issues. However, there are quite a few cases where taking down a system totally, reformatting, reinstalling and updating are extremely laborious and otherwise costly. An hour of probing, identifying and removing a threat we know we can handle is a better use of the customers’ time. If we are unsure, then we take more costly steps. I do prefer the clean slate method, but I mention the alternative because I’ve been in so many situations where clean slate wasn’t an allowed option.

@ Terry,

Yes, it can be shown to the level of a proof that a system cannot detect whether it is infected by malware or not.

However, the practical question is what percentage of malware can be found, and how.

First off, you need to divide malware up into groups. First is the obvious “Known / Unknown” grouping, which is the zero-day issue. Second is the issue of “Stored / Not Stored” between reboots. And yes, there are further subdivisions.

The point is over 90% of known malware that can run on a system can be caught by running software on the active system, even if it is already “infected”.

Catching and removing this malware alone would make significant inroads into the issue. Which I think is one of the points Nick P is making, likewise Brian and several others.

Further, at least 90% of the remaining known malware (i.e. rootkits and the like) can be found by rebooting the system into a different OS that then scans the semi-mutable memory (i.e. HDs and other storage media attached).

You are effectively detecting over 99% of the known malware that can be stored on a system, without doing anything overly complex.
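The arithmetic behind that 99% figure is worth spelling out. The sketch below takes the two 90% estimates above at face value and assumes the second stage catches 90% of whatever the first stage missed; the function name is just for illustration.

```python
def combined_detection_rate(stage_rates):
    """Overall detection rate when each stage only sees what earlier stages missed."""
    missed = 1.0
    for rate in stage_rates:
        missed *= 1.0 - rate  # fraction still undetected after this stage
    return 1.0 - missed


# Stage 1: scan on the live system (90%).
# Stage 2: scan from a different OS, catching 90% of the remaining 10%.
overall = combined_detection_rate([0.90, 0.90])
print(f"{overall:.0%}")  # prints "99%"
```

In other words, 90% plus 90% of the remaining 10% (another 9%) gives 99%, leaving about 1 in 100 known samples undetected under these assumptions.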

However, some systems cannot just be stopped and rebooted because they are considered “mission critical”. But there is a way around this, in that the same effect as the second method can be achieved by running systems in a virtualized manner. That is, the base OS is designed as a hypervisor to effectively run the “commodity OS” in a “sandbox” and can thus monitor semi-mutable memory and communications paths during normal operation. This is a method which I know Nick P has made reference to in the past.

Effectively, what you are doing is checking for where the malware code is stored for the time when the system is not active.

Whilst not all malware does this, a large amount of malware currently makes the assumption that it needs to be able to survive a reboot on a standalone system, and modifying semi-mutable memory is the only way it can do this.

So the second method also allows you to pick up anomalies in the system where files have changed that should not have. Thus, effectively, you are seeing the footprints of anomalous system operation, which may indicate there is malware on the system that is not currently known.

Thus the second method is not just reactive to known malware but also sensitive to unknown malware.

There are various other techniques that can be used to check other semi-mutable memory on the system (i.e. not just that in the Flash ROM of the BIOS and I/O cards but also inside the CPU etc).

Further, there are also techniques which will pick up anomalous behaviour in the fully mutable memory (i.e. RAM) that you would expect from the likes of network worms etc, which don’t write code to the semi-mutable memory on a system.

However all of these extended techniques tend to be difficult to implement and the reason for this is primarily to do with the way we design and build software including the OS.

It is another reason why I said earlier it is well past time we started sorting this issue out.

Because sorting out malware is a multistage process and stage one is detection. So if your OS and software is designed so that malware detection is not just difficult but extraordinarily difficult you have to stop and ask if this is the way we should go…

The categorization you mentioned is a key point here. Many people look at rootkits and malware like the system is totally a black box that doesn’t leak useful information and the malware authors are so good at stego they could get honorary Ph.D’s on the subject. In most cases, this couldn’t be further from the truth.

Most rootkits use well-known techniques to hide their presence. They *must* hook into or emulate certain APIs or subsystems to achieve the proper level of control or invisibility. And these subsystems do have consistent patterns of behavior that are disturbed by such hooks. That’s one avenue of detection.

The code must also have a method of executing. Overflows, low level programming attacks, and poor permissions are common ways this occurs. This can be beaten with restricted execution setups, OSes with a reverse stack (e.g. SourceT), or higher level languages for application building. Para-virtualization or MAC help here too. Give the rootkit no way to run and it’s suddenly not an issue.

Rootkits have to maintain their code somewhere to be persistent. Many important system files don’t change except during updates. A “known-good” analysis or a Tripwire-style differential analysis of critical system files can often detect rootkits. A good backup and rollback strategy can undo such changes if an unauthorized modification is detected.
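A Tripwire-style differential check boils down to "record a baseline, then diff against it later." The sketch below shows only that idea; it is not Tripwire's actual format or policy language, and the function names are hypothetical. It assumes the baseline is stored somewhere the malware cannot rewrite it, and that the later snapshot is taken from a trusted environment.

```python
import hashlib
from pathlib import Path


def snapshot(root: Path) -> dict[str, str]:
    """Map each file's path (relative to root) to the SHA-256 of its contents."""
    return {
        str(path.relative_to(root)): hashlib.sha256(path.read_bytes()).hexdigest()
        for path in sorted(root.rglob("*"))
        if path.is_file()
    }


def compare_snapshots(baseline: dict[str, str], current: dict[str, str]) -> dict[str, list[str]]:
    """Report files added, removed, or modified since the baseline was taken."""
    return {
        "added": sorted(set(current) - set(baseline)),
        "removed": sorted(set(baseline) - set(current)),
        "modified": sorted(
            name
            for name in baseline.keys() & current.keys()
            if baseline[name] != current[name]
        ),
    }
```

Typical use would be to call `snapshot()` right after a clean install or a legitimate update, keep the result offline, and run `compare_snapshots()` on a schedule. Any unexpected entry is the trigger for the rollback mentioned above.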

Then, of course, there’s the virtualization Clive mentioned. This is one of my primary methods for dealing with malware because there’s hardly any malware out there designed to outright defeat virtualization solutions. A properly isolated VM will restrict the malware from the start, can be analyzed freely by outside software (including volatile state), and can be rolled back to a clean state at the first sign of problems.

With most malware, subversion is pretty easy to deal with. It’s those subversive “people” that one must worry about. 😉

@ Clive

“…sorting out malware is a multistage process and stage one is detection. So if your OS and software is designed so that malware detection is not just difficult but extraordinarily difficult you have to stop and ask if this is the way we should go…”

I-am-not-a-developer, but I have worked closely with OS developers and tested OS code, so I have at least a passing familiarity with the subject you’re discussing. And it seems fair to say that the next generation of OSes (or a generation soon to come) needs to address several of the points you’ve raised. Not just for malware detection and management, but for overall system functionality, stability, maintenance over time, and performance.

Our current OS paradigm is essentially one of a central kernel with DLLs, drivers and executables layered on top of it. (That’s a very loose way of putting it, but sufficient for this discussion.) As I understand it, this design keeps the most basic system operations in the kernel as simple and as abstract as is minimally possible, to maximize efficiency of communications with the CPU and all the hardware on the system. Then the vastly more detailed implementation of specific functions is farmed out to the DLLs, drivers and executables.

This fundamental architecture is what produces not only the DLL Hell you mentioned previously, but also the difficulties in scanning for malware that you discuss in this post. In my experience, everyone who is aware of these problems would like to see them solved, but so far no one has had the brilliant flash of insight that would lead to a solution.