An attack late last week that compromised the personal and business Gmail accounts of Matthew Prince, chief executive of Web content delivery system CloudFlare, revealed a subtle but dangerous security flaw in the 2-factor authentication process used in Google Apps for business customers. Google has since fixed the glitch, but the incident offers a timely reminder that two-factor authentication schemes are only as secure as their weakest component.

In a blog post on Friday, Prince wrote about a complicated attack in which miscreants were able to access a customer’s account on CloudFlare and change the customer’s DNS records. The attack succeeded, Prince said, in part because the perpetrators exploited a weakness in Google’s account recovery process to hijack his CloudFlare.com email address, which runs on Google Apps.

A Google spokesperson confirmed that the company “fixed a flaw that, under very specific conditions, existed in the account recovery process for Google Apps for Business customers.”

“If an administrator account that was configured to send password reset instructions to a registered secondary email address was successfully recovered, 2-step verification would have been disabled in the process,” the company said. “This could have led to abuse if their secondary email account was compromised through some other means. We resolved the issue last week to prevent further abuse.”
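To make the flaw Google describes concrete, here is a hypothetical sketch of an account-recovery routine. The `Account` class and both functions are invented for illustration and do not reflect Google's actual code. The point is the difference between a recovery path that silently switches off 2-step verification and one that leaves it in place.

```python
from dataclasses import dataclass


@dataclass
class Account:
    """Hypothetical admin account record (illustration only)."""
    name: str
    password: str
    two_step_enabled: bool
    secondary_email: str


def recover_flawed(acct: Account, new_password: str) -> Account:
    # Models the reported bug: a successful reset via the secondary
    # email address also turned off 2-step verification.
    acct.password = new_password
    acct.two_step_enabled = False
    return acct


def recover_fixed(acct: Account, new_password: str) -> Account:
    # Models the fix: the reset changes only the password, so whoever
    # performed it still has to pass the second factor at sign-in.
    acct.password = new_password
    return acct


if __name__ == "__main__":
    a = recover_flawed(Account("admin", "old", True, "me@gmail.com"), "attacker-pw")
    b = recover_fixed(Account("admin", "old", True, "me@gmail.com"), "attacker-pw")
    print(a.two_step_enabled, b.two_step_enabled)  # False True
```

In the flawed version, anyone who controls the secondary mailbox ends up with a password-only account, which is exactly the "abuse" Google's statement warns about.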

Prince acknowledged that the attackers also leveraged the fact that his recovery email address — his personal Gmail account — was not taking advantage of Google’s free 2-factor authentication offering. Prince claims that the final stage of the attack succeeded because the miscreants were able to trick his mobile phone provider — AT&T — into forwarding his voicemail to another account.

In a phone interview Monday, Prince said he received a phone call at 11:39 a.m. on Friday from a phone number in Chico, Calif. Not knowing anyone from that area, he let the call go to voicemail. Two minutes later, he received a voicemail that was a recorded message from Google saying that his personal Gmail account password had been changed. Prince said he then initiated the account recovery process himself and changed his password back, and that he and the hacker(s) continued to ping-pong for control of the Gmail account, trading control 10 times in 15 minutes.

“The calls were being forwarded, because phone calls still came to me,” Prince said. “I didn’t realize my voicemail had been compromised until that evening when someone called me and soon after got a text message saying, ‘Hey, something is weird with your voicemail.'”

Gmail constantly nags users to tie a mobile phone number to their account, ostensibly so that those who forget their passwords or get locked out can have an automated, out-of-band way to receive a password reset code (Google also gets another way to link real-life identities connected to cell phone records with Gmail accounts that may not be so obviously tied to a specific identity). The default method of sending a reset code is via text message, but users can also choose to receive the code via a phone call from Google.

The trouble is, Gmail users who haven’t availed themselves of Google’s 2-factor authentication offering (Google calls it “2-step verification”) are most likely at the mercy of the security of their mobile provider. For example, AT&T users who have not assigned a PIN to their voicemail accounts are vulnerable to outsiders listening to their voice messages, simply by spoofing the caller ID so that it matches the target’s own phone number. Prince said his AT&T PIN was a completely random 24-digit combination (and here I thought I was paranoid with a 12-digit PIN).
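Prince's 24-digit PIN and the 12-digit one mentioned above can be compared by the number of possibilities an attacker would have to guess from. Assuming every digit is chosen uniformly at random (which Prince states for his PIN, and which is only an assumption for any other), a PIN of d digits gives:

```latex
\begin{aligned}
N &= 10^{d}, \qquad H = \log_2 N = d \log_2 10 \approx 3.32\,d \ \text{bits} \\
d = 24 &: \quad N = 10^{24}, \quad H \approx 79.7 \ \text{bits} \\
d = 12 &: \quad N = 10^{12}, \quad H \approx 39.9 \ \text{bits}
\end{aligned}
```

Either figure is far beyond guessing over the phone, which is why the weak point in this case turned out to be the carrier's support process rather than the PIN itself.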

“Working with Google we believe we have discovered the vulnerability that allowed the hacker to access my personal Gmail account, which was what began the chain of events,” Prince wrote in an update to the blog post about the attack. “It appears to have involved a breach of AT&T’s systems that compromised the out-of-band verification. The upshot is that if an attacker knows your phone number and your phone number is listed as a possible recovery method for your Google account then, at best, your Google account may only be as secure as your voicemail PIN.”
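A minimal sketch of the out-of-band reset flow described above shows why the account is only as strong as the phone channel. Everything here is hypothetical: `issue_reset_code`, `deliver_by_voice`, the forwarding table and the (fictional 555-01xx) phone numbers are invented for illustration, and neither Google nor any carrier exposes an interface like this. The code is a random 6-digit number with an assumed five-minute expiry, and whoever ends up holding the voicemail can read it back.

```python
from __future__ import annotations

import secrets
import time

RESET_TTL_SECONDS = 300  # assumed lifetime of a reset code (illustrative)


def issue_reset_code() -> tuple[str, float]:
    """Generate a random 6-digit reset code and its expiry timestamp."""
    code = f"{secrets.randbelow(10**6):06d}"
    return code, time.time() + RESET_TTL_SECONDS


def deliver_by_voice(number: str, code: str, forwarding: dict[str, str],
                     mailboxes: dict[str, list[str]]) -> None:
    """Leave the code as a voicemail; the carrier honors any forwarding rule."""
    destination = forwarding.get(number, number)
    mailboxes.setdefault(destination, []).append(code)


def verify(submitted: str, code: str, expires_at: float) -> bool:
    """Accept the code only if it matches (constant-time) and has not expired."""
    return time.time() < expires_at and secrets.compare_digest(
        submitted.encode(), code.encode())


if __name__ == "__main__":
    mailboxes = {}
    # Hypothetical: a social-engineered carrier forwards the victim's calls.
    forwarding = {"+1-555-0100": "+1-555-0199"}
    code, expires = issue_reset_code()
    deliver_by_voice("+1-555-0100", code, forwarding, mailboxes)
    stolen = mailboxes["+1-555-0199"][0]  # the attacker's mailbox got the code
    print(verify(stolen, code, expires))  # True: the attacker passes the check
```

Nothing in `verify` can tell the legitimate owner from whoever controls the mailbox, which is the point of Prince's "only as secure as your voicemail PIN" remark.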

AT&T officials did not respond to requests for comment.

Beyond such vulnerabilities, customer support personnel at mobile phone providers can be tricked into forwarding voicemails to other numbers. And this is exactly what Prince claims happened in his case.

“In this case, we believe AT&T was compromised, potentially through social engineering of their support staff, allowing the hacker to bypass even the security of the PIN,” Prince wrote. “We have removed all phone numbers as authorized Google account recovery methods. We are following up with AT&T to get more details.”

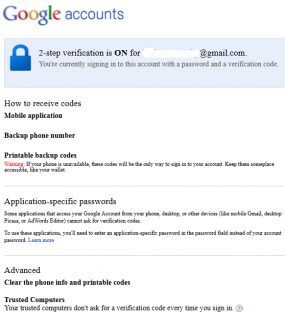

If you use Gmail and haven’t yet taken advantage of their 2-step verification offering, I’d strongly encourage you to take a moment today and do that. But even if you already use 2-step verification, you may want to review other security options. For example, you probably have supplied Google with a backup email address. If that backup email is also a Gmail account, is that account protected with 2-step verification? If not, why not?

Also, consider whether you want your 2-step verification codes sent to a mobile device that resides on a network whose customer service personnel could be vulnerable to social engineering attacks. Alternatives include sending text messages or automated voice calls to a Skype line, or taking the mobile network out of the picture entirely: Google has a mobile app for Android, BlackBerry and iPhone called Google Authenticator. It works much like a token or key fob, in that it can generate a new code whether or not your phone has access to the mobile network or the Internet.
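Google Authenticator can work offline because it generates codes with the time-based one-time password (TOTP) algorithm standardized in RFC 6238: the phone and the server share a secret and a clock, so nothing has to be sent to the phone. A minimal Python sketch, assuming the common defaults (HMAC-SHA1, 30-second time step, 6 digits, base32-encoded secret); it is an illustration of the standard, not Google's code.

```python
import base64
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(key: bytes, counter: int, digits: int = 6) -> str:
    """HOTP (RFC 4226): HMAC-SHA1 of an 8-byte big-endian counter, dynamically truncated."""
    mac = hmac.new(key, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # low 4 bits of the last byte pick a 4-byte window
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10**digits).zfill(digits)


def totp(secret_b32: str, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """TOTP (RFC 6238): HOTP whose counter is the number of `step`-second intervals since the epoch."""
    cleaned = secret_b32.replace(" ", "").upper()
    key = base64.b32decode(cleaned + "=" * (-len(cleaned) % 8))  # restore base32 padding
    now = time.time() if at is None else at
    return hotp(key, int(now // step), digits)


if __name__ == "__main__":
    # Hypothetical secret; a real one comes from the QR code shown during setup.
    secret = base64.b32encode(b"12345678901234567890").decode()
    print(totp(secret))  # changes every 30 seconds
```

Because the code depends only on the shared secret and the time, redirecting calls or texts at the carrier gives an attacker nothing to intercept.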

Does the CEO really need access to operational systems, like customer configs and DNS?

It’s still a very small company. The CEO probably does anything that needs doing.

Fair enough, although regardless of whether it's the CEO or a junior admin, I think sensitive changes to customer settings made by CloudFlare staff should require two people under a four-eyes principle/SoD. Or just have customers make these changes self-service and offload the risk completely.
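The separation-of-duties idea in the comment above can be sketched as a change request that is applied only after someone other than the requester approves it. The `ChangeRequest` class, the `ApprovalError` exception and the DNS record fields are hypothetical, not CloudFlare's actual tooling.

```python
from dataclasses import dataclass
from typing import Optional


class ApprovalError(Exception):
    """Raised when a change would bypass the two-person rule."""


@dataclass
class ChangeRequest:
    requester: str
    zone: str
    record: str
    new_value: str
    approver: Optional[str] = None
    applied: bool = False

    def approve(self, approver: str) -> None:
        # Separation of duties: nobody may approve their own change.
        if approver == self.requester:
            raise ApprovalError("requester cannot approve their own change")
        self.approver = approver

    def apply(self, dns: dict) -> None:
        # Refuse to touch customer DNS until a second person has signed off.
        if self.approver is None:
            raise ApprovalError("change has not been approved")
        dns[(self.zone, self.record)] = self.new_value
        self.applied = True


if __name__ == "__main__":
    dns = {}
    req = ChangeRequest("alice", "example.com", "www", "192.0.2.10")
    try:
        req.approve("alice")
    except ApprovalError as e:
        print("blocked:", e)
    req.approve("bob")
    req.apply(dns)
    print(dns)  # {('example.com', 'www'): '192.0.2.10'}
```

With a rule like this, a single hijacked account (even the CEO's) can request a DNS change but cannot push it through alone.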

Otherwise you might be betting the ranch without even knowing it.

The folks at Cloudflare could answer it better than I could. It’s not a web hosting company. You continue to host your site normally, but instead of using your host’s nameservers, you use Cloudflare’s. As I understand it, it distributes the DNS function over a large number of servers, so the page loads are faster and it’s harder to bring a site down (due to DDoSing or slashdotting), as long as people are requesting a website using its URL. They also cache pages that have been recently viewed, which reduces load on your host’s server, and they route traffic from IP addresses on Project Honey Pot’s blacklist through a challenge screen. When a particular site has a severe peak in traffic, they also take actions to mitigate the load further.

Anyway, it’s entirely possible that having access to DNS settings is intrinsic to how they provide their service. I don’t know the details that well.

Excellent information and emergency response handling by CloudFlare!

This is proof that if they want it enough, they will get it. But your advice “If you use Gmail and haven’t yet taken advantage of their 2-step verification offering, I’d strongly encourage you to take a moment today and do that” is absolutely the truth. We can see that 2FA made them work hard to get what they wanted. And that is what we want… we don’t want to make it easy for them by just handing it to them. Imagine how easy it would have been if he had not activated 2FA! And you are not going to find a more secure and easier user experience anywhere. So activating two-factor authentication, where you sign in to your account by entering a one-time PIN code, is worth the time it takes to set up, and it gives you confidence that your account won’t get hacked and your personal information isn’t up for grabs.

This seems like an extremely involved attack.

Target: A customer of Cloudflare’s.

Route:

Compromise Cloudflare employee (in this case, CEO)

– Compromise his e-mail (Exploit a flaw in Google’s Gmail)

– Compromise his voicemail (Given that he had a PIN, another exploit? Or already a victim of a targeted attack?)

And this was done to get at a Cloudflare customer. Either it was a really high value target, or they didn’t mind throwing away all that work because they’ve gotten other reimbursement out of it.

The term is “HVT”, short for “high value target”.

If the transient compromise of the CloudFlare customer was leveraged to establish an advanced persistent presence on some target network, it was worth it.

My considered professional opinion is that the ultimate target was not in fact the CloudFlare customer but rather some victim who could be vulnerable to exploitation through the CF customer compromise.

Stop thinking in ankle-biter concepts; the first cyberwar started a while ago. The prize is economic control of the world. On those terms this falls somewhat below Flame or Zeus. No big deal.

Two-factor authentication (2FA) is, at this point, a prerequisite to any system that wants to promote itself as being secure. However, 2FA systems can be violated if not properly designed.

The flaw in many 2FA systems is that the information – whether by voice or SMS – to validate an account is frequently sent *to* the user’s mobile number. This is a fundamental error because of the very problems illustrated in the article (e.g., calls being forwarded) and the possibility of the mobile having been cloned.

A 2FA system that reverses the process is inherently more secure than any other system available. A system designed so that the verification code is shown in open text on the web page *instead* of a field into which data (the code) can be entered is a better design. The code displayed must then be sent by the mobile that is associated with that account for verification and ONLY if that mobile (not a clone or a spoofed number – neither will work) sends the code is verification completed.

2FA systems aren’t perfect but utilizing the unique device ID of a mobile by reversing the process as described here, combined with a code and a PIN, reduces the likelihood of intrusion to an infinitesimal level.
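The reversed (mobile-originated) flow described in this comment can be sketched as follows: the server displays a one-time code on the page and marks the login verified only when that code arrives by SMS from the number registered to the account. This is a hypothetical illustration: `MOVerifier`, `start_login` and `handle_inbound_sms` are invented names, the two-minute validity window is an assumption, it trusts whatever sender number the SMS gateway reports, and it does not model the device-identifier check the commenter attributes to their system.

```python
import secrets
import time
from typing import Dict, Optional, Set, Tuple

CODE_TTL_SECONDS = 120  # assumed validity window for a displayed code


class MOVerifier:
    def __init__(self, registered: Dict[str, str]):
        self.registered = registered  # account -> registered mobile number
        self.pending: Dict[str, Tuple[str, float]] = {}  # account -> (code, expiry)
        self.verified: Set[str] = set()

    def start_login(self, account: str) -> str:
        """Create a code to show in plain text on the login page."""
        code = f"{secrets.randbelow(10**6):06d}"
        self.pending[account] = (code, time.time() + CODE_TTL_SECONDS)
        return code

    def handle_inbound_sms(self, sender: str, body: str) -> Optional[str]:
        """Handle one inbound SMS; return the account it verified, if any."""
        for account, (code, expires) in list(self.pending.items()):
            if (sender == self.registered.get(account)
                    and secrets.compare_digest(body.strip().encode(), code.encode())
                    and time.time() < expires):
                del self.pending[account]  # each code works only once
                self.verified.add(account)
                return account
        return None


if __name__ == "__main__":
    v = MOVerifier({"alice": "+1-555-0100"})
    shown = v.start_login("alice")
    print(v.handle_inbound_sms("+1-555-0199", shown))  # None: wrong sender
    print(v.handle_inbound_sms("+1-555-0100", shown))  # alice
```

Unlike the mobile-terminated flow, nothing secret is ever delivered to the phone, so forwarding the victim's calls or texts does not hand the attacker a code.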

As far as I’m aware (and I could be wrong, as I don’t follow the field closely), mobile cloning is impossible in many countries, or at least there is no evidence that it has been successfully accomplished on newer GSM and so-called “4G” networks.

It wasn’t always the case. Before some iteration of the GSM standard enabled strong encryption, SIMs could be cloned.

And countries that are on the USA encryption technology embargo list still suffer from cloning because they do not receive the newer GSM technology.

While old fashioned cell phone cloning isn’t possible, some GSM networks support it as a customer feature at the network level. I had two SIMs on Elisa Finland through 2011 when I left. We (Nokia) used the service to enable employees to have two phones (shipping and alpha) which would receive phone calls at the same number and have the same caller ID. Technically the second subscription had its own phone number, but the network sent the primary number for caller ID and all inbound calls for the primary subscription would ring to both, and SMSs would be delivered to both.

The service is fairly important to people developing and testing phones (both vendors and carriers), so I wouldn’t be surprised if many or even most carriers supported it.

Remember that while you may not subscribe to a feature, if it’s available and an attacker can socially engineer an attack on your carrier, the feature could be added to your account, used, and discarded with you only noticing when you get the bill.

I don’t expect such an attack to be common; it only makes sense for an HVT, and I’d hope the carriers wouldn’t be susceptible to it, but...

This “feature” is certainly available, but it does not affect the 2FA method that I described because the cloning occurs at the SIM level, not the UDID level.

UDIDs are embedded into each piece of wireless hardware made worldwide. Despite any cloned or spoofed SIM, or even a network feature, when a text is sent *from* a mobile device it carries the UDID of that device. Unless the original device (associated with the account by its mobile number combined with its UDID) sends the text, verification is not granted.

The “MO” (industry-speak for “mobile originated” or an SMS sent from the cell phone into the network) method of 2FA is still significantly stronger than the “MT” (mobile-terminated) method employed by many 2FA companies.

I suppose that would overcome the issue of the vendor that supplies one-time-password tokens having its network hacked, its encryption keys/seed codes and who knows what else stolen, and then being slow to admit it.

I’m not sure I understand how your authenticating system would determine that

“The code displayed must then be sent by the mobile that is associated with that account…. (not a clone or a spoofed number….”

Does this approach need to wait until the USA has another set of mobile phone identifiers [proposed] allowing carriers to brick stolen phones? Perhaps that identifier would be fairly resilient as a uniquely device specific code.

The system is fully functional now and operates in the US and UK (other countries can be enabled easily with the addition of a local number). The UDID is sent in the text message as part of the message string that is uneditable.

If the user loses their phone and reports to the carrier, who then transfers the number to another device, our system handles the transfer transparently. No action on the part of the user is required.

I think it’s important to remember that Google doesn’t demand that you use a mobile # as your phone recovery option. I personally use a land line, but I don’t do that for added security. Instead, I choose that method because I use the Google authenticator on my cell phone, and my theory is that if I ever need to use recovery by phone then there’s a good chance that I’ll have lost access to my mobile phone for some reason.

I do think that using a land line makes you less susceptible to having your voice mail intercepted, but in a case like this I have no doubt that the hackers would have just had the land line forwarded if they needed to. It seems like they were determined enough to go that route if they needed to. Still, that may have been harder than resetting a mobile voice mail PIN and then spoofing the number (then again, maybe it wouldn’t have been).

I’m glad Google fixed the 2FA flaw, but this story reminds me of what the infamous hacker Kevin Mitnick said in one of his books (paraphrasing): If someone has enough skill and enough determination they will find a way in; all you can do is make it as hard for them as possible.

Along those lines, if you’re into security then keep your eyes open to building design. Ever seen a building that might contain information that is both sensitive and valuable, and it has some unique landscaping done around it? Like big boulders or thick posts on the outside of the building? Buildings like that often look cool and unique, but most people don’t even realize that the design makes it pretty hard to physically penetrate. Like Mitnick said, if someone is determined enough they will get in, even if it means driving a truck through the wall of your building. All you can do is make it as hard as possible for them.

Thank you for reporting on this; it is certainly interesting (and scary) to see how much effort and inventiveness hackers employ. There are three things I did not understand. Am I too thick, or are there aspects that are still not entirely clear?

1. What role did the call at 11:39 a.m. play in the hacking incident? Was the hacker probing to see whether the phone number he had was correct, or is there a more technical explanation?

2. What was the exact chain of events?

a) Mr. Prince's private Gmail account compromised (how?). Even without 2FA, I would imagine someone as cautious as Mr. Prince seems to be would have avoided security questions with easy-to-guess or easy-to-research answers.

b) Test phone call by the hacker to Mr. Prince to check his response?

c) Mr. Prince's business Gmail account compromised by sending reset instructions to his private Gmail account (already compromised).

d) Mr. Prince alerted by voicemail (10 times) and wrestling back control of his (business) account. Mr. Prince used 2FA to reset the password and was unaware that the private address was compromised.

e) The hacker social-engineers AT&T, or by other means redirects voicemail, so that Mr. Prince remains oblivious to further password resets.

f) Mr. Prince assumes the hacker gave up, and the intruder enjoys a few hours of access to both the business and private Gmail accounts.

g) The accounts' rightful owner is alerted that something is amiss by an anomaly in his voicemail greeting and retakes control. Is this the way the breach occurred?

3. How could someone otherwise cautious assume all was okay after being alerted 10 times in 15 minutes that his password had been changed?

George, this might help (it’s what Alpha was referring to)

http://blog.cloudflare.com/the-four-critical-security-flaws-that-resulte

https://getfile2.posterous.com/getfile/files.posterous.com/temp-2012-06-04/rcBuoJHABhwgmaethlqeBGzinwuBiJvbsfycoAwwvbcuiJvkekwBmtDhmtuJ/attack-timeline.png.scaled500.png

The phone call was an automated call from Google letting him know that the password for his business email had been changed. Presumably he had his personal account set up to send a verification code to his cell phone if he ever needed to do a password reset.

This is the way I interpret the chain of events (anyone can correct me if I’m wrong):

1. Hacker manages to trick Google into associating an email address that he controls with Prince’s personal email account

2. Hacker social engineers AT&T into bypassing the PIN for the cellular voice mail

3. Hacker sends password reset request for Prince’s corporate Google Apps email to Prince’s personal email

4. Hacker then initiates password reset request for Prince’s personal email

5. Prince gets the voicemail saying his personal password has been reset, and he starts fighting for control of his personal account

6. This part is a bit blurry, but if you read the blog entry then you see that the hacker eventually gained control and Prince’s voice mail started getting forwarded to another number. So I’m guessing the hacker gained control of Prince’s personal email by keeping him from receiving the password reset verification code.

7. Once the hacker had the personal email account he was able to use it to verify the resetting of the corporate Google Apps account (apparently this is where the flaw came in, because 2FA was disabled on the corporate account during this process)

8. Once the hacker had the corporate email account it was game over. He was able to access the Google Apps administrative panel and use that to initiate a password reset request for the Google Apps account of his target. CloudFlare was sending copies of those requests to an administrative account, so the hacker was quickly able to access that account and verify the password reset.

9. The hacker was able to access his target’s account and change his DNS records, which appears to have been his ultimate goal
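The chain above is easiest to see as a graph in which controlling one asset lets the attacker take over the next. A small breadth-first search over a hypothetical model of this reading of the attack shows that every link matters: removing the phone number as a recovery method (the step Prince says CloudFlare took) cuts the path. The asset names and edges are a simplification for illustration, not an official reconstruction.

```python
from collections import deque
from typing import Dict, List, Set


def reachable(edges: Dict[str, List[str]], start: str) -> Set[str]:
    """Return every asset an attacker controls after starting from `start`."""
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for nxt in edges.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


# "A -> B" means: controlling A lets the attacker reset or take over B.
ATTACK_GRAPH = {
    "carrier support staff": ["CEO voicemail"],          # social engineering
    "CEO voicemail": ["CEO personal Gmail"],             # phone-based recovery
    "CEO personal Gmail": ["CEO Google Apps account"],   # recovery email; 2SV dropped
    "CEO Google Apps account": ["admin mailbox"],        # admin panel access
    "admin mailbox": ["target's account"],               # copies of reset emails
    "target's account": ["target's DNS records"],
}


if __name__ == "__main__":
    start = "carrier support staff"
    print("target's DNS records" in reachable(ATTACK_GRAPH, start))  # True
    # Drop the phone number as a recovery method for the personal account.
    hardened = {k: v for k, v in ATTACK_GRAPH.items() if k != "CEO voicemail"}
    print("target's DNS records" in reachable(hardened, start))  # False
```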

There’s a diagram on the blog post that Brian linked to. It helps explain the sequence of events.

It’s not that hard to have the phone company redirect calls to a different phone number. (Matthew’s voice mailbox and its password were NOT the issue; it was AT&T initiating the call-forwarding from Matthew’s phone number to a phone number under the control of the attacker.)

It’s not so strange for a phone company to forward a phone to a second number because of a call from a third number. That’s how businesses, whose voice mail systems and phone consoles depend on electricity, can forward their phones to their answering services during a power failure.

The phone company may be relying on the fact that the destination number is a form of authentication; it only makes sense to forward phones to numbers under one’s own control. But by using this hacker’s technique as well as caller ID spoofing, one could take over a succession of phone numbers and voice mailboxes on different phone companies, making it difficult to determine where the messages were actually going and who might have been able to listen to them.

Brian wrote his post after mine, so I didn’t have access to the diagram when I wrote my chain of events. However, if that diagram is correct then I’m still unclear as to how the CEO got the voice mail. If his number was being forwarded then he never should have received it.

A more logical interpretation is that the hacker social engineered AT&T into clearing the voice mail PIN and then he spoofed the cell # and called in to check Prince’s voice mail. That explains how Prince could get the voice mail and the hacker could too.

Also, that diagram does not match Prince’s version of events in his blog post. If the diagram is by him then I hope he updates his original post to reflect the correct chain of events. His blog post says that the recovery email was changed on his account back in May.

Regardless, I agree with you that it’s not hard to forward a number. The only reason I use a land line is in case my mobile is lost. However, if you’re in the U.S. and you try to forward a number by calling customer support then you should be prompted to answer security questions. In my last job I had to call Telcos a lot and after 9/11 they got pretty strict. I think that it would be at least as hard to forward a number as it would be to bypass a voice mail PIN.

Does 2 step authentication work with IMAP capable email programs like Thunderbird?

Great question, Jeff. Yes, it does. Google’s system has a feature called “application specific passwords,” and you basically create a label for the app you want to add, then it will generate a longish random passphrase. The next time Thunderbird asks for your password (you can clear the password box in Thunderbird if you have it saved there, and then force the application to check for new mail), enter the random passphrase that Google assigned to that account, instead of the password you would normally enter into Thunderbird.
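For mail clients that speak IMAP, the application-specific password simply takes the place of the account password at login. A minimal sketch using Python's standard-library `imaplib` against Gmail's IMAP server (`imap.gmail.com`, port 993); the `GMAIL_USER` and `GMAIL_APP_PASSWORD` environment variable names are assumptions made for this example.

```python
import imaplib
import os

GMAIL_IMAP_HOST = "imap.gmail.com"  # Gmail's IMAP server (SSL on port 993)


def count_inbox_messages(user: str, app_password: str, host: str = GMAIL_IMAP_HOST) -> int:
    """Log in with an application-specific password and return the INBOX message count."""
    conn = imaplib.IMAP4_SSL(host, 993)
    try:
        conn.login(user, app_password)  # the generated passphrase, not the account password
        typ, data = conn.select("INBOX", readonly=True)
        if typ != "OK":
            raise RuntimeError(f"SELECT failed: {data!r}")
        return int(data[0])  # server reports the message count as bytes
    finally:
        conn.logout()


if __name__ == "__main__":
    user = os.environ.get("GMAIL_USER")
    app_pw = os.environ.get("GMAIL_APP_PASSWORD")
    if user and app_pw:
        print(count_inbox_messages(user, app_pw))
    else:
        print("Set GMAIL_USER and GMAIL_APP_PASSWORD to try this.")
```

The client never sees a second-factor prompt. The long random passphrase stands in for a login that has already passed 2-step verification, which is the trade-off the following comments discuss.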

Brian,

I may be off base in wondering whether this qualifies as multi-factor auth. If the cached password is simply replaced with a strong password that is supposed to be app/device specific and that device is compromised, how does a second factor come into play to secure the channel?

Would you consider this to be Google’s accommodation of communication channels that simply won’t support multi-factor auth? In which case maybe it should come with a stronger warning label – If you want the convenience of using a cached password in your tablet, be aware that you are creating a weaker message channel.

Haven’t thought it through end-to-end – just wondering.

As always, thanks for your reporting!

Semantics aside, it’s a darn sight more than what most others are offering, which is to say nothing.

Just today I was having a conversation with a source about the many sites in the underground that appear to offer SSNs, DoBs, MMNs and other very sensitive data such as credit reports, almost certainly because they are compromising accounts/PCs of people who have legitimate accounts at expensive and sometimes exclusive businesses that resell this information legally. Why aren’t those users required to have some kind of 2-factor, 2-step, out-of-band, whatever you want to call it?

I realize I’m sort of changing the subject a bit, but your question struck a nerve because I find it frankly ridiculous that Microsoft and Yahoo aren’t offering something similar.

You’re absolutely right – don’t get me wrong – there’s a reason I don’t use Microsoft or Yahoo e-mail.

The Gmail free accounts have always been a better offering. IMAP support, the ability to track activity and the methods used to access the account, plus the HTTPS option are three examples of where they have stood out, and continue to stand out, from others.

I just am trying to highlight that if this guy has motivated, skilled attackers and uses apps to access either enterprise or personal accounts ‘backing-up’ his Gmail enterprise account, it sounds like he needs to be paranoid. I’m not sure the cached app password can be compromised unless some one gains control of the app/browser/device. Nonetheless it may be a lesser degree of protection. Just because Google offers it as a convenience, e.g. POP3, doesn’t mean it fits in a firm’s security policy. The BYOD trend increasingly magnifies potential problems, particularly if the enterprise is not able to secure those user devices with a content manager to encrypt and enable wiping.

As a side note – despite personal use, I had no idea what to make of Google as an enterprise offering until they bought Postini; that made me sit up and take notice.

It isn’t technically two factor, but it’s better to think about it as cached credentials from two factors.

Each page you visit on Google while you’re logged in requires authentication, but Google doesn’t prompt you at each page; instead it gives you a cookie. That cookie represents a successful two-factor authentication. The generated key that you give to Thunderbird (or Google Talk, ...) falls into the same category.

When you close your browser or restart your computer and then visit Google again, it doesn’t typically challenge you for two factor authentication – it trusts your computer’s security. The same applies to the Application specific passwords.

Note that you can rescind them at any time from the same UI that generated them. Google will also tell you the IP addresses of recent sessions along with location and application type (desktop, mobile, ...).
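The model described here (application-specific passwords as long-lived, individually revocable credentials issued after a two-factor login) can be sketched as a small store. This is a hypothetical design, not Google's implementation: `AppPasswordStore` is an invented name, the 16-lowercase-letter format is an assumption, and only SHA-256 digests are kept, which is reasonable here because the passwords are long and random rather than user-chosen.

```python
import hashlib
import hmac
import secrets
import string
from typing import Dict, List


class AppPasswordStore:
    """Per-application passwords that can be listed and revoked individually."""

    def __init__(self) -> None:
        self._digests: Dict[str, bytes] = {}  # label -> SHA-256 of the password

    def issue(self, label: str) -> str:
        """Create a random 16-letter password for `label`; it is shown to the user once."""
        pw = "".join(secrets.choice(string.ascii_lowercase) for _ in range(16))
        self._digests[label] = hashlib.sha256(pw.encode()).digest()
        return pw

    def check(self, password: str) -> bool:
        """Accept the password if it matches any label that has not been revoked."""
        digest = hashlib.sha256(password.encode()).digest()
        return any(hmac.compare_digest(digest, d) for d in self._digests.values())

    def revoke(self, label: str) -> None:
        """Rescind one application's password without affecting the others."""
        self._digests.pop(label, None)

    def labels(self) -> List[str]:
        return sorted(self._digests)


if __name__ == "__main__":
    store = AppPasswordStore()
    tb = store.issue("Thunderbird")
    store.issue("phone")
    store.revoke("Thunderbird")
    print(store.check(tb), store.labels())  # False ['phone']
```

Revoking one label cuts off a single lost or compromised device while every other client keeps working, which is what makes these cached credentials tolerable.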

I’ve been using two factor authentication from Google for a few years (on all of my accounts) and am quite happy with it.

All the comments are great, but I am also concerned about what data was taken from his e-mail. Such a sophisticated and time-consuming attack makes me think that there were interesting emails.

The bigger question here is: why the heck is the CEO of a company using the Gmail service at all and putting any company info on its servers?

Why isn’t he using his own company’s server for sensitive email, one that he presumably has control over, including long password, SSL transport, SFTP ability to get in behind the scenes and change the password.

Plus, to boot, he doesn’t have to put any sensitive information on a Google server, which a) may still be pwned by the Chinese hackers for all we know, b) is owned by the notorious DoubleClick and subject to its Google advertiser scanning (along with any additional vulnerabilities that it might bring), and c) good luck getting hold of someone live to do something about it right away once the breach occurs.

The lesson here is not to rely on a proven untrustworthy Google for any critical information in non-pre-encrypted 256-bit AES server storage, period.

Unless it is a throw away email address, you should only use email in which you have 24 hour access to a live person who can help you with access to the server in such cases.

Apparently, the CEO doesn’t trust his own IT staff, so maybe the question ought to be asked: why should anyone else?

@Mouzer – While we in the tech world would like to think that all companies large and small have the luxury of setting up their own servers, maintaining the infrastructure, etc., it’s just not the case. The unfortunate fact – as Google’s domains and apps sales attest – is that most small businesses simply want to turn these functions over to someone else and focus on their own revenue-generating activities.

It is for that reason that 3rd party 2FA systems exist.

Either he’s technical enough to make that decision himself or he’s not. If he’s not, who does he trust: his hiring protocol or Google?

Running your own servers for public facing services requires expert knowledge in server security as well as in the specific service.

The company’s expertise is in content delivery. It’s far safer and more secure to outsource requirements in which you don’t have expertise to trusted 3rd parties.

Again, it being a small, recent, start-up company, his gmail address probably is much older than Cloudflare itself and still gets more email than his Cloudflare address. And gmail’s spam filtering is better than most people could do for themselves.