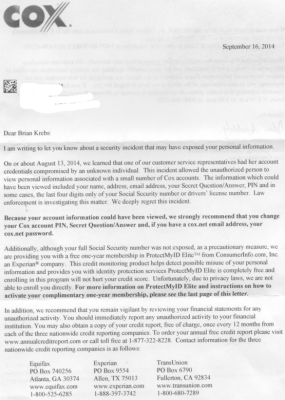

In September 2014, I penned a column called “We Take Your Privacy and Security. Seriously.” It recounted my experience receiving notice from my former Internet service provider — Cox Communications — that a customer service employee had been tricked into giving away my personal information to hackers. This week, the Federal Communications Commission (FCC) fined Cox $595,000 for the incident that affected me and 60 other customers.

I suspected, but couldn’t prove at the time, that the band of teenage cybercriminals known as the Lizard Squad was behind the attack. According to a press release issued Thursday by the FCC, the intrusion began after Lizard Squad member “Evil Jordie” phoned up Cox support pretending to be from the company’s IT department, and convinced both a Cox customer service representative and Cox contractor to enter their account IDs and passwords into a fake, or “phishing,” website.

“With those credentials, the hacker gained unauthorized access to Cox customers’ personally identifiable information, which included names, addresses, email addresses, secret questions/answers, PIN, and in some cases partial Social Security and driver’s license numbers of Cox’s cable customers, as well as Customer Proprietary Network Information (CPNI) of the company’s telephone customers,” the FCC said. “The hacker then posted some customers’ information on social media sites, changed some customers’ account passwords, and shared the compromised account credentials with another alleged member of the Lizard Squad.”

My September 2014 column took Cox to task for not requiring two-step authentication for employees: Had the company done so, this phishing attack probably would have failed. As a condition of the settlement with the FCC, the commission said Cox has agreed to adopt a comprehensive compliance plan that establishes an information security program. The program includes annual system audits, internal threat monitoring, penetration testing, and additional breach notification systems and processes to protect customers’ personal information. The FCC will monitor Cox’s compliance with the consent decree for seven years.

It’s too bad that it takes incidents like this to get more ISPs to up their game on security. It’s also too bad that most ISPs hold so much personal and sensitive information on their customers. But there is no reason to entrust your ISP with even more personal info about yourself — such as your email. If you need a primer on why using your ISP’s email service as your default or backup might not be the best idea, see this story from earlier this week.

If cable, wireless and DSL companies took customer email account security seriously, they would offer some type of two-step authentication so that if customer account credentials get phished, lost or stolen, the attackers still need that second factor — a one-time token sent to the customer’s mobile phone, for example. Unfortunately, very few if any of the nation’s largest ISPs support this basic level of added security, according to twofactorauth.org, a site that tracks providers that offer it and shames those that do not.
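To illustrate the second factor described above, here is a minimal Python sketch of a one-time code check. It assumes the standard HOTP and TOTP algorithms (RFC 4226 and RFC 6238) and uses only the standard library. The `login` helper and its parameters are hypothetical, and nothing here reflects how Cox or any other ISP actually handles authentication.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """Counter-based one-time code (RFC 4226)."""
    # HMAC-SHA1 over the counter encoded as an 8-byte big-endian integer.
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    # Dynamic truncation: the low 4 bits of the last byte select an offset.
    offset = mac[-1] & 0x0F
    value = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(value % 10 ** digits).zfill(digits)


def totp(secret: bytes, now: Optional[float] = None, step: int = 30) -> str:
    """Time-based one-time code (RFC 6238): HOTP over 30-second windows."""
    if now is None:
        now = time.time()
    return hotp(secret, int(now // step))


def login(password_ok: bool, submitted_code: str, secret: bytes,
          now: Optional[float] = None) -> bool:
    """Hypothetical login check: a phished password alone is not enough."""
    expected = totp(secret, now)
    # compare_digest avoids leaking information through comparison timing.
    return password_ok and hmac.compare_digest(submitted_code, expected)
```

With a check like this, an attacker who phishes an employee’s password still needs the current code from that employee’s device. A real deployment would also need rate limiting, tolerance for clock drift, and secure storage of each user’s secret, none of which this sketch covers.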

Then again, perhaps the FCC fines will push ISPs toward doing the right thing by their customers: According to The Washington Post’s Brian Fung, the FCC is offering in this action another sign that it is looking to police data breaches and sloppy security more closely.

Brighthouse Cable here in Florida does not have 2FA on their online accounts

Thanks for the info about twofactorauth.org! I’m actually kind of shocked that so many organizations with PII haven’t taken this step yet.

While it’s great that the FCC has taken some action, my opinion is the fine levied is not substantial enough to get a reaction out of other organizations. 595k seems like a drop in the bucket for Cox, especially considering the revenue of the greater Cox Enterprise.

It seems that fines levied against organizations related to a hack or breach are minimal and equate to a slap on the wrist. I’m curious to see if this will change going forward and the penalties will increase over time.

I agree the fine is too small to be anything other than a slap on the wrist. Since most large companies now have some form of ‘hacking’ insurance, I wonder if the insurance also covers the penalty or if that is excluded from the policy.

I don’t know how it works in the US but in the UK directors seem to get a free ride and it is the shareholders that effectively bear the cost of these fines.

Directors have a fiduciary duty to shareholders to look after the company.

So:

When shareholders vote on directors remuneration, perhaps they should vote for a total for the “remuneration pool”. This pool should first be used to pay fines such as these, then directors salaries, benefits etc, then if anything is left their bonuses. If the fines in a year exceed the pool any deficit should be clawed back from deferred bonuses such as share options.

Shareholders should not end up paying these sorts of fines!

560k is a lot of money though. Idk… As long as they got fined a good amount I’m happy tbh

https://www.youtube.com/channel/UCijmVN7B2_TF5NqwpE9AwLA

This brings up an interesting dilemma: now that Cox has better security than any of the other providers in the Va area, will you be moving back to them?

I have a better solution than shaming or sending an inconsequential note by clicking on something: the FCC should require 2FA rather than inconsequential fining after the fact. Yes, more gov’t meddling. But it sure seems necessary in this case.

Leave the government out of it. As a new generation of internet users comes online with a pre-built aversion, concern and suspicion regarding online security, these sorts of customer-side snafus and security mishaps will affect companies’ bottom lines enough to make them pay attention to these sorts of issues. Companies will flock to security experts and their firms as a new market for “security as a service” dawns in the upcoming years. Everyone wins except the companies who do not take security seriously, and anyone who gets caught with their guard down (good or bad guys).

In my experience the government does not have the vision, real-world knowledge, skills or follow-through to properly implement anything to a benefit. Any laws that are passed will simply contain bureaucratic loopholes or, like many laws these days, be passed but never enforced. The Federal Government can pass as many laws as it wants; that doesn’t mean they will be followed or even enforced. True change in internet/computer network security is only going to come at a real-world, private sector level.

“Leave the government out of it.”

+1

Make that +100!

+1*10^100!

How old are you? Without regulation you won’t get any improvement.

Hint: look at the CF that was the breakup of AT&T and compare that to the UK’s.

I’m old enough to remember when AT&T controlled everything. They were amazingly bad at physical security. A friend of mine penetrated their security so often they ended up sealing all access points near him in concrete, so nobody – not even AT&T – could gain access. AT&T felt that the weeks it would take to regain access in the event of a fault at that point was worth it.

One need only look at just how horrible AT&T still is to see that things haven’t changed one bit. They were worthless then, they’re still worthless now.

The real issue is the enforcement. If that works, companies do fix things. I experienced that first-hand with a net-neutrality complaint against AT&T. Without that, my Internet connection would still go down 4 times a day…

The public is not going to force change. Most people, even young people, don’t know enough about Internet security, nor do they seem to care much (they post everything publicly on FB, for example).

They got fined only $595K? You know that it’s their income literally for 2 hours of operation. Those guys at Cox are probably laughing right now.

Great job, Brian.

So here’s my quandary in this: for Cox and other large utilities that have 24/7/365 on-site staffing… why would this information and these capabilities even be exposed to the public Internet? There is simply no operational need for this stuff to live outside the firewall. Yes, there are service techs that need access but at the scale these guys work at they can have MPLS / intranet over cellular (yes, that is a thing at a certain scale).

And, yes, yes, people can compromise machines inside the firewall and still get the data… but why make it easy? A big part of good security is a layered defense, and the biggest and best layer for right now is having specific and direct control over which endpoints can get to your data.

Simple answer. It doesn’t matter where you store your data, if your staff has no idea what “social engineering” is, or when they’re being played by such an attack. That’s how Evil Jordie was able to bypass all their security and get straight into their database.

From the article above: “[Evil Jordie] phoned up Cox support pretending to be from the company’s IT department, and convinced both a Cox customer service representative and Cox contractor to enter their account IDs and passwords into a fake, or “phishing,” website.”

Would it make a difference where this data was stored? I think not.

It doesn’t matter where it’s *stored*, it matters where it’s *accessible from*. In this case, it was accessible from the public Internet. Lizard Squad Dude was able to get a username and password for an internal user and suddenly they can has data. At the very least Lizard Squad Dude should have also needed control of an internal machine, which is a significantly larger hurdle to overcome.

If due diligence and due care were exercised by the individuals that were affected, I think they should get a slice of the fine. Say, a 10% cut split evenly among those affected. Even if the cut was applied to future billings, that’s a decent chunk of change.

As it is, once Cox lawyers and the FCC get together, most of these conditions are agreed upon in advance before it hits the press.

It’s too bad that BOTH people, who should know better than to take the word of someone over the phone, were lax about security and fell for the bait. If it’s an outside URL they visited, shame on them! Why does someone need Cox internal credentials on a URL that’s not part of the Cox domain, or one they have never used before?

Cox is lucky the creep did not get the keys to the kingdom…..

=\

I am a new subscriber to Cox and the account security is atrocious. I have gained access to my account while withholding verification information no less than 3 times since I set up service.

I doubt Cox will be able to comply, given the call center negligence, without completely redesigning its call center software to withhold information from call center employees and to require two-factor auth for changing accounts and viewing account holder PII.

Brian – How do you know that only 61 persons were affected? Or, am I misreading the first paragraph where it states “for the incident that affected me and 60 other customers”.

The victimized party gets data compromised, deals with the headache. And they have “to remain vigilant”. The most they receive is a year or two of “identity protection”.

The negligent party doesn’t change anything. Pays a pittance of a fine. After all 500K is cheaper than doing something about it in the long term.

The government prosecutes and they receive the fine.

Who wins and who loses no matter the scenario?

I literally received 3 protectmyid emails due to various company negligence. I can’t extend it. Otherwise I’d be good for half a decade.

It seems the negligent party as a token of their benevolence, customer support, should discount, upgrade, or provide some amount of services for free. Of course I jest.

Hey Brian, how much of that money will affected customers see? (My best guess is $0…)

These kinds of “social engineering” hacks have been used for quite a few years and by many of the most notorious hackers. I doubt it will change any time soon.

As for Cox:

There is a certain kind of thinking within the board room meetings of communications companies like this. They are not only bound by contracts that last years but are also convinced of the superiority of the software they use. Most of which is out-dated and can barely hold the strain of an increased load of a growing customer base. Cloud based services become quite tantalizing when seen as a way to centralize control and remove human error (such as this article presents). It is interesting to me to find that a shift into using “apps” seems to be seen as the answer instead of actually fixing the problem. Personally, I would be inclined to think that companies like this would be able to do better than this by now (with their age, scope, and size)…..but I guess that’s just a dream within a dream.

New hire employees are certainly given ‘some’ training on PII but it’s not always clearly understood. What I find interesting is that we have at our disposal plenty of good technology to deal with PII properly and yet…..it’s NOT done. But, to toe the company line is to say to a customer that “your information is safe with us”. Whether it actually is or not becomes an entirely different question. Surely there have been enough high profile incidents to provide ample proof of this?

As for the fine:

It really doesn’t mean anything. Cox is not a mom-and-pop operation.

I had Cox for a while and it struck me that they were first an entertainment company and communication was an afterthought. Anyway the fine bites a little because Cox is privately held, not a corporation.

While the point may very well be there, what exactly will the fine do to fix it? All it means is Cox will charge their customers more to cover the costs…

And 595k? That’s basically nothing. I’m NOT proposing more be demanded. What I am saying is: NO amount of fine will ever change the competency of support lines in defending against social engineering maneuvers. It’s just a way for the gov to get some dough.

Ugh that sucks…the penalty sucks. They will make that back in an instant. I know for a fact.

Why the fine itself really doesn’t matter much:

http://www.darkreading.com/risk/what-the-boardroom-thinks-about-data-breach-liability/d/d-id/1323037?

I have had a sordid past with Cox – I finally started calling them Cox*ker Communications after that – still do. They are the worst cable provider I’ve had since TCI went out of business! Both of them deserve to go out of business!

I literally switched to on air TV, other cable/internet, or satellite in every market where I’ve encountered them, and convinced all my friends and clients to do the same. We have not regretted it since!

I find it funny that companies these days think it is ok to just provide “Identity Protection” and then move on. Here we are given ID protection that does nothing while companies continue with slaps on the wrist for poor security.

I enjoyed reading your Reddit postings, Brian. With all the investigative reporting you do, as well as visiting cybercrime forums, I was curious whether you have ever been flagged or confronted by your ISP.

Are you asking about the ISP that hosts this site, or the ISP that provides my home an internet connection? Both have been very good to me.

Cox still doesn’t support basic TLS encryption for their email. Many less technical users use their ISP’s email and don’t realize all of their email is sent in plain text. Dangerous.