The new $30 AirTag tracking device from Apple has a feature that allows anyone who finds one of these tiny location beacons to scan it with a mobile phone and discover its owner’s phone number if the AirTag has been set to lost mode. But according to new research, this same feature can be abused to redirect the Good Samaritan to an iCloud phishing page — or to any other malicious website.

The AirTag’s “Lost Mode” lets users alert Apple when an AirTag is missing. Setting it to Lost Mode generates a unique URL at https://found.apple.com, and allows the user to enter a personal message and contact phone number. Anyone who finds the AirTag and scans it with an Apple or Android phone will immediately see that unique Apple URL with the owner’s message.

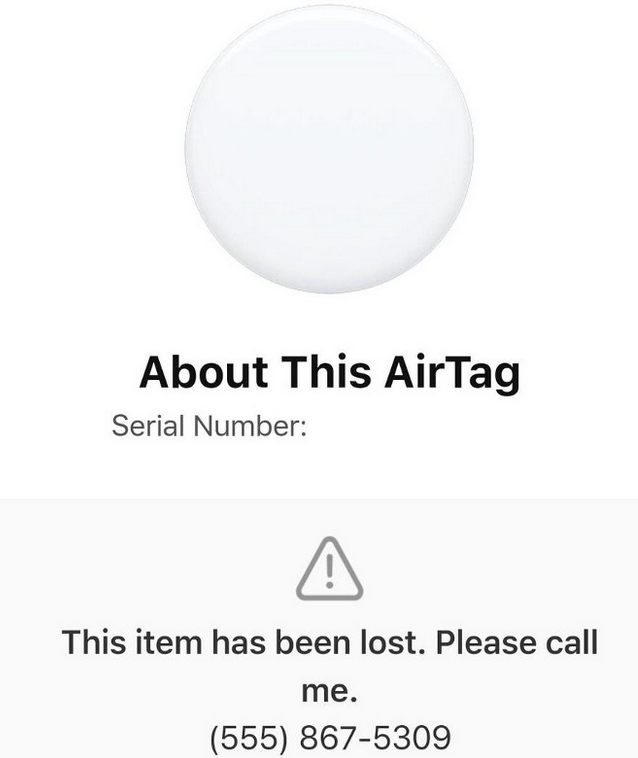

When scanned, an AirTag in Lost Mode will present a short message asking the finder to call the owner at their specified phone number. This information pops up without asking the finder to log in or provide any personal information. But your average Good Samaritan might not know this.

That’s important because Apple’s Lost Mode doesn’t currently stop users from injecting arbitrary computer code into its phone number field — such as code that causes the Good Samaritan’s device to visit a phony Apple iCloud login page.

A sample “Lost Mode” message. Image: Medium @bobbyrsec

The vulnerability was discovered and reported to Apple by Bobby Rauch, a security consultant and penetration tester based in Boston. Rauch told KrebsOnSecurity the AirTag weakness makes the devices cheap and possibly very effective physical trojan horses.

“I can’t remember another instance where these sort of small consumer-grade tracking devices at a low cost like this could be weaponized,” Rauch said.

Consider the scenario where an attacker drops a malware-laden USB flash drive in the parking lot of a company he wants to hack into. Odds are that sooner or later some employee is going to pick that sucker up and plug it into a computer — just to see what’s on it (the drive might even be labeled something tantalizing, like “Employee Salaries”).

If this sounds like a script from a James Bond movie, you’re not far off the mark. A USB stick with malware is very likely how U.S. and Israeli cyber hackers got the infamous Stuxnet worm into the internal, air-gapped network that powered Iran’s nuclear enrichment facilities a decade ago. In 2008, a cyber attack described at the time as “the worst breach of U.S. military computers in history” was traced back to a USB flash drive left in the parking lot of a U.S. Department of Defense facility.

In the modern telling of this caper, a weaponized AirTag tracking device could be used to redirect the Good Samaritan to a phishing page, or to a website that tries to foist malicious software onto her device.

Rauch contacted Apple about the bug on June 20, but for three months when he inquired about it the company would say only that it was still investigating. Last Thursday, the company sent Rauch a follow-up email stating they planned to address the weakness in an upcoming update, and in the meantime would he mind not talking about it publicly?

Rauch said Apple never acknowledged basic questions he asked about the bug, such as whether they had a timeline for fixing it, and if so whether they planned to credit him in the accompanying security advisory. Nor did the company say whether his submission would qualify for Apple’s “bug bounty” program, which promises financial rewards of up to $1 million for security researchers who report security bugs in Apple products.

Rauch said he’s reported many software vulnerabilities to other vendors over the years, and that Apple’s lack of communication prompted him to go public with his findings — even though Apple says staying quiet about a bug until it is fixed is how researchers qualify for recognition in security advisories.

“I told them, ‘I’m willing to work with you if you can provide some details of when you plan on remediating this, and whether there would be any recognition or bug bounty payout’,” Rauch said, noting that he told Apple he planned to publish his findings within 90 days of notifying them. “Their response was basically, ‘We’d appreciate it if you didn’t leak this.'”

Rauch’s experience echoes that of other researchers interviewed in a recent Washington Post article about how not fun it can be to report security vulnerabilities to Apple, a notoriously secretive company. The common complaints were that Apple is slow to fix bugs and doesn’t always pay or publicly recognize hackers for their reports, and that researchers often receive little or no feedback from the company.

The risk, of course, is that some researchers may decide it’s less of a hassle to sell their exploits to vulnerability brokers, or on the darknet — both of which often pay far more than bug bounty awards.

There’s also a risk that frustrated researchers will simply post their findings online for everyone to see and exploit — regardless of whether the vendor has released a patch. Earlier this week, a security researcher who goes by the handle “illusionofchaos” released writeups on three zero-day vulnerabilities in Apple’s iOS mobile operating system — apparently out of frustration over trying to work with Apple’s bug bounty program.

Ars Technica reports that on July 19 Apple fixed a bug that illusionofchaos reported on April 29, but that Apple neglected to credit him in its security advisory.

“Frustration with this failure of Apple to live up to its own promises led illusionofchaos to first threaten, then publicly drop this week’s three zero-days,” wrote Jim Salter for Ars. “In illusionofchaos’ own words: ‘Ten days ago I asked for an explanation and warned then that I would make my research public if I don’t receive an explanation. My request was ignored so I’m doing what I said I would.'”

Rauch said he realizes the AirTag bug he found probably isn’t the most pressing security or privacy issue Apple is grappling with at the moment. But he said neither is it difficult to fix this particular flaw, which requires additional restrictions on data that AirTag users can enter into the Lost Mode’s phone number settings.

“It’s a pretty easy thing to fix,” he said. “Having said that, I imagine they probably want to also figure out how this was missed in the first place.”
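To give a sense of what "additional restrictions" on the phone number setting might look like, here is a minimal sketch of allow-list validation. It is not Apple's code: the function name `normalize_phone` and the 7-to-15 digit range (loosely based on common international number lengths) are assumptions made for illustration.

```python
import re

# Separators people commonly type in phone numbers: spaces, parentheses, dots, hyphens.
_SEPARATORS = re.compile(r"[\s().-]")
# Allow-list: an optional leading "+", then 7 to 15 ASCII digits (assumed range).
_PHONE = re.compile(r"\+?[0-9]{7,15}")


def normalize_phone(raw: str) -> str:
    """Return the number with separators removed, or raise ValueError."""
    stripped = _SEPARATORS.sub("", raw.strip())
    if not _PHONE.fullmatch(stripped):
        raise ValueError("not a valid phone number")
    return stripped
```

With a check like this applied on the server, a value such as `(617) 555-0100` is accepted, while anything containing markup or script is rejected before it is ever stored.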

Apple has not responded to requests for comment.

Update, 12:31: Rauch shared an email showing Apple communicated their intention to fix the bug just hours before — not after — KrebsOnSecurity reached out to them for comment. The story above has been changed to reflect that.

Cr-apple-tastic!!!

crapple and tackle?

Starlight would not approve!

$tarlight do not approve!

867-5309, love it. Showing your age.

I guess we can be glad the area code wasn’t included. Of course, that would have messed up the beat.

Yes, this is a serious bug in Apple’s web site, to allow XSS in the phone number field. Bad security and pretty stupid.

But there is still the problem of scanning NFC tags or QR codes and having the phone OS, or the app, automatically take you to a website. Most should display the plaintext destination/action, and prompt to allow or block.

In this case, it might not matter much since found.apple.com is not going to raise red flags.

But I do remember finding writable NFC tags embedded in the tables at Panera Bread. Something about the devices that would normally light up and buzz, and require the customers to go up to the counter to pick up their food…. re-imagined so that the device would wirelessly send the food servers your table number instead. The customer just places the device on the table (over the label with the tag embedded underneath) when they sit down after ordering.

There are also printers with NFC tags built in that automatically open up that vendor’s printer app on the phone when scanned.

When they leave the writable flag on, it’s only a matter of time until someone rewrites the tag to send everyone to a Rick Astley Youtube video.

So true. When a restaurant wanted to provide their menu on my smart phone via a QR I said no and I’ll always say no to QRs as you have no idea where that QR will take you. Don’t assume that a thief hasn’t replaced the original QR with their own, even if you were in a bank!

There are good, open source, QR code readers for your phone. And by default, they will only display the plaintext contents of the QR code without auto-navigating using your browser.
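The "show it, don't open it" behavior described above can be sketched in a few lines. This is an illustration only, not how any particular reader app works; `describe_scanned_payload` is a hypothetical helper name.

```python
from urllib.parse import urlsplit


def describe_scanned_payload(payload: str) -> str:
    """Describe a scanned NFC/QR payload for the user instead of opening it."""
    text = payload.strip()
    try:
        parts = urlsplit(text)
    except ValueError:
        # Malformed URLs (for example a broken IPv6 host) are shown as plain text.
        return f"Plain text (not opened): {text}"
    if parts.scheme in ("http", "https") and parts.hostname:
        # Surface the host separately so the user can judge the destination.
        return f"Open website on host '{parts.hostname}'? Full URL: {text}"
    return f"Plain text (not opened): {text}"
```

The caller would display the returned string and navigate only after the user explicitly agrees. As noted in the comments here, this would not have flagged the AirTag case, because the host really is found.apple.com.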

I don’t know if this is really much of a bug. I can program a NFC tag with an evil link as well.

If you really want to call this a security issue then the problem is in the tag reading software, not the tag. The last time I tried putting a link on a NFC tag my phone asked if I want to go to the page so it seems like this is a problem already solved, at least on Android with the NXP reader.

That was my initial thought as well. But looking at the blog he wrote when he went “public with his findings”… there is a Stored XSS vulnerability in Apple’s website. The finder of a lost tag does not see the suspicious phishing URL when scanning the tag, because the URL is always going to be that Apple-owned website.

The “bug” is the fact that the tag owner can put arbitrary javascript in the phone number field and cause an immediate redirect to a malicious site.
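For anyone wondering what the standard defense against stored XSS looks like, here is a minimal sketch of escaping the stored value when the page is rendered. Nothing here reflects Apple's actual code; `render_phone_field` and the `phone` class name are hypothetical.

```python
import html


def render_phone_field(stored_value: str) -> str:
    """Build the HTML fragment that shows the owner's contact number."""
    # html.escape turns &, <, > and both quote characters into entities,
    # so the browser displays the value as text rather than running it.
    safe = html.escape(stored_value, quote=True)
    return f'<span class="phone">{safe}</span>'


payload = "<script>window.location='https://example.com/'</script>"
print(render_phone_field(payload))
```

Escaping on output complements validating on input: even if a bad value slips into storage, it is rendered inert.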

Now I get it but it seems this isn’t exactly something difficult to fix. It isn’t like you need to send a patch. Just fix the website already! Is Apple that bureaucratic?

I’m THAT person that sniffs random NFC tags. Some security firm places NFC tags for the guard to sniff as they make the rounds, presumably to ensure they actually make the rounds. I could detect the tag but don’t recall getting anything particularly interesting on it.

Yeah, that was the point made by the security researchers. It is super easy to fix.

The issue is that since he is external to Apple, yeah, he’s got to go through the bureaucratic process with so many checks. Which is why some things don’t get credited properly. If someone on the internal team gets wind of it, they can fix it faster, and might skip a lot of the bug bounty program steps that would have ensured credit for Bobby.

What is it with developers not wanting to write Input Validation anymore? Did we forget all the lessons of yesteryear? Are they THAT afraid of regex?

A trillion+ company and shafting the good guys helping to find potential exploits in their software? Excess profits have made Apple careless about walking the talk!

I think Apple has a systemic/cultural issue to deal with as it’s had a lot of these white hat flubs and snubs being reported lately.

I wrote this comment at MacRumors yesterday, building on my original comment there after the WaPo news dropped:

“

Given the import of the issue itself and the attention it has received, this is an issue deserving of a CEO-level response with an apology and an action plan to fix the issue.

It’s nonsense that some lower level employee responded with vague reassurances of an ongoing investigation.

The initial reports surfaced about 4 business weeks ago. That’s plenty of time to grasp the internal issues and release a robust action plan.

This response is not OK. At this point I’d be ok with congress pulling apple’s CEO, CTO and CSO in for a hearing.

I posted the following on the 9th and Apple has proved it to be truer than ever:

“

At this point I can accept no excuse or justification from apple for why it isn’t paying best in class bounties.

Slow-to-scale excuses? Ridiculous.

Smaller than industry rewards? It’s literally a marketplace of exploits. Not every hacker is a white hat. Some are beyond US Justice, others it takes years to catch. When Apple isn’t the first stop for exploits, in such cases the damage is done by the time such holes are closed and the crooks caught.

For God’s sake, people have literally died and been hacked into pieces because of unpatched Apple bugs.

And in the meantime Apple wants us to put our medical histories, identification, house and office keys in our devices…

Yes we can blame NSO and FSB etc, but they are finding what is already there. There is no reason Apple couldn’t find most of it first if it doubled down on this.

Apple is the richest company in the history of humanity. It has the financial resources to rival some nation states. There is no traditional business barrier to Apple doing what it needs to here.

Not able to run a robust bug discovery program that draws the best and most submissions (and conversely staffing internally to handle these)? Apple is fully able.

There is no reason that the above can’t be solved. At this point it is unsolved only because of perceptual and cultural lag, possible arrogance, clear lack of CEO priority, and definite CFO cheapskatedness.

I might add it’s pretty glaring that attention and resources are lacking here even as Apple instead builds proof of concept golden keys inviting state coercion to expand their CSAM intrusion into other areas…

”

”

FYI on the “richest company in history” comment: they are the 5th richest company in history, a little under 10x less valuable than the richest company ever, the Dutch East India Company. Anyway, your point stands.

What I don’t like about the found AirTag routine is that it requires one to expose their phone number.

Would be far preferable for both sides to be able to communicate using the kind of anonymous disposable email addresses one can now create in iOS 15.

Also, it’s kinda ridiculous that the AirTags aren’t locked to the AppleID in FindMy like other apple devices are.

As a result, many folks won’t get their AirTag back, because finders of this $30 device, if they act before Lost Mode is set, will be able to erase the found AirTag and reset it to factory settings by removing and reinstalling the battery 5x.

Update: I just tested Lost Mode on one of my AirTags and see that Apple has improved the contact info during setup.

Now the owner has the option to use A phone number or AN email address. These fields at this time (no idea what happens when apple closes the bug that is the subject of Brian’s story here) allow the user to use any number or any email address (the latter, however being populated with the owner’s AppleID email address but changeable.)

If I recall correctly, the original Lost Mode only allowed use of a phone number (and as few folks have burner numbers this was problematic).

People pay such premiums for Apple devices. A 10 cent NFC tag can hold any arbitrary data. It doesn’t require the finder to go to a website at all, and you can just put whatever phone, email, etc. that you want.

The only downside to not having the finder proxy through a website first, is that there is no marking a tag as “lost”, before the contact info is presented to the finder. But that is trivial because you can use anonymous contact info and won’t care if someone gets that info before you report it as lost.

And if you really want to emulate the behavior of Apple Airtags, you can just use a cheap NFC tag and write a github gist URL (or any free web service that lets you create a page accessible with a unique non-guessable URL).

No need to be in Apple’s ecosystem at all.
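As a rough sketch of the "unique non-guessable URL" idea, Python's standard `secrets` module can generate the random part. The base address `https://example.com/found/` and the helper name `make_contact_url` are placeholders, not a real service.

```python
import secrets


def make_contact_url(base: str = "https://example.com/found/") -> str:
    """Return a contact-page URL ending in an unguessable random token."""
    # 16 random bytes (128 bits) encode to 22 URL-safe characters.
    return base + secrets.token_urlsafe(16)


print(make_contact_url())
```

The resulting URL is what would be written to the tag; the page behind it holds whatever anonymous contact details the owner chooses.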

And your NFC tag will relay through the devices around it allowing you to track your luggage through the air when it gets on the wrong flight?

Oh, that’s cool. It’s a Bluetooth tracker too. I was just considering the NFC part.

The issue is an XSS issue in Apple’s own site. All the AirTag does is send you to a URL based on its serial number; it doesn’t store any data. You fundamentally misunderstood the bug in question.

Looks like you replied to the wrong comment, as I was the first comment here mentioning this is a XSS in the website.

You don’t have to expose your phone number, LOL, you get a Google Voice or similar number.

I miss my IBM 3270

This type of attack will mostly be tried at locations where they know that the victim is worth the $30 outlay. Drop them in New York’s banking district or the stock market perhaps, but in Willoughby? You’d be wasting $30. LOL.

If you don’t know Willoughby, there is only one in the U.S. and three in the world.

Well, it’s nice to know; however, the AirTag is not really a new idea.

I mean, you need another Apple device to find your device. And if a thief finds an AirTag, he can remove it or throw it in the bin.