A faulty software update from cybersecurity vendor Crowdstrike crippled countless Microsoft Windows computers across the globe today, disrupting everything from airline travel and financial institutions to hospitals and businesses online. Crowdstrike said a fix has been deployed, but experts say the recovery from this outage could take some time, as Crowdstrike’s solution needs to be applied manually on a per-machine basis.

A photo taken at San Jose International Airport today shows the dreaded Microsoft “Blue Screen of Death” across the board. Credit: Twitter.com/adamdubya1990

Earlier today, an errant update shipped by Crowdstrike began causing Windows machines running the software to display the dreaded “Blue Screen of Death,” rendering those systems temporarily unusable. Like most security software, Crowdstrike requires deep hooks into the Windows operating system to fend off digital intruders, and in that environment a tiny coding error can quickly lead to catastrophic outcomes.

In a post on Twitter/X, Crowdstrike CEO George Kurtz said an update to correct the coding mistake has been shipped, and that Mac and Linux systems are not affected.

“This is not a security incident or cyberattack,” Kurtz said on Twitter, echoing a written statement by Crowdstrike. “The issue has been identified, isolated and a fix has been deployed.”

Posting to Twitter/X, the director of Crowdstrike’s threat hunting operations said the fix involves booting Windows into Safe Mode or the Windows Recovery Environment (Windows RE), deleting the file “C-00000291*.sys” and then restarting the machine.
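For readers scripting this step across many machines, here is a minimal Python sketch of the file-matching part of the fix. It assumes the channel files sit in `C:\Windows\System32\drivers\CrowdStrike`, the commonly cited default location (verify it on your own systems). The function name `remove_bad_channel_files` is hypothetical. On an affected machine the deletion still has to happen from Safe Mode or Windows RE, where Python may not be available at all. Windows file-name matching is case-insensitive, while `Path.glob` on other platforms is case-sensitive.

```python
from pathlib import Path

# Assumption: commonly cited default folder for Falcon channel files.
DEFAULT_DIR = Path(r"C:\Windows\System32\drivers\CrowdStrike")
# File pattern quoted in the published fix.
PATTERN = "C-00000291*.sys"


def remove_bad_channel_files(directory: Path = DEFAULT_DIR, dry_run: bool = True) -> list[Path]:
    """Return files in `directory` matching PATTERN; delete them unless dry_run.

    Hypothetical helper for illustration only.
    """
    matches = sorted(p for p in directory.glob(PATTERN) if p.is_file())
    if not dry_run:
        for path in matches:
            path.unlink()  # permanently removes the file
    return matches
```

The default is a dry run that only reports matches. Passing `dry_run=False` is destructive.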

The software snafu may have been compounded by a recent series of outages involving Microsoft’s Azure cloud services, The New York Times reports, although it remains unclear whether those Azure problems are at all related to the bad Crowdstrike update. Update, 4:03 p.m. ET: Microsoft reports the Azure problems today were unrelated to the bad Crowdstrike update.

A reader shared this photo taken earlier today at Denver International Airport. Credit: Twitter.com/jterryy07

Matt Burgess at Wired writes that within health care and emergency services, various medical providers around the world have reported issues with their Windows-linked systems, sharing news on social media or their own websites.

“The US Emergency Alert System, which issues hurricane warnings, said that there had been various 911 outages in a number of states,” Burgess wrote. “Germany’s University Hospital Schleswig-Holstein said it was canceling some nonurgent surgeries at two locations. In Israel, more than a dozen hospitals have been impacted, as well as pharmacies, with reports saying ambulances have been rerouted to nonimpacted medical organizations.”

In the United Kingdom, NHS England has confirmed that appointment and patient record systems have been impacted by the outages.

“One hospital has declared a ‘critical’ incident after a third-party IT system it used was impacted,” Wired reports. “Also in the country, train operators have said there are delays across the network, with multiple companies being impacted.”

Reactions to today’s outage were swift and brutal on social media, which was flooded with images of people at airports surrounded by computer screens displaying the Microsoft blue screen error. Many Twitter/X users chided the Crowdstrike CEO for failing to apologize for the massively disruptive event, while others noted that doing so could expose the company to lawsuits.

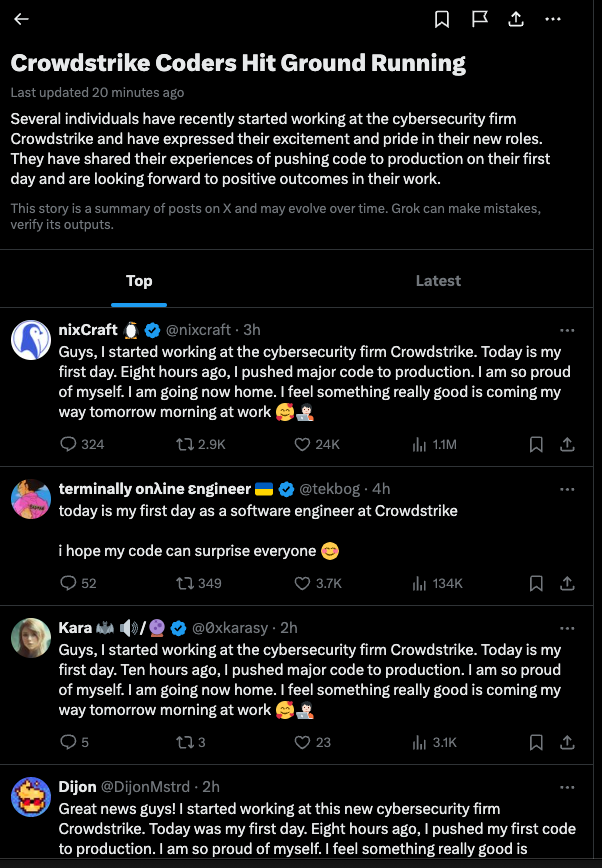

Meanwhile, the international Windows outage quickly became the most talked-about subject on Twitter/X, whose artificial intelligence bots collated a series of parody posts from cybersecurity professionals pretending to be in their first week of work at Crowdstrike. Incredibly, Twitter/X’s AI summarized these sarcastic posts into a sunny, can-do story about Crowdstrike that was promoted as the top discussion on Twitter this morning.

“Several individuals have recently started working at the cybersecurity firm Crowdstrike and have expressed their excitement and pride in their new roles,” the AI summary read. “They have shared their experiences of pushing code to production on their first day and are looking forward to positive outcomes in their work.”

The top story today on Twitter/X, as brilliantly summarized by X’s AI bots.

This is an evolving story. Stay tuned for updates.

Just call it X

I am a Certified Healthcare CIO, so I have years of experience in this field. There is a lot of friction between the “new” generation of IT leaders and my generation of IT leaders. My generation has always put a lot of effort into testing and regression testing of code and patches, resulting in longer patch cycles. New DevOps teams want to patch more often with less testing. Both approaches have their pros and cons. This is an example of the “old” way of testing being more appropriate. In my world, if we had 10 variants of Windows operating systems (Windows 10, release xxxxxx, Windows 11, release xxxxxx), I would require 1,000 tests to be run, 100 on each variant, before we released to PROD. In today’s DevOps world, it is common to minimize testing and just fix “whatever breaks” on the back end, after the fact. The reality is that we need to meet in the middle between these two processes and come up with something that prevents this from happening.
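The test matrix described above (10 Windows variants × 100 tests each = 1,000 runs before PROD) can be sketched in Python as a cross product plus an all-must-pass release gate. The variant and test names are placeholders. Treating a missing result as a failure is an assumption about how such a gate would be configured.

```python
from itertools import product


def build_test_matrix(variants: list[str], test_ids: list[str]) -> list[tuple[str, str]]:
    """Pair every OS variant with every test case (a full cross product)."""
    return list(product(variants, test_ids))


def release_allowed(results: dict[tuple[str, str], bool], matrix: list[tuple[str, str]]) -> bool:
    """Allow a PROD release only if every (variant, test) cell ran and passed.

    A cell with no recorded result counts as a failure.
    """
    return all(results.get(cell, False) for cell in matrix)
```

With 10 variants and 100 tests, `build_test_matrix` yields 1,000 cells, and a single failing or missing cell blocks the release.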

There is truly no excuse for this. IMO, the CIO, CTO, and CEO need to be held accountable for this, as this is one of the most basic process issues in any technology department, to say nothing of a technology company.

I’m not an IT pro of any kind, so it’s good to know that my thinking on your last paragraph lines up with that of someone so experienced.

As for being held “accountable”, is that likely to happen?

It never does in the case of breaches, even though the cause is almost always data that was left unsecured.

Years of man-hours look to be required for the fix.

I hope this never happens again. This affects a lot of people.

As I understand (from other reports) the update wasn’t even a properly configured driver file. So much for testing!

Honestly, I can’t see CrowdStrike recovering. The lawsuits will run into the billions.

Systems with BitLocker installed are making recovery a lot more difficult than just deleting a file.

Any encrypted drive is a mess to recover. A lot of corporations are struggling with this. Whole departments have had their entire inventory of PCs blue-screened. On Friday, I worked with a group that was sharing the only PC still working. I think it had been wiped and was not yet provisioned for a new employee.

“Any encrypted drive is a mess to recover.”

That’s precisely why I only use encrypted drives on my laptops. If someone steals my laptop, they won’t be able to get anything from the drives. And I will never need to recover them — at worst, I just reinstall and move on. They are usually nothing more than a means to access my more important computers.

This still could be a security incident or cyber attack, even one gone bad. Who is telling us it was due to a flawed software update? The company responsible for preventing the cyber attack.

And to refute the “not a security incident” claim: we were told that cybersecurity was built on the triad of Confidentiality, Availability, and Integrity. In this instance one might argue that Confidentiality and Integrity weren’t impacted (as your comment suggests, that is still to be determined), but Availability was blown away. But hey, that’s perfect security, isn’t it?

The bug may have existed in the driver for the last few versions and gone undetected until they happened to push out a file containing only null bytes. This should never have happened, but it’s probably a good thing it was discovered before someone leveraged it for malicious purposes.
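The kind of sanity check the commenter implies was missing can be sketched as a small Python validator that flags an empty or all-null content file before anything tries to parse it. The function name and the optional `magic` header check are hypothetical; Crowdstrike’s actual content-file format is not described in this discussion.

```python
from typing import Optional


def content_file_problem(data: bytes, magic: Optional[bytes] = None) -> Optional[str]:
    """Return a reason string if the content update is obviously unusable, else None.

    `magic` is a hypothetical file signature; pass None to skip that check.
    """
    if not data:
        return "file is empty"
    if not any(data):  # iterating over bytes yields ints; any() is False only if all are 0
        return "file contains only null bytes"
    if magic is not None and not data.startswith(magic):
        return "unexpected file header"
    return None
```

A release pipeline (or the component loading the file) would refuse any file for which this returns a reason.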

You are making a great point.

I’m skeptical about those X comments saying it’s their first day. They sound like bot accounts repeating the same thing.

read much? they are bots, ya douche

Not all were bots; a lot of them were parody posts by cybersecurity professionals, everyone just having a laugh at CrowdStrike’s expense.

Hey “ANDY”. No need to be, as you say, a “douche” and call Stratus out with that tone. Keep this pleasant and helpful.

Did anyone look at the Event Viewer (eventvwr) logs on a PC that received the malformed C-00000291*.sys file?

Does Falcon load and use it right away, or does the machine have to reboot first?

Falcon doesn’t require reboots for driver or definition updates, which is one of its strengths. The downside is that, to provide this protection, the agent has to be able to update itself in real time, so even though the file was only being pushed for approximately 1.5 hours, a large number of computers picked it up in that time. This also affected people on N-2 or older versions of the agent, as the bug had been in the driver for some time.
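The trade-off described here (updates take effect without a reboot, so a bad file spreads fast) is often paired with a last-known-good fallback. The Python sketch below is a hypothetical illustration of that pattern, not a description of how Falcon works: new content takes effect immediately only if it passes a validator; otherwise the previous content stays active. `ContentSlot` and `basic_check` are invented names.

```python
from typing import Callable, Optional


def basic_check(data: bytes) -> None:
    """Minimal stand-in validator: reject empty or all-zero content."""
    if not data or not any(data):
        raise ValueError("unusable content")


class ContentSlot:
    """Hypothetical agent-side holder for hot-loaded content.

    New content takes effect immediately (no reboot), but only after it passes
    validation; otherwise the last known-good content stays active.
    """

    def __init__(self, validator: Callable[[bytes], None] = basic_check):
        self._validator = validator
        self.active: Optional[bytes] = None
        self.rejected_updates = 0

    def apply(self, data: bytes) -> bool:
        try:
            self._validator(data)
        except ValueError:
            self.rejected_updates += 1
            return False  # keep serving the previous (last known-good) content
        self.active = data
        return True
```

The design keeps the speed of real-time updates while making a single bad file a rejected update rather than a crash, at least for the failure modes the validator can see.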

Please read point 8.6 of their TOS before claiming them “responsible”: https://www.crowdstrike.com/terms-conditions/

Responsible are the nitwits who installed this crap on critical systems!!!

It says: THE OFFERINGS AND CROWDSTRIKE TOOLS ARE NOT FAULT-TOLERANT AND ARE NOT DESIGNED OR INTENDED FOR USE IN ANY HAZARDOUS ENVIRONMENT REQUIRING FAIL-SAFE PERFORMANCE OR OPERATION. NEITHER THE OFFERINGS NOR CROWDSTRIKE TOOLS ARE FOR USE IN THE OPERATION OF AIRCRAFT NAVIGATION, NUCLEAR FACILITIES, COMMUNICATION SYSTEMS, WEAPONS SYSTEMS, DIRECT OR INDIRECT LIFE-SUPPORT SYSTEMS, AIR TRAFFIC CONTROL, OR ANY APPLICATION OR INSTALLATION WHERE FAILURE COULD RESULT IN DEATH, SEVERE PHYSICAL INJURY, OR PROPERTY DAMAGE.

whoever installed this NOT FAULT-TOLERANT crap on critical systems should be held responsible!

Such entertaining moments. I love it.

Taking a few steps back and analyzing the whole thing:

– Management that certifies these gadgets without deep knowledge and hands-on experience only makes stupid decisions.

– Any system without fuses and feedback will fail. Especially Microsoft. Especially monocultures.

– You don’t have a backup system or an alternative system? Your problem.

– Look at China. Maybe the new Mao Tse-tung OS is better. We have thousands of experts who are good for nothing.

– Forced updates are a planned disaster.

– The next time it happens (during peacetime), it will be more expensive and more disruptive.

Amen

Hehehe, CIA rootkits such as the one under discussion cause more harm than all the Russian hackers put together. A cloud-based “antivirus” running with admin privileges, which records everything users do and sends that information to the cloud, is by definition a spying trojan. But the really interesting thing is not that this rootkit finally exposed itself in the worst possible way; it is who made admins at multibillion-dollar companies all over the world install spy software on their networks, and how. If it had been up to them, they would 100% not have done something so obviously harmful, openly leaking all company data to a third-party vendor.

Drink faster, comrade. Vodka evaporates in rare American air.

I hope Crowdstrike also fires the Sales & Marketing executives that have probably been screaming that the new “features” they have been promising need to go out faster. Also, developers, “Yeah, I checked the code” is not the equivalent of actually running a battery of regression tests…

Anyone ever wonder why Google never has issues? Cyberspace is controlled by Google.

Just wait until something like this happens with a widespread AI implementation…I’m stocking up the Cabin in the Woods.

Interrogative: “How can we quickly reduce the levels of global warming?”

Enhanced/Next Generation AI: “Well, it would sure help if there were fewer humans… let me throw some switches…”

And just think, they are actually trying to build the equivalent of Skynet and interconnect it. Somebody didn’t watch the Terminator movies…

“Incredibly, Twitter/X’s AI summarized these sarcastic posts into a sunny, can-do story about Crowdstrike.” I think you got the wrong adjective there; what you wanted was “predictably.”

Update, 4:03 p.m. ET: Microsoft reports the Azure problems today were unrelated to the bad Crowdstrike update…

This goes to a default page, and nothing is publicly available.

Unable to reply to Kent Brockman directly, so here goes: you should grow your own food if you are in the business of apology extortion. Otherwise, have some shame and let them work on the fix.

CrowdStrike is now almost as well known as AC/DC’s Thunderstruck.

But CrowdStrike is definitely not very entertaining whereas AC/DC is.

At my work, IF we were diligent in the past and provided an emergency number for text alerts, we got links to Zoom calls to try to get this fixed.

Imagine a LOT of Zoom calls with hundreds of people entering names and machine numbers. Imagine scores of techs rapidly running searches against a single shared Excel file covering well over ten thousand employees, looking up BitLocker keys so end users can self-help (with a separate Zoom call or breakout room for people who get stuck on that). The keys are then pasted into chat with the user name. Things quickly slowed to a crawl. (Why not make copies of the spreadsheet? Security reasons?)

Imagine end users missing their key because they aren’t paying attention (fair; wait times were long) or can’t follow simple instructions (e.g., repeatedly entering their names and machine numbers, resulting in even MORE search requests to a system that’s already addled by incoming searches).

Shoutout to the constructive people that actually activated their audio to… tell everyone they didn’t like this, and they’re mad. I’m paraphrasing. Also censoring.

That said, it could’ve been worse. Things are nearly normal today.
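The self-help process described above depended on users reading and typing long keys correctly. A BitLocker recovery password is 48 digits in eight dash-separated groups of six. Independent analyses of the format also describe each group as a multiple of 11 below 720,896 (11 × 65,536); treat that second rule as an assumption to verify. A minimal Python format check that a self-help page could run before a user types the key at the recovery prompt might look like this. `is_plausible_recovery_password` is a hypothetical name, and passing the check only means the string is well-formed, not that it unlocks a given drive.

```python
import re

# Eight dash-separated groups of six ASCII digits.
_FORMAT = re.compile(r"[0-9]{6}(?:-[0-9]{6}){7}")
# Assumption from independent format analyses: each group is 11 * n with n < 65,536.
_GROUP_LIMIT = 11 * 65536  # 720,896


def is_plausible_recovery_password(text: str) -> bool:
    """Return True if `text` is shaped like a BitLocker recovery password.

    Format check only: it cannot tell whether the key unlocks a particular drive.
    """
    candidate = text.strip()
    if _FORMAT.fullmatch(candidate) is None:
        return False
    for group in candidate.split("-"):
        value = int(group)
        if value % 11 != 0 or value >= _GROUP_LIMIT:
            return False
    return True
```

A single mistyped digit almost always breaks the divisible-by-11 rule for its group, so typos can be caught before they cost another trip through the queue.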

In your post, GovernmentThings, you just predicted that things WILL get worse, IMHO: “The keys are then pasted into chat with the user name.” My recommendation is to plan accordingly…

https://www.itpro.com/security/cyber-crime/hackers-are-creating-fake-crowdstrike-recovery-resources-to-trick-businesses-into-loading-malware-onto-their-network

After learning that the definitions file pushed out by CrowdStrike was filled with zeros, and that this caused the kernel-mode driver to crash and BSOD Windows, I suspect that many other kernel-level security systems and drivers will get close inspection by the bad guys. They are probably drooling at the thought of achieving RCE at the kernel level.

Let’s see how many mistakes were made here: writing a kernel driver that doesn’t check its inputs; sending out an update without testing it; installing updates without testing them (or building a product that doesn’t let customers do that, I don’t know); using third-party systems for critical infrastructure; not having proper fallback machines; not having proper resilience planning. And that’s just off the top of my head.
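“Checking its inputs” is easiest to see with a parser. The Python sketch below parses an invented length-prefixed record format (it is not Crowdstrike’s channel-file format) and verifies every count and length against the buffer before reading, so an all-zero, truncated, or padded file is rejected with an error. In Python an unchecked slice would silently truncate; in C the same omission can mean reading past the end of the buffer. `parse_records`, `ParseError`, and the layout are hypothetical.

```python
import struct


class ParseError(ValueError):
    """Raised when the input does not match the expected layout."""


def parse_records(buf: bytes, max_records: int = 1024) -> list[tuple[int, bytes]]:
    """Parse a hypothetical length-prefixed format, checking every bound first.

    Layout (invented for illustration), all integers little-endian:
      u16 record_count, then record_count x (u8 type, u16 length, payload).
    """
    if len(buf) < 2:
        raise ParseError("buffer too short for header")
    (count,) = struct.unpack_from("<H", buf, 0)
    # Hypothetical policy: an empty or oversized record table is treated as corrupt.
    if count == 0 or count > max_records:
        raise ParseError(f"implausible record count {count}")
    offset = 2
    records = []
    for _ in range(count):
        if offset + 3 > len(buf):
            raise ParseError("truncated record header")
        rtype, length = struct.unpack_from("<BH", buf, offset)
        offset += 3
        if offset + length > len(buf):
            raise ParseError("record length runs past end of buffer")
        records.append((rtype, buf[offset:offset + length]))
        offset += length
    if offset != len(buf):
        raise ParseError("trailing bytes after last record")
    return records
```

Under this layout, a file of nothing but null bytes fails at the first check because it declares zero records.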