Facebook has built some of the most advanced algorithms for tracking users, but when it comes to acting on user abuse reports about Facebook groups and content that clearly violate the company’s “community standards,” the social media giant’s technology appears to be woefully inadequate.

Last week, Facebook deleted almost 120 groups totaling more than 300,000 members. The groups were mostly closed — requiring approval from group administrators before outsiders could view the day-to-day postings of group members.

However, the titles, images and postings available on each group’s front page left little doubt about their true purpose: Selling everything from stolen credit cards, identities and hacked accounts to services that help automate things like spamming, phishing and denial-of-service attacks for hire.

To its credit, Facebook deleted the groups within just a few hours of KrebsOnSecurity sharing via email a spreadsheet detailing each group, which showed that the groups had been active on Facebook for an average of two years. But I suspect that the company took this extraordinary step mainly because I informed them that I intended to write about the proliferation of cybercrime-based groups on Facebook.

That story, Deleted Facebook Cybercrime Groups had 300,000 Members, ended with a statement from Facebook promising to crack down on such activity and instructing users on how to report groups that violate its community standards.

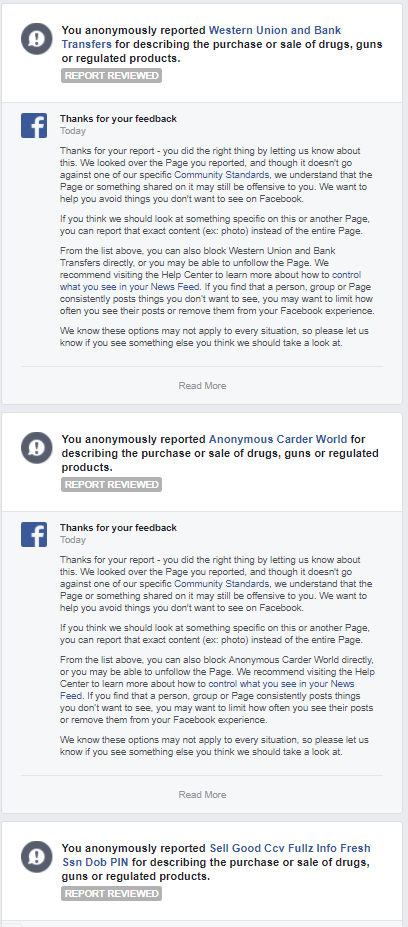

In short order, some of the groups I reported that were removed re-established themselves within hours of Facebook’s action. Instead of contacting Facebook’s public relations arm directly, I decided to report those resurrected groups and others using Facebook’s stated process. Roughly two days later I received a series of replies saying that Facebook had reviewed my reports but that none of the groups were found to have violated its standards. Here’s a snippet from those replies:

Perhaps I should give Facebook the benefit of the doubt: Maybe my multiple reports one after the other triggered some kind of anti-abuse feature that is designed to throttle those who would seek to abuse it to get otherwise legitimate groups taken offline — much in the way that pools of automated bot accounts have been known to abuse Twitter’s reporting system to successfully sideline accounts of specific targets.

Or it could be that I simply didn’t click the proper sequence of buttons when reporting these groups. The closest matches I could find in Facebook’s abuse reporting system were “Doesn’t belong on Facebook” and “Purchase or sale of drugs, guns or regulated products.” There was/is no option for “selling hacked accounts, credit cards and identities,” or anything of that sort.

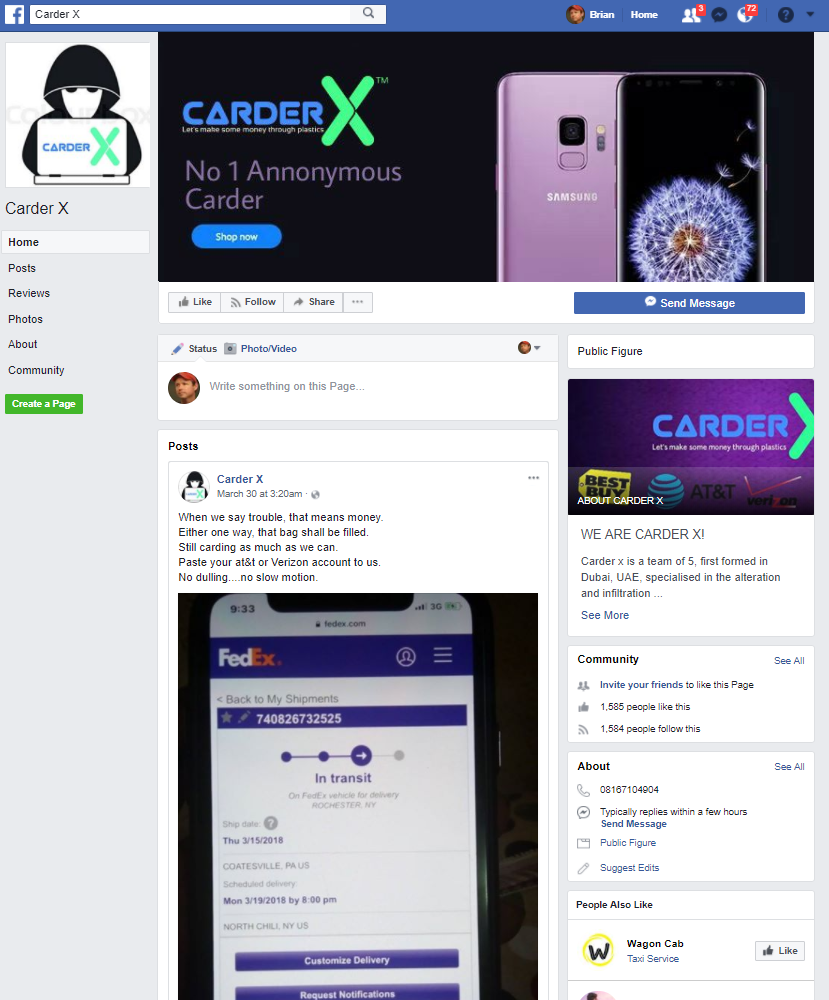

In any case, one thing seems clear: Naming and shaming these shady Facebook groups via Twitter seems to work better right now for getting them removed from Facebook than using Facebook’s own formal abuse reporting process. So that’s what I did on Thursday. Here’s an example:

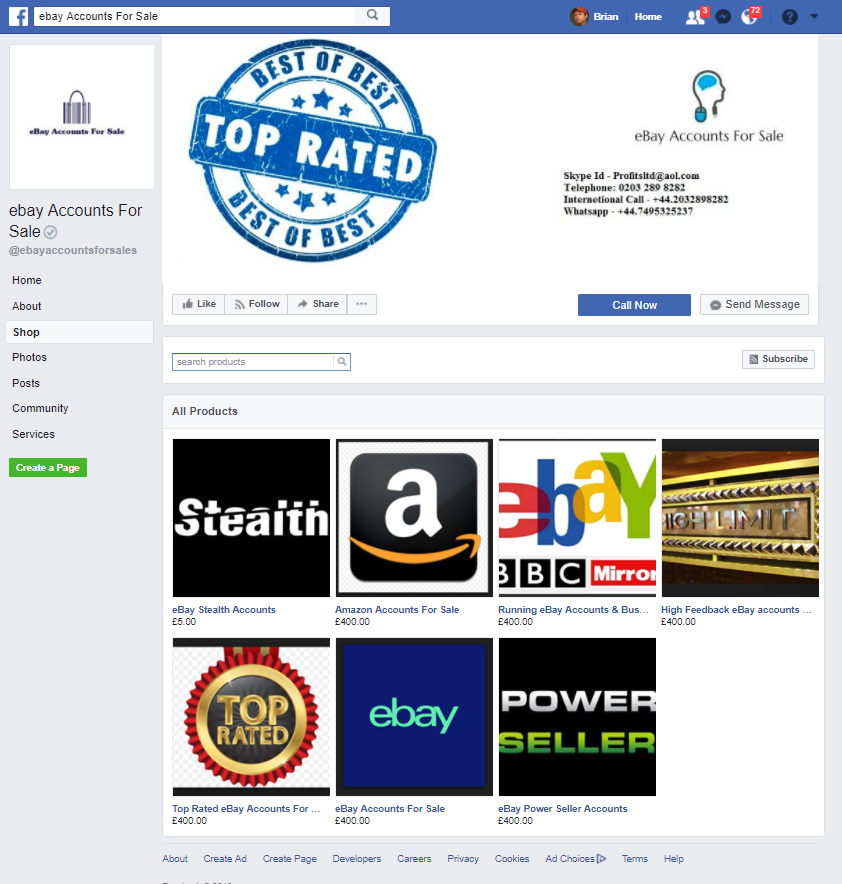

Within minutes of my tweeting about this, the group was gone. I also tweeted about “Best of the Best,” which was selling accounts from many different e-commerce vendors, including Amazon and eBay:

That group, too, was nixed shortly after my tweet. And so it went for other groups I mentioned in my tweetstorm today. But in response to that flurry of tweets about abusive groups on Facebook, I heard from dozens of other Twitter users who said they’d received the same “does not violate our community standards” reply from Facebook after reporting other groups that clearly flouted the company’s standards.

Pete Voss, Facebook’s communications manager, apologized for the oversight.

“We’re sorry about this mistake,” Voss said. “Not removing this material was an error and we removed it as soon as we investigated. Our team processes millions of reports each week, and sometimes we get things wrong. We are reviewing this case specifically, including the user’s reporting options, and we are taking steps to improve the experience, which could include broadening the scope of categories to choose from.”

Facebook CEO and founder Mark Zuckerberg testified before Congress last week in response to allegations that the company wasn’t doing enough to halt the abuse of its platform for things like fake news, hate speech and terrorist content. It emerged that Facebook already employs 15,000 human moderators to screen and remove offensive content, and that it plans to hire another 5,000 by the end of this year.

“But right now, those moderators can only react to posts Facebook users have flagged,” writes Will Knight, for Technologyreview.com.

Zuckerberg told lawmakers that Facebook hopes advances in artificial intelligence (“AI”) technology will soon help the social network do a better job of self-policing against abusive content. But for the time being, as long as Facebook acts on abuse reports mainly when it is publicly pressured to do so by lawmakers or by people with hundreds of thousands of followers, the company will continue to be dogged by a perception that doing otherwise is simply bad for its business model.

Update, 1:32 p.m. ET: Several readers pointed me to a Huffington Post story published just three days ago, “Facebook Didn’t Seem To Care I Was Being Sexually Harassed Until I Decided To Write About It,” about a journalist whose reports of extreme personal harassment on Facebook were met with a similar response about not violating the company’s Community Standards. That is, until she told Facebook that she planned to write about it.

Isn’t it amazing what sunlight will do to creatures of the night?

Why not require two-factor verification of page authors once a week or once a month? If they do not respond as a human, the account is deactivated. When reports are made, send these humans a notice that a report was made and let them respond. Like other sites, create a feedback rating.

I don’t see how this will help. Some of us take vacations for periods up to a month or even three without technology.

Pings like this would just result in “customers” leaving Facebook, which isn’t a goal Facebook has.

There are certainly things Facebook can do to improve matters, but this isn’t one of them.

I think it’s naive to think AI will somehow fix this issue anytime soon, if ever.

The world is full of bad actors. Build a massive platform, and they will come. AI isn’t going to outsmart all of them. Trying to filter them by hand is little more than a game of whack-a-mole. Seriously, does anyone really think they are going to eliminate all the trash on a platform this large?

I have never been harassed on the facebags, and they have never sold, lost, etc. my information either.

My secret, never join it!

“My secret, never join it!”- Alex

That is good advice. Once you have joined FaceCrook you have joined a Roach Motel.

Refusing to join Facebook because joining it means checking into a Roach Motel is even more naive than the claim that not joining is the solution.

I hate to break it to you, but Facebook’s “Roach Motel” status has nothing to do with Facebook being “FaceCrook”, and everything to do with the human race being filled with degenerate followers who can’t think for themselves or who simply don’t care about their fellow human beings. Whether you join Facebook or not, you’re already a part of that “Roach Motel”, so this suggestion is not a solution, it’s folly disguised as smug superiority.

And not joining Facebook is not the solution to avoiding harassment or being otherwise victimized either, as these things happen to plenty of people who aren’t on social media. It might make you feel better not to visit the “bad parts of town” on the internet, but that does not deal with the people causing the problems on the internet, and those people will find you in any “safe” corner of your internet world. Thinking this would protect you in any way may be comforting, but it’s a delusion.

I have turned in pages with death threats against people of certain religions only to be told they meet community standards. I have turned in hacked accounts only to be told to just block the person. As a corporate webmaster and IT manager, I can tell Facebook that until they turn to an old-fashioned security desk, with people reviewing complaints against criteria set by the company (no personal opinion involved), they will continue to fail society. The final answer will be a migration to another platform with civilized rules.

In my opinion, Facebook is broken. It claims to be a social media site, but, until now, it has had no responsibility, social or otherwise, except to figure out ways to monetize its users.

Regards,

Q: “Is Facebook’s Anti-Abuse System Broken?”

A: No. It never worked in the first place, so it did not become “broken”, which would have been a change from its original state of existence.

Seems to me, one effective way for FB to handle such bad actors on its site is to give *verified* and trusted security experts such as Krebs the benefit of the doubt and automatically remove any FB account reported by Krebs and other verified reporters.

That will work for 5 minutes, until their Facebook accounts are hijacked.

After reading this post, I decided to do some searching myself. I searched for “FRESH RDP,” “fullz,” “cc” and “logins” and, like Brian, was told that what I reported did not violate the standards. And there is no option for reporting the kind of criminal activity those searches turned up.

I wonder if local/state/federal law enforcement agencies were to find these bad actors, and then include FB as a co-conspirator, they might add some other categories to their pitiful reporting tool? Because, and any legal eagles out there correct me if I am wrong, are they not aiding and abetting by not removing them? Oh wait, this is about how much data FB can scrape and then sell. Illegal activities are not their concern.

Not a lawyer, but they can’t be prosecuted unless they’re deliberately made aware of such activities and take no action. This is what the provision known as “Safe Harbor” refers to. If they are aware of it, and take action, they cannot be held liable.

I can only imagine the issues they face here, though I can see they are failing miserably with their solution.

I am an admin of a few Facebook groups and a member of groups for other admins like myself, and the stories from admins who have had their personal accounts or groups shut down are frequent and baffling. In these admin groups there are Facebook employees who can respond to the reports and resolve issues, but this only underlines the main issue: automated filtering is broken or inadequate, and human intervention is currently the only solution. I’d imagine there are similarities and differences with Google’s spam filters in Gmail. But from my perspective, the near-complete absence of spam in my inbox, set against the glut of fake accounts and bad actors operating with impunity on the Facebook platform, tells me the security teams at these respective giants are of vastly different capabilities.

And then there’s Amazon:

http://mebfaber.com/2018/04/18/how-to-launder-money-with-amazon-aka-why-all-my-books-are-now-free/

FWIW, I regularly report Facebook accounts that spam my public posts. I think about 1/3 of them get canceled, 1/6 get “investigated”, and the other 1/2 are “acquitted”.

The most effective way to deal w/ companies is via Twitter.

The problem w/ Facebook’s automated reporting system is that it’s automated. It goes through some form of heuristic, and it’s very detached from the problem space.

E.g. I’m trying to report that an account is fake (it posts spammy links to movie sites as comments on my public posts). There’s no way for me to report the account with the context of the comment. And I don’t want to leave the public comment up, so after I report the account, I delete the comment.

For Brian’s case, it should be easier for an automated system to correctly reach the right conclusion, but it’s pretty clear that the pieces aren’t sufficiently identifiable for the program…

In my case, I don’t care enough about the accounts to use Twitter to get them deleted, they aren’t sufficiently bad for me to set up a Twitter, and it’d be clearly related to my Facebook account…

There was an interesting study put out on this type of abuse on social media a couple years ago. You can check it out here. https://www.rsa.com/content/dam/en/white-paper/hiding-in-plain-sight-the-growth-of-cybercrime-in-social-media-part-1.pdf

I’m sorry that Emperor Trump’s Dank Stash Memes group is so disgusting in their speech, truly, but as for your part, focus on what you can actually do instead of whining about everybody else: don’t make things public to everyone if you don’t want everyone to see them or can’t trust everyone with them.

You put photos out there when you have no control over what other people do to them. How people don’t think about this before they post anything in 2018 is beyond me. Why didn’t she claim copyright or have whoever took it claim copyright? It would have come right down. But the word copyright is not in the HP article, period, and this writer benefits from the emotional appeal of ‘look what horrible things these horrible people said to me, horrible’.

You don’t see Mr. Krebs out there complaining about how his website host, by virtue of posting his website and articles, makes him a target for kiddies ddos’ing and demanding his website host change their privacy rules because some idiot kids thought ddos’ing funny, right? No, no, you don’t, because he’s an adult and knows where the blame goes; right smack on the person committing the act.

People have done the hot-or-not thing to celebrities since the beginning of time, but she only had a problem with it once it happened to her. Or maybe we should make that illegal and/or against the TOS since it hurts their feelings?

In many jurisdictions it’s illegal to sell stolen stuff, or even offer to sell it.

Back in the day of newspapers, the people who accepted classified advertising were trained. If a would-be advertiser asked for an advert saying “oxy 80s for sale cheap. fell off back of truck,” the advert-taker would say “no,” and maybe even call a police detective.

The same is true for “help wanted. only young white men need apply.” The advert-taker would refuse.

FB and Google have “disrupted” that business. A big part of their disruption has been eliminating or deskilling the jobs of people who vetted ads.

It doesn’t seem unreasonable to hold large wealthy companies financially accountable for each instance of promoting carding or selling other stolen goods. It seems reasonable to offer 10% of each fine to a citizen who provides information to authorities leading to assessing that fine. (That has precedent: it’s how the US Environmental Protection Agency multiplied their force to eliminate large-scale pollution in major rivers like the Hudson.)

Now, if the billionaire companies were smart, they themselves would offer $100 to users who reported illegal stuff. This would give them the internal incentive to find it themselves, and to make their internal systems, both software and people, work better.

(There are certainly problems with vigilante enforcement like I’m proposing. But there are problems with the current lack of enforcement too.)

I logged onto my Facebook account for the first time in eight years today. I can only describe the experience as like being sucked into a virtual swamp. There are data receptors everywhere. It was impossible to keep reliable track of my doings while in there. Flagging stuff as private seems to be made deliberately difficult, as the privacy of each individual entry must be separately switched, or so it seems. I could well believe that Facebook has been expressly designed to behave in a way which maximises the extraction of personal data from users while obfuscating the employment of privacy controls. The POTUS talked about draining the swamp. How well he could have applied this to Facebook.

Facebook profit 2017: $16 Billion.

5000 moderators at $50,000 per year = 1/4 of a billion.

Remaining profit: $15.75 billion.

Tough times for Facebook.

Best wait for AI.

They put more importance on shutting down accounts and removing comments for “sticks and stones” hate speech than actual posts that could cause actual harm physically or financially. You can blame the liberals for that. They are more worried about being offended than being robbed.

I’ve reported outright hate speech and was told that it was in conformance with community standards. Apparently stating that you want to kill all N-word people is okay on FB.

I wonder whether the opposite is also true: reporting Twitter abuses on Facebook to get Twitter to finally remove them. Reporting them directly to Twitter doesn’t seem to work.

Motherboard published a similar story today. There’s no way to point them to this one in the comments.

I think this is one area where we need more, and effective, smart, government regulation. I can’t say what that regulation would involve, but clearly a well-funded corporate business has failed in its social responsibility.

So, I called my Congressional representatives (at their district offices, which seems to work better) and spoke with staffers about this report by Brian Krebs. (I was ready with a short speech in case I reached an answering machine.)

They listened, they sounded like they understood, and I asked that perhaps staff could find this page and learn how Facebook’s anti-abuse “system” is not working. The URL is a bit long, but Googling for

krebs facebook broken

brings up this page.

One of them pointed out that the Representative had asked Zuckerberg some constituents’ questions. I’ll go look that up right now….

I’m not sure we need more stringent privacy regulations here in the U.S. Sometimes just the credible threat of regulation forces companies to do what they know they should (but otherwise won’t because it might harm profits). That said, it would be nice if we had any real or serious conversations at the legislative level to at least provide this threat. Right now, I’m really not seeing any indication that this is happening.

Regulation historically has served to protect established companies and discourage innovation.

Facebook established itself largely in the absence of stifling regulations. Now that it is in the prime position, it’ll do just fine if there’s new regulations.

What will suffer, though, are less established competitors and innovators in the same field, if new regulations are imposed.

Knowing this, Zuckerberg sat before the Senate committees inviting new regulations.

We should be careful calling these systems AI just because those who own them call them that.

As this case illustrates they are often AS. Artificial Stupidity.

Similarly, I think of “smartphones” as “dumbphones” often. I’ve had sooo many problems with them. Great when they work.