Facebook has built some of the most advanced algorithms for tracking users, but when it comes to acting on user abuse reports about Facebook groups and content that clearly violate the company’s “community standards,” the social media giant’s technology appears to be woefully inadequate.

Last week, Facebook deleted almost 120 groups totaling more than 300,000 members. The groups were mostly closed — requiring approval from group administrators before outsiders could view the day-to-day postings of group members.

However, the titles, images and postings available on each group’s front page left little doubt about their true purpose: Selling everything from stolen credit cards, identities and hacked accounts to services that help automate things like spamming, phishing and denial-of-service attacks for hire.

To its credit, Facebook deleted the groups within just a few hours of KrebsOnSecurity sharing via email a spreadsheet detailing each group, which concluded that the average length of time the groups had been active on Facebook was two years. But I suspect that the company took this extraordinary step mainly because I informed them that I intended to write about the proliferation of cybercrime-based groups on Facebook.

That story, Deleted Facebook Cybercrime Groups had 300,000 Members, ended with a statement from Facebook promising to crack down on such activity and instructing users on how to report groups that violate its community standards.

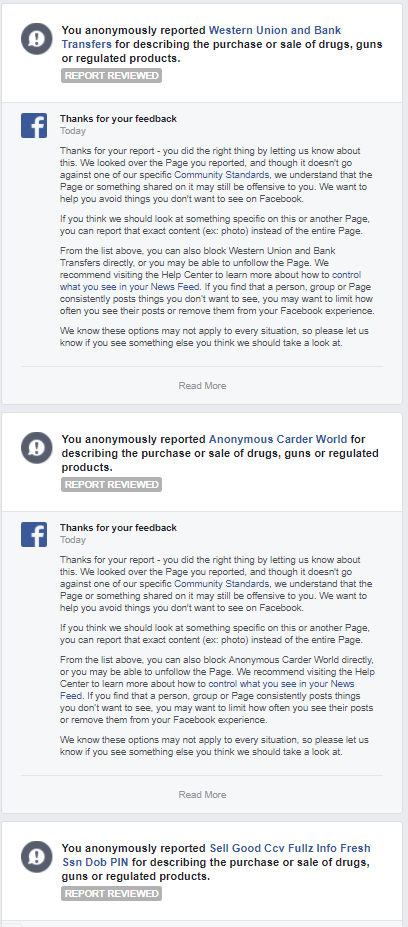

In short order, some of the groups I reported that were removed re-established themselves within hours of Facebook’s action. Instead of contacting Facebook’s public relations arm directly, I decided to report those resurrected groups and others using Facebook’s stated process. Roughly two days later I received a series of replies saying that Facebook had reviewed my reports but that none of the groups were found to have violated its standards. Here’s a snippet from those replies:

Perhaps I should give Facebook the benefit of the doubt: Maybe my multiple reports one after the other triggered some kind of anti-abuse feature that is designed to throttle those who would seek to abuse it to get otherwise legitimate groups taken offline — much in the way that pools of automated bot accounts have been known to abuse Twitter’s reporting system to successfully sideline accounts of specific targets.

Or it could be that I simply didn’t click the proper sequence of buttons when reporting these groups. The closest matches I could find in Facebook’s abuse reporting system were “Doesn’t belong on Facebook” and “Purchase or sale of drugs, guns or regulated products.” There was/is no option for “selling hacked accounts, credit cards and identities,” or anything of that sort.

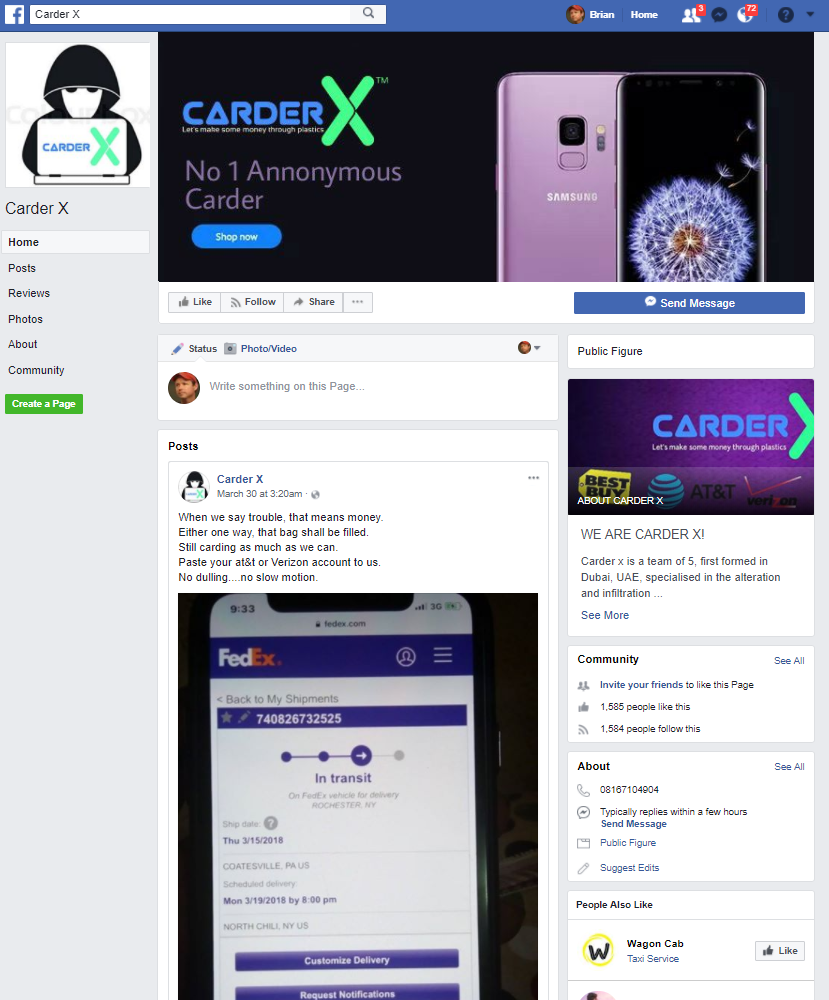

In any case, one thing seems clear: Naming and shaming these shady Facebook groups via Twitter seems to work better right now for getting them removed from Facebook than using Facebook’s own formal abuse reporting process. So that’s what I did on Thursday. Here’s an example:

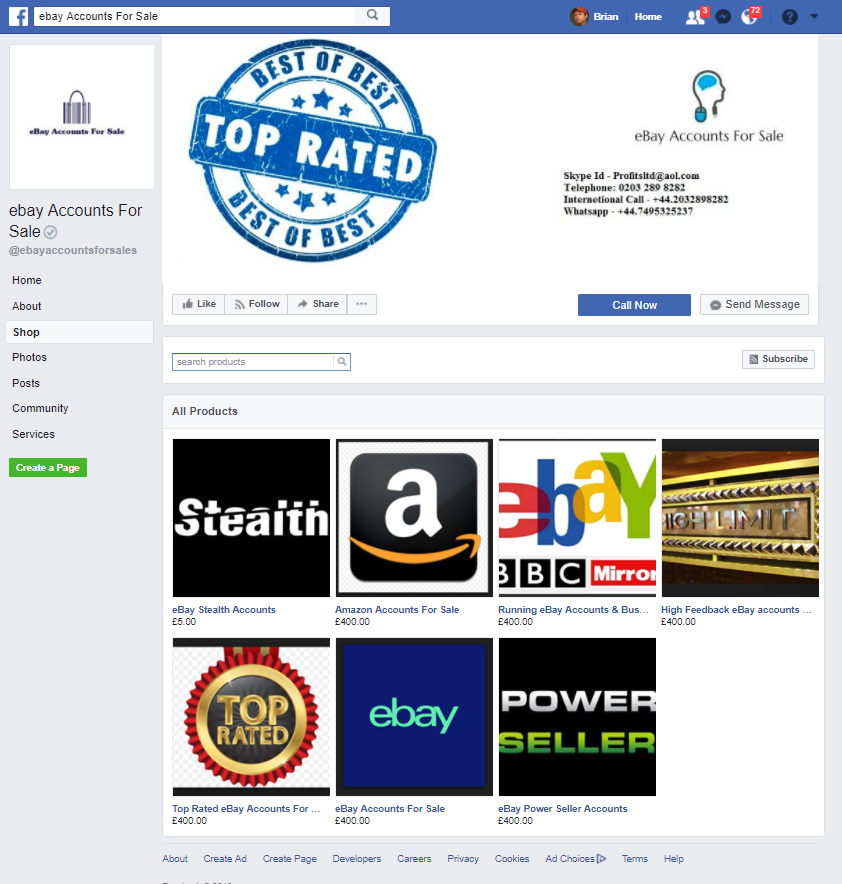

Within minutes of my tweeting about this, the group was gone. I also tweeted about “Best of the Best,” which was selling accounts from many different e-commerce vendors, including Amazon and eBay:

That group, too, was nixed shortly after my tweet. And so it went for other groups I mentioned in my tweetstorm today. But in response to that flurry of tweets about abusive groups on Facebook, I heard from dozens of other Twitter users who said they’d received the same “does not violate our community standards” reply from Facebook after reporting other groups that clearly flouted the company’s standards.

Pete Voss, Facebook’s communications manager, apologized for the oversight.

“We’re sorry about this mistake,” Voss said. “Not removing this material was an error and we removed it as soon as we investigated. Our team processes millions of reports each week, and sometimes we get things wrong. We are reviewing this case specifically, including the user’s reporting options, and we are taking steps to improve the experience, which could include broadening the scope of categories to choose from.”

Facebook CEO and founder Mark Zuckerberg testified before Congress last week in response to allegations that the company wasn’t doing enough to halt the abuse of its platform for things like fake news, hate speech and terrorist content. It emerged that Facebook already employs 15,000 human moderators to screen and remove offensive content, and that it plans to hire another 5,000 by the end of this year.

“But right now, those moderators can only react to posts Facebook users have flagged,” writes Will Knight, for Technologyreview.com.

Zuckerberg told lawmakers that Facebook hopes expected advances in artificial intelligence or “AI” technology will soon help the social network do a better job self-policing against abusive content. But for the time being, as long as Facebook mainly acts on abuse reports only when it is publicly pressured to do so by lawmakers or people with hundreds of thousands of followers, the company will continue to be dogged by a perception that doing otherwise is simply bad for its business model.

Update, 1:32 p.m. ET: Several readers pointed my attention to a Huffington Post story just three days ago, “Facebook Didn’t Seem To Care I Was Being Sexually Harassed Until I Decided To Write About It,” about a journalist whose reports of extreme personal harassment on Facebook were met with a similar response about not violating the company’s Community Standards. That is, until she told Facebook that she planned to write about it.

I sure guess you could be one of the 5,000 they hire!

Hopefully they’ll hire people who live in the areas being moderated.

One of their current problems is they’ve got huge staff in second/third-world countries moderating the pages of first-world users. Their sense of decency and morals and values are at odds with the users.

If they didn’t spend all their time silencing conservatives, they’d probably do a much better job. Gave up on those fools a couple years ago.

Spot on Jason. When you have third world country moderators deciding on what is acceptable and non-acceptable content, you have set the stage for anti American and particularly anti conservative feelings displayed in open forum. They seem to also delete any pro-Israel sites, but give a pass to “Kill all the Jews” sites. After all, these moderators are mostly enemies of freedoms.

While I take great issue with your attitude, I find your point eye-opening. Certainly by contracting folks from other countries to police a largely western community, we open ourselves to manipulation, either deliberate or implicit.

That’s quite an insight actually.

That ‘insight’ was nothing but rightwing talking points.

Ah yes the great conservative victimhood on display.

Yep, facebook is so known for disparaging and limiting conservatives that it was the #1 targeted platform of Russian agents to reach conservative Americans prior to the 2016 election.

Seriously and please, join the rest of us in reality.

Good to see Russian stooges and bots hard at work.

Exactly what I’ve been saying

I get “does not violate our community standards” for reporting 100 percent fake personal accounts all the time.

Sadly your experience with FB’s mainstream reporting system is typical. I’ve reported fake profiles that contact me which are quite obviously only recently set up to begin some sort of confidence scam, only to get the insincere message from FB about their understanding of my feelings but there’s no action being taken. It’s a frustrating experience that really doesn’t encourage the FB community to better involve itself in policing itself.

My report to FB regarding their missing automatic session expiration was also rejected because this issue is not covered according to the terms and conditions of their bug bounty program … that confirms that trust and reputation is more important to FB than real privacy & security for the user.

I get frustrated with their reporting mechanism as well. I’ve reported not groups, but numerous individuals accounts which are obviously fake. I believe they’ve only removed about 3 of my recommendations out of over 50.

please explain how you know profiles are fake?

For example, I had a friend who was suspicious about a contact from someone they didn’t know, and they asked for guidance. When I went to the wall of this “person”, it was obvious it was only recently created; there were no attributes like friend lists, photo albums, or other normal activities. I thought at the time that it was someone spearphishing for clueless users whose accounts could be taken over because of bad security settings and/or weak passwords, and maybe used as a place to launch spam or even worse.

This was quite a few years ago, and when I reported it, the account disappeared off the radar. It had the same exact spelling of another user, and it looked like they tried to copy a few identifiers of this original account to create a doppelganger account, to throw people off.

It’s not just about hacking and carders. The personal story of sexual harassment on Facebook from this Huffington Post report is disturbing. Facebook basically ignored her requests to remove objectionable content that was targeting her.

https://www.huffingtonpost.com/entry/i-was-sexually-harassed-on-facebook_us_5a919efae4b0ee6416a3be76

Disturbing? You really consider making fun of that “world news reporter” to be disturbing?

Did you know there are reporters at serious risk who cover important news? Consider that there are countries where just being a reporter will get you arrested and killed. Sometimes they are tracked down because their devices got hacked.

I suppose things of that nature pale in comparison to someone at the Huffington Post who cannot accept the fact that she is a public figure yet someway, somehow, individuals talk about her behind her back. Strange, it’s almost as if people are allowed to do that and this “world news reporter” is just mental. How is that even “world news” when she’s complaining about her personal issues anyway?

Mochtroid-X –

Yes, I find comments like “Smash, anal, wearing 2 condoms, using Vicks VapoRub for lube.” to be disturbing. So should you.

Do you seriously believe that comment is a legit threat? Or the screenshot with the comment depicting a gas chamber, do you seriously believe that comment author operates a gas chamber in real life?

Lets get to the bottom of this – was anyone posting pictures taken of her, her home, her friends, or her relatives? Was anyone openly going up to her and clearly displaying how they can hurt her? Was there a knife stabbed into her front door holding a letter written in pigs blood? Were there any casualties at all?

I’ve gotten friend requests from strangers before, and I don’t accept them. Amazing.

And no, I shouldn’t be disgusted with juvenile humor at all. I find it stupid for sure, but I’m not going to delete accounts because someone posted a picture of me and said what they thought about it. That’s absolutely ridiculous.

Found the straight, white, cis dude.

No, you found the grown up

What a shame you can’t also behave like a grown up and attempt to disprove the post instead of commenting like an ignorant child

I was never hiding?

Says some twat using the pseudonym “Mochtroid-X”… What’s a Mochtroid? And what happened to the other 9; did they die off or something?

Mochtroids are clones of metroids, from the Metroid series of video games by Nintendo.

X refers to the X Parasites, also from the Metroid series.

Mochtroids tend to die off easily, X Parasites not so much.

But Dave? Dave’s not here, man.

Humor? You are a terrible person! I truly hope that one day you are the victim of continual harassment. You deserve it!

There are several flaws in your line of reasoning. Which one to focus on? You gotta pick your battles. I’ll pick one, but rest assured that there are other reasoning flaws.

Not all speech is protected speech. You and I both know this. The question then becomes, “what kind of speech is protected under the 1st amendment?” Your opinion on the subject doesn’t really matter since it’s decided in the courts. From Miller vs. California:

“Obscene material is not protected by the First Amendment. Roth v. United States, 354 U.S. 476 , reaffirmed. A work may be subject to state regulation where that work, taken as a whole, appeals to the prurient interest in sex; portrays, in a patently offensive way, sexual conduct specifically defined by the applicable state law; and, taken as a whole, does not have serious literary, artistic, political, or scientific value. Pp. 23-24.”

Furthermore, “The jury may measure the essentially factual issues of prurient appeal and patent offensiveness by the standard that prevails in the forum community, and need not employ a “national standard.” Pp. 30-34.”

In case you still have doubts: http://www.news-gazette.com/news/local/2014-04-15/man-pleads-guilty-electronic-harassment.html

Rape and death threats are not trivialities. I don’t care how unlikely it is that they will actually be carried through; the threat itself is an act of aggression – the intent of the threat is to cause harm.

Some people are able to happily ignore these things, and some people aren’t. But nobody should be giving the perpetrators a free pass to continue to harass the innocent.

Very serious question. Facebook wants an AI dedicated to reading potentially vile, hateful, and illegal content and then making a value judgement on whether or not others should be allowed to view said content.

Let’s never mind what they will do to Facebook content. Facebook’s content is already terrible. But they’re going to be training an AI to see and react to the worst humanity has to offer. If it’s truly an AI, how are its values and reactions likely to evolve over prolonged exposure to this?

Once again, Facebook seems to be offering to cover a large problem with an even larger one. Kind of like cleaning your carpets with a jackhammer.

No AI is completely autonomous, fortunately. And AI doesn’t have emotional or psychological reactions to what it learns.

You make a great point, though.

Under attack. Help Fiona!

This is an example of why I coach my family to never use this app. It is one of the most dangerous user apps ever invented.

I have also flagged numerous accounts on FB and only had one actually get pulled. I found a “person” who had my business listed as his place of employment, and no amount of flagging would get that removed. It’s frustrating that anyone could gain legitimacy by using my name and I cannot do a thing about it. They just don’t care. Absolute power (and money) corrupts absolutely.

FB’s business model is based upon selling your personal data; they’re not doing it for their health, you know… And in Amerika (technically a 2nd-world country), you don’t own your personal data, which is why FB and others are now thinking twice about setting up shop in Ireland (a member of the EU, where, gasp, your personal data belongs to *you*, not to whichever slimeball bought it last).

On April 17, 2018, journalist Jesselyn Cook wrote an article for the Huffington Post about her personal experiences titled “Facebook Didn’t Seem To Care I Was Being Sexually Harassed Until I Decided To Write About It.” It’s a similar story: reporting abuse through Facebook’s Anti-Abuse process ignores obvious bad actors that should not have access to social networks, while publicly writing about it gets prompt results.

I had been on the fence for a long time about whether to stay with Facebook or delete my Facebook profile. After reading her story and the previous Krebs on Security article, “Is Facebook’s Anti-Abuse System Broken?”, I said enough is enough, I quit Facebook. Zuck, look at the groups reported to Facebook Anti-Abuse that these articles are focusing on: Alt-Right hate groups, identity thieves, criminals. Why would anybody want to associate with a company that allows their platform to promote such activity?

Thanks, Tim. I’m adding an update to the story with a link to that piece.

I have had pretty good luck reporting fake accounts that are impersonating friends of mine. It may be because they usually get multiple reports of the same fake account.

You don’t need artificial intelligence to predict that with any “new” Facebook AI the chief, overriding and paramount mission will remain increasing profits, which of course depend on the continual expansion of the monthly active user base.

They only care about advertiser revenue and getting their garbage stock to increase in value.

They could care less about the fake profile accounts and/or any illegal activity that takes place on the site

The only way to get them to respond is to get a state AG to launch an investigation into them for what is essentially harboring criminal activity

So it’s that 48 hours thing which seems to be consistent. I have received successful Facebook reports in as little as an hour. I think that is when a live breathing person takes a look. The ones I receive back in 48 hours are always “Thank you.” I think that there is a time-of-response limit that is triggered and clears the queue. I would love for some Facebook stats on how many reports get a temporary contract worker response and how many are fed to the algorithm. I do not think AI is quite up to the challenge although Mr. Zuckerberg is always quick to start coding. How does an Algorithm tell if the “Puppies for Sale” page is fake? (even after a report) I can tell by google searching the pictures, chatting with the page admin, looking up the address, etc. How does the algorithm do that? Can the algorithm realize that there isn’t a 50 breed dog operation located on 1060 W Addison St, Chicago with a local phone number? How is that programmed? While we are asking questions, why can I place a country filter on a page, but not a group, or on a personal page? That would be a handy way to keep Grandma from catching as many cupid scams.

There are lots of complaints about Facebook policies, procedures, and practices. But at least they are not hiding behind a claim of “not being a Tier of Truth” and do remove criminal enterprises on FB computers. I am disgusted by DreamHost in Brea, California refusing to remove the scam sites that they are servicing. I provided their abuse team the evidence of the scammer running fake staffing companies and they have continued to provide service. The scammer keeps a ‘company’ site up for a year and lets it expire, with other ‘companies’ taking its place. I have been watching the scammer for three years now. Other hosting companies take his stuff down when I provide the evidence.

Facebook is worse than the Holocaust. Anyone who is still on that privacy spilling POS deserves what they get. Same with people who use Yahoo and AOL mail services, etc. When you tell a retarded person that they’re retarded, they tend not to believe you… Because they’re retarded. There is no help for the mongs among us.

Phil,

Wow. The release of personal information that people have chosen to share is worse than the planned and systematic implementation of a genocidal event that eradicated half of an entire ethnic group? You really need to get some perspective or read a factual history book.

0.01 on the troll-o-meter.

This reminds me of an incident where I had the extreme misfortune to stumble across a Facebook page that glorified the killing and extraordinarily graphic mutilation of cats and dogs. It was so extreme that I nearly vomited looking at it. I immediately reported it for violence and Facebook replied two days later saying that page didn’t violate any guidelines and that no action was taken. To say I was furious would be a huge understatement. I seriously doubt these 15,000 moderators are doing their job; they’re just randomly hitting buttons or assuming the reports are fake and not even “investigating”.

I know I’m slow but help me out here.

We have a world-wide-accessible system which allows and encourages anyone to write/post anything on any topic at any time, repeatedly and there’s a problem when it actually happens? Millions of posts (and images) everyday which are then supposed to be monitored and culled by… whom? Someone who understands your value system and makes the right call, every time, on time?

If you were running FB, how would you tackle this problem? What filters would you use? What are the parameters?

I know we Americans have a constitutional amendment which guarantees the right to not be offended or inconvenienced, but most of FB’s participants are from other countries.

Lots of understandable complaints, who knows the answer?

Great stuff. Does the mainstream media follow you?

This was my experience as well. I reported a profile that appeared to be a bot impersonating my underage child, and got the reply that it wasn’t violating any terms. After I posted about it on Facebook and a bunch of my friends also reported it, it was taken down, but really? It would have taken 5 seconds for any literate adult to realize the profile I reported was both of an underage person and also “friends” with hundreds of people who were posting violent images in languages that don’t use the English alphabet.

Why bother trying to reform FacePlantBook? Both its data mining practices and its purges of conservatives show that it is rotten to the core. Boycott the damn thing!

The whole anti-abuse system is broken. They came after me and deleted my account because I had a public squabble with a brother on his Facebook page. Since when is it their job to monitor family quarrels, and how does that relate to them being an anti-fraud or abuse watchdog? I asked them but no one ever replied (after sending 10 emails to them). Instead I continued to receive requests for proof of name (which was impossible, as it was my nickname by which people know me, although my surname was accurate on the account). I finally said delete the damn account. Facebook is nothing more than a joke now.

I reported a Facebook profile which was actively impersonating Bank of America’s social media presence, and which reached out to me (After I posted something to BoA’s real Facebook page) to try to scam me out of account information. Facebook’s response was the typical “thank you, we reviewed, found nothing, no action taken” despite the fact that 10 seconds of looking at the Facebook Messenger dialogue between the profile and me would convince any moderately awake person that the profile was a scammer.

(I see now that, with no further comment to me at all, the profile is gone; maybe me having spoken with the chief security officer’s staff at BoA through a mutual professional acquaintance had something to do with it).

It shouldn’t take a reporter or a professional link to senior staff of a major bank to get Facebook to act; there should be a “Look at the messages, it’s obvious” reporting option. As others have commented, including Krebs himself, Facebook’s report options today are woefully inadequate. The system is broken.

I ran into the same thing 3 years ago when there were a number of “Free Disney Park Ticket” scams running wild on Facebook. The first one I reported, they responded within 24 hours and took it down. The second one that was an identical copy of the first one, with links to the same phishing URL, I received the “We looked over the Page you reported, and though it doesn’t go against one of our specific Community Standards, we understand that the Page or something shared on it may still be offensive to you and others.” At the time of reporting it, there was no option to report a scam so I chose harassment. To this day, the Disney Redeem page is still not taken down. Fortunately it looks like everyone got the hint that the site is bad likely because the URL shortener provider has flagged the URL as malicious.

HELP WANTED!

Internationally known social medium company is hiring people to improve its screening of people who post to its pages. Applicants must have degree in international relations and have worked for a large metropolitan newspaper. Experience in running own web page a plus.

Aha!

“Internationally known social medium company is hiring people to improve its screening of people who post to its pages.”

“Applicants must have degree in international relations”

– Check. I’ve got that.

“and have worked for a large metropolitan newspaper”

– My brother did years ago and told me all about it. That probably counts.

Experience in running own web page a plus

– No, but I’ve been a Moderator elsewhere, and I’m a Facebook group moderator now, so I know all about this stuff. Easy-peasy. When can I start? How much does it pay? And do I get a “Facebook Moderator” T-shirt?

Hey, I fixed that issue. Delete your account from that scammy web site. And it’s fixed!

Great article. Facebook needs to improve its processes and at least allow an “other” category if they don’t want a “scam” category or “fake news”, etc.

I like the idea that a report on twitter is more likely to get results. Not like for me (less than 100 followers) but true for those with large numbers of followers.

There was a presentation about something similar to what you posted. One of the slides covered the differences between Facebook groups and Dark Web forums. One of the challenges is the Hydra theory: slice off one head and another one will appear, and it will get harder to face.

Link: https://youtu.be/pKV3_ikClPc

From an intelligence-gathering standpoint, the more you report, the more FB takes them down, and the more groups these admins are going to make, switching their FB groups’ visibility to something more secure (secret mode).

Why do you think Hackforums is still up and running despite cybercriminals using that forum to sell malware and botnets? If you take it down, they will just move elsewhere making it tougher for law enforcers to monitor.

Personally, I disagree with your approach. You assume that a page advertising the sale of logins means they are selling actual logins. I have seen groups naming themselves things like ‘Vietnam Cyber Army’ that do not do any hacking, and groups like ‘Israel Cyber Army’ that actually hack websites and openly flaunt their ‘success’. The point is that just because a group or a page is called ‘Carding Credit Cards’ does not mean they are doing any actual carding-related activities. Are you even in most of these closed groups to see whether actual cybercriminal activity was happening before you reported them? Or are you simply assuming based on the keywords you used and the groups that appeared in your search?

You are absolutely correct. I have reported FAKE profiles to Facebook over 700 times and always get the denial answer.

https://www.facebook.com/antifraudintl.net/

And https://www.facebook.com/Miyuki-170487513066432/

Are FAKE profiles set up and run by Chandra Sekhar Sathyadas to defame, bully and stalk me and now he is attacking Facebook AND its seniors, but Facebook support seem incapable of acting on this fake profile.

How is it possible for Facebook to say it is not in violation of their community standards when they are FAKE accounts? Thank you. Jimmy Jones.

If you have that much of a problem with Facebook, why do you stay?

You’re like that guy who keeps complaining about getting lice from prostitutes.

So leave and let the scammer continue to defame me and others?

Why not try to get Facebook to adhere to their policy and get them to suspend the scammer for having multiple and FAKE accounts in clear violation of the FB community standards.

Because neither Facebook’s policy nor its software are the problem. The problem is the people who are doing the moderating. Oh, and you are not a customer — their customers write checks to Facebook. You are the product they are selling.

Yes, leave.

Hire a lawyer to draft a cease and desist letter, then publicise the letter in a press release. You’ll get the attention of Facebook, with none of the risk of litigation.

As long as you stay, Facebook is making money off your continued views of advertising and data gathered about your interests. They have no incentive to resolve anything if you stay and cost them nothing.