It happens all the time: Organizations get hacked because there isn’t an obvious way for security researchers to let them know about security vulnerabilities or data leaks. Or maybe it isn’t entirely clear who should get the report when remote access to an organization’s internal network is being sold in the cybercrime underground.

In a bid to minimize these scenarios, a growing number of major companies are adopting “Security.txt,” a proposed new Internet standard that helps organizations describe their vulnerability disclosure practices and preferences.

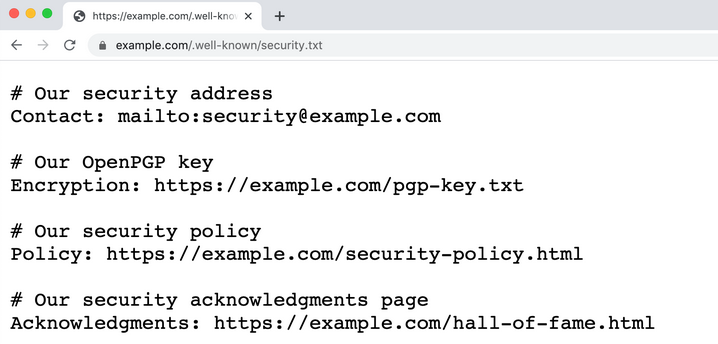

An example of a security.txt file. Image: Securitytxt.org.

The idea behind Security.txt is straightforward: The organization places a file called security.txt in a predictable place — such as example.com/security.txt, or example.com/.well-known/security.txt. What’s in the security.txt file varies somewhat, but most include links to information about the entity’s vulnerability disclosure policies and a contact email address.
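As a rough illustration of how a reporting tool might use such a file, the sketch below builds the two candidate locations mentioned above and parses a file's `Field: value` lines. The sample contents, the example.com addresses and the helper names are made up for illustration. The field names simply mirror the kinds of entries described in this post.

```python
from collections import defaultdict


def candidate_urls(domain: str) -> list[str]:
    """Return the two locations mentioned in the article, .well-known first."""
    return [
        f"https://{domain}/.well-known/security.txt",
        f"https://{domain}/security.txt",
    ]


def parse_security_txt(text: str) -> dict[str, list[str]]:
    """Parse 'Field: value' lines, skipping blank lines and '#' comments."""
    fields: dict[str, list[str]] = defaultdict(list)
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#"):
            continue
        # Split on the first colon only, so "mailto:" values stay intact.
        name, sep, value = line.partition(":")
        if not sep:
            continue  # not a 'Field: value' line; ignore it
        fields[name.strip().lower()].append(value.strip())
    return dict(fields)


# Hypothetical file contents, for illustration only.
SAMPLE = """\
# Our security contact details
Contact: mailto:security@example.com
Policy: https://example.com/disclosure-policy
Hiring: https://example.com/jobs
"""

parsed = parse_security_txt(SAMPLE)
print(candidate_urls("example.com"))
print(parsed["contact"])
```

Fetching the file over the network is deliberately left out so the sketch runs offline.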

The security.txt file made available by USAA, for example, includes links to its bug bounty program; an email address for disclosing security related matters; its public encryption key and vulnerability disclosure policy; and even a link to a page where USAA thanks researchers who have reported important cybersecurity issues.

Other security.txt disclosures are less verbose, as in the case of HCA Healthcare, which lists a contact email address, and a link to HCA’s “responsible disclosure” policies. Like USAA and many other organizations that have published security.txt files, HCA Healthcare also includes a link to information about IT security job openings at the company.

Having a security.txt file can make it easier for organizations to respond to active security threats. For example, just this morning a trusted source forwarded me the VPN credentials for a major clothing retailer that were stolen by malware and made available to cybercriminals. Finding no security.txt file at the retailer’s site using gotsecuritytxt.com (which checks a domain for the presence of this contact file), KrebsOnSecurity sent an alert to its “security@” email address for the retailer’s domain.

Many organizations have long unofficially used (if not advertised) the email address security@[companydomain] to accept reports about security incidents or vulnerabilities. Perhaps this particular retailer also did so at one point; however, my message was returned with a note saying the email had been blocked. KrebsOnSecurity also sent a message to the retailer’s chief information officer (CIO), the only person in a C-level position at the retailer who was in my immediate LinkedIn network. I still have no idea if anyone has read it.

Although security.txt is not yet an official Internet standard as approved by the Internet Engineering Task Force (IETF), its basic principles have so far been adopted by at least eight percent of the Fortune 100 companies. According to a review of the domain names for the latest Fortune 100 firms via gotsecuritytxt.com, those include Alphabet, Amazon, Facebook, HCA Healthcare, Kroger, Procter & Gamble, USAA and Walmart.

There may be another good reason for consolidating security contact and vulnerability reporting information in one, predictable place. Alex Holden, founder of the Milwaukee-based consulting firm Hold Security, said it’s not uncommon for malicious hackers to experience problems getting the attention of the proper people within the very same organization they have just hacked.

“In cases of ransom, the bad guys try to contact the company with their demands,” Holden said. “You have no idea how often their messages get caught in filters, get deleted, blocked or ignored.”

GET READY TO BE DELUGED

So if security.txt is so great, why haven’t more organizations adopted it yet? It seems that setting up a security.txt file tends to invite a rather high volume of spam. Most of these junk emails come from self-appointed penetration testers who — without any invitation to do so — run automated vulnerability discovery tools and then submit the resulting reports in hopes of securing a consulting engagement or a bug bounty fee.

This dynamic was a major topic of discussion in these Hacker News threads on security.txt, wherein a number of readers related their experience of being so flooded with low-quality vulnerability scan reports that it became difficult to spot the reports truly worth pursuing further.

Edwin “EdOverflow” Foudil, the co-author of the proposed notification standard, acknowledged that junk reports are a major downside for organizations that offer up a security.txt file.

“This is actually stated in the specification itself, and it’s incredibly important to highlight that organizations that implement this are going to get flooded,” Foudil told KrebsOnSecurity. “One reason bug bounty programs succeed is that they are basically a glorified spam filter. But regardless of what approach you use, you’re going to get inundated with these crappy, sub-par reports.”

Often these sub-par vulnerability reports come from individuals who have scanned the entire Internet for one or two security vulnerabilities, and then attempted to contact all vulnerable organizations at once in some semi-automated fashion. Happily, Foudil said, many of these nuisance reports can be ignored or grouped by creating filters that look for messages containing keywords commonly found in automated vulnerability scans.
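A keyword filter of the kind Foudil describes could look something like the sketch below. The keyword list, the threshold and the `triage` function name are all assumptions chosen for illustration; a real list would be tuned to the junk a given team actually receives.

```python
import re

# Hypothetical phrases that often show up in unsolicited scanner output.
SCANNER_KEYWORDS = [
    "missing spf record",
    "clickjacking",
    "x-frame-options",
    "automated scan",
    "bug bounty reward",
]

_PATTERN = re.compile(
    "|".join(re.escape(keyword) for keyword in SCANNER_KEYWORDS),
    re.IGNORECASE,
)


def triage(subject: str, body: str, threshold: int = 2) -> str:
    """Label a report 'likely-automated' when enough distinct keywords appear."""
    text = f"{subject}\n{body}"
    hits = {match.group(0).lower() for match in _PATTERN.finditer(text)}
    return "likely-automated" if len(hits) >= threshold else "needs-review"


print(triage(
    "Security issue: Missing SPF record",
    "Our automated scan also found clickjacking on your login page.",
))
print(triage(
    "Stolen VPN credentials for your network",
    "A source forwarded credentials that appear to belong to your staff.",
))
```

Grouping rather than deleting the flagged messages keeps a human in the loop for the occasional report that trips the filter but still matters.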

Foudil said despite the spam challenges, he’s heard tremendous feedback from a number of universities that have implemented security.txt.

“It’s been an incredible success with universities, which tend to have lots of older, legacy systems,” he said. “In that context, we’ve seen a ton of valuable reports.”

Foudil says he’s delighted that eight of the Fortune 100 firms have already implemented security.txt, even though it has not yet been approved as an IETF standard. When and if security.txt is approved, he hopes to spend more time promoting its benefits.

“I’m not trying to make money off this thing, which came about after chatting with quite a few people at DEFCON [the annual security conference in Las Vegas] who were struggling to report security issues to vendors,” Foudil said. “The main reason I don’t go out of my way to promote it now is because it’s not yet an official standard.”

Has your organization considered or implemented security.txt? Why or why not? Sound off in the comments below.

This sounds ridiculous to me. Personally I have stored my own personal usernames and passwords in a passwords.txt file for years and have only jokingly stated that if I ever get hacked, it will be my own damned fault.

Hi Justin. Did you read a different story? This post doesn’t have much to do with usernames or passwords.

Hi Brian

Most of us have a Gmail account. I’ve been using an add-on for a year and I can’t live without it.

It’s called mailtrack. I believe the url is mailtrack.io.

It tells you whether an email is opened or not, just like the big firms can tell when we open their emails.

It’s only 2 bucks per month (24 per year). I could have told if the CIO opened your email with mailtrack.

Lol, I don’t think he cares in that way like a sales person or stalker would. He is more likely expecting a reply saying something like, “Thanks so much! We are on it.” Or “Thanks, can you help us in some way?”

This technology depends on automatic downloading of images to log an HTTP GET request from a “tracking pixel” embedded in an email. This does not work if the organization – like mine and many other security-minded companies – has automatic image downloading disabled in their mail clients.

No, you likely couldn’t have. PS, turn off external images in your email client.

That’s a lot of text files you need to maintain when you’re a large entity with the number of domains in the thousands. And couldn’t the same thing be achieved with TXT records in DNS? Which path has the least resistance wrt. the “security” team making updates to the contents? In my case it would be significantly easier to update DNS en masse than to find all the web admin for every domain and ask them (nicely) to deploy said text file.

DNS TXT records might be cool to use. But length limitations of each record make it less scalable for arbitrary data. Many organizations might want to publish longer policy text.
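To make the length limitation concrete: each character-string inside a TXT record is capped at 255 bytes, so longer values have to be split across several strings in the same record. The sketch below shows that splitting. The `security_contact=` and `policy=` value formats are hypothetical and not part of any standard discussed here.

```python
MAX_TXT_STRING = 255  # DNS caps each character-string in a TXT record at 255 bytes


def split_for_txt(value: str) -> list[bytes]:
    """Split a value into chunks that each fit in one TXT character-string."""
    data = value.encode("ascii")
    return [data[i:i + MAX_TXT_STRING] for i in range(0, len(data), MAX_TXT_STRING)]


# Hypothetical record contents, for illustration only.
short_value = "security_contact=mailto:security@example.com"
long_value = "policy=" + "x" * 600

print(len(split_for_txt(short_value)))  # a contact address fits in one string
print(len(split_for_txt(long_value)))   # a long policy text needs several
```

A bare contact address fits comfortably, which is the point made in the replies; it is full policy text that gets awkward.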

A flat text file gives more room for flexibility, compared to a TXT record that normally is expected to follow an RFC.

Also, many organizations don’t manage their DNS in house, while their website content is much easier to manage.

Agree on the TXT length issue, but we’re talking contact details, not a whole policy. I’d also hope that even if an organisation doesn’t manage DNS in-house, requesting a change isn’t so arduous that it’s actually a bottleneck.

Perhaps both options need to be considered. Both appear to have pros and cons that may appeal to different organisations.

Honestly, any standard should support both. There are cases where it’s hard (or impossible) to modify the website, eg a remote hosted 3rd party e-commerce site pointed to by DNS.

Short TXT rec, possibly just an email or ptr to a longer doc at an arbitrary URL

Any organization worth anything is going to be running a reverse proxy/WAF in front of their sites. Trivial to send ANY security.txt request to a specific URL.

I agree. I think that security.txt sounds like a fucking stupid idea. Why not just put it in robots.txt? Does WordPress even ALLOW us to use some random text file called security.txt?

Fuck this idea before it gets any traction.

Robots.txt is already in the standard, for a very different purpose. It’s not meant for arbitrary instruction for human eyes. It’s meant only to be parsed by web crawling spider robots. Start adding arbitrary text, and it’s gonna be problematic.

Yes, but you touch upon an annoying aspect of all of these security ‘research’ and corporate security scanning companies like Stretchoid, Internet Scanning Project, Shodan, Censys, Foregenix, etc.

They don’t obey robots.txt and some of them don’t even have a way to opt out of the garbage noise of their incessant scanners. One has to block HUNDREDS of netblocks to improve the signal-to-noise ratio of logs and focus on REAL threats. These organizations are only motivated by profit and are as benevolent as Facebook when it comes to their true actions (FB is evil). Several of them go far beyond banner grabbing and port scanning into what amounts to exploitation or DoS. This is something we should be doing on our own.

I am not a fan of security.txt as most of these organizations will send me more junk false positives. The greatest gem I got as of late is an Exchange vulnerability on one of my LINUX servers. Or the incessant crawling for IoT, TV, home router vulns, etc. Furthermore, when did I authorize these jokers to even do this scanning?

Perhaps we should extend security.txt to include details of whether we allow said ‘scanning’ or not and by whom. If that was the case, I might be interested in embracing it.

I agree. It’s been a pain.

The robots.txt exists in the standard for a reason, and when people ignore it, it sucks. There’s also a Do Not Track HTTP header flag that gets ignored too. Standards only really work when people abide by them.

External scanning is a fact of life on the internet now. If the internet were designed originally for security, then maybe we could complain, but it’s not.

It really depends on what you value most in your organization.

For those who have to deal with false positives, they don’t want to see more.

For organizations who have complete control over their DNS and WHOIS records, a security.txt is redundant.

However, I can see how security.txt might be useful.

Many, many organizations have control over their web servers to create a flat text file but they outsource DNS management to a third party. The WHOIS contact information may already be utilized by a completely separate department for legal and abuse of just the domain registry info.

Many organizations face security threats beyond the common and recurring vulnerability scans, whether from a white hat with a real vulnerability to report or a black hat trying to extort a ransom.

Yes this is going to be abused, and you’ll get more spam. But it can be useful for security teams who don’t know what they’re missing. Some teams don’t care if they get more spam, if it increases the chance of them getting that one vital report that can often be sent to the wrong person and go down a black hole.

WordPress is just the CMS, you can make a subfolder at your host wherever you want and put a text file in it.

I believe e-mail will never work. And “/security.txt” is too easy to harvest, making it a great phishing target.

I would suggest a multiple-captcha protected web page instead, such as http://domain.com/securityreport

This will help screen all the automated scripts. Add fields and questions to allow a first screening. And now we have the opportunity to write scripts that will parse the submissions, decide whether we want to follow-up, sort by order of importance, etc.

Another option would be to leave a PHONE NUMBER instead. VoIP rates are very cheap. Your first prompt is “Press to continue”. This will make it impossible to fully automate calls with pre-recorded messages. Ask the caller to record the reason for their call, explain the vulnerability they would like to report, and their contact e-mail address. Only the serious one will be willing to record a message. Within the first few seconds of listening to the message you will know whether this is worthy or not. When you get a good one, get back to the individual and send them an Amazon gift card 🙂

Thanks for the awesome site, Brian.

“Only the serious one will be willing to record a message”

-I dunno about that particular point. Not a bad idea otherwise.

I like the out of band aspect, Amazon gift card not as much.

A Krebs bobblehead maybe? Numbered edition. Oh yeah.

I do find it kind of hilarious that none of these companies have an abuse @ org . com that’s monitored? Can’t we mandate as much? Don’t their whois infos go to someone who can be $5 hammered? Can’t their ISP get to them? It seems like a solvable problem in a sense that the ones who have any CHANCE of updating their situation or intention to do so, they will be reachable somehow. The ones who aren’t will always be vectors regardless of the info, the underline on their security paradigm that they’re unreachable!

Even if you could get to them, would they care? We need option #2.

Although I like the idea of adding more info to the WHOIS information.

The internal security team of an organization is usually NOT the same department that manages DNS.

The “abuse” email address is still there in the WHOIS information. The example /security.txt that Krebs gives is for Amazon.

They have WHOIS abuse contact email and phone numbers going to their legal team, but for Bug Bounty programs or out of band security vulnerability disclosures (with PGP encrypted email), they use /security.txt.

Very different methods for contact, for very different purposes.

For smaller organizations, the WHOIS information is mainly handled by the Domain Registrar, with several degrees of separation from the security team.

So although DNS, WHOIS may seem logical to find the proper contact info, it’s been around so long, and for such a different purpose, it’s more likely to result in messages going to the wrong people.

A plaintext security.txt file might be a good idea if the security team has better control over it, as they can manage it themselves and cut out the middlemen.

I like your ideas. Certainly will protect against automated spam.

Not sure how many security departments want to maintain their own website though. The idea for security.txt is that it is really simple.

Instead of complaining about receiving low-quality vulnerability scan reports, maybe companies should strive to not have any vulnerabilities. Thus, no reports. Why is being informed of a scanned security vulnerability considered a nuisance report? Sometimes the smallest vulnerabilities are exploited into spectacular breaches.

They’re oftentimes not actual vulnerabilities but rather false positives triggered by low-quality scanning with no manual review.

Also, attempting to exploit vulnerabilities in someone else’s systems without permission is a felony in the US and many other countries, even if you’re not doing so maliciously.

You are making a huge assumption. You are assuming that the people reporting the vulnerabilities are actually competent. A lot of times, people THINK they have found a vulnerability but they haven’t because they made a mistake, the “vulnerability” requires root or Administrator permissions, physical access to a machine for long periods of time, an insecure software configuration, etc.

The other assumption you are making is people know how to create secure software. We don’t. Don’t believe me? Ask yourself why every major operating system, application, database, piece of middleware, etc. has lots of security vulnerabilities. The reality is security is VERY hard and no one knows how to do it.

Here are some of the challenges in creating secure software:

1. Motivating software engineers and IT people to care about security. Most don’t care and it shows. Examples include not validating input from untrusted sources (the internet, a file not created by the current user, etc.); not patching systems because the security update might break something; not patching their systems or containers because they don’t care; not even bothering to put a password on an internet connected database or cloud storage account (AWS S3 or Azure blob storage); and not creating threat models.

2. Motivating teams to care about security. Customers and senior managers frequently reward teams which create insecure or somewhat secure software. A good example of this is Apple’s iMessage communications application on the iPhone, iPad and I think Mac. Users love the reaction emojis, the support for a lot of different data types (images, audio files, PDF documents, web links, etc.), the ease of use, the polish, the visual appearance, etc. If you took away all of the data types and just supported text, iMessage would be a lot more secure because it would run less code (i.e. have a smaller attack surface). However, users would scream because a lot of the features they love were taken away. Note that I like iMessage and use it.

Another example is users love new features and buy products which are easy to use and have great functionality. They frequently will put up with bugs and imperfections and this includes security bugs.

3. Motivating customers to care about security is hard. The most obvious example is lots of people refuse to replace software and hardware which no longer receives security patches. Examples include phones which are used after the vendor stops issuing security patches, IT appliances (VPNs, firewalls, etc.) which stop receiving security updates and of course using old versions of Windows which do not receive security updates. I have seen a lot of people online who are proud to still use Windows XP (I saw one commenter on this site arguing that his XP machine was safe) or Windows 7 even though these OSs do not receive updates and are easy to hack (no updates means known vulnerabilities and known vulnerabilities means hackers know how to hack your device).

4. Measuring security is hard. How do customers and managers determine if software A is more secure than software B? It’s hard because you have to review the code, the architecture, the deployment and you have to know what threats exist and how the software protects against them. No one does this. Another example is how does a manager know if software engineer X is better than software engineer Y? Security vulnerabilities are often subtle and managers usually have no way of knowing which engineers create lots of vulnerabilities and which ones create fewer vulnerabilities.

5. Software is extremely complex and there are a lot of things to defend against. Many of them are not obvious. Here is an example: on computers, X + 1 can be less than X. Intuitively, that makes no sense. How can a number’s value decrease if you add 1 to it? In math, it can’t. On computers it can. Let’s say you store the number 255 in an unsigned byte. An unsigned byte can store numbers between 0 and 255. If you add 1 to 255, the result is 0. This is called an integer overflow and it leads to a lot of security vulnerabilities. Protecting against them is not hard but it takes work and a LOT of software engineers either will not do the work or do not realize they have to do the work. There are a lot of classes of security vulnerabilities like this.

6. Security bugs are usually not in security code. They are usually in code which does something useful like process a font, an image, a name and address for a package, etc. If this software is passed malformed data, it malfunctions and lets the attacker do something the author never wanted the attacker to do. The problem here is it means software engineers have to get all of their code right, not just a small piece of it.

7. Attackers (hackers) only need 1 vulnerability to break into a system. Defenders (software engineers, IT people, etc.) need to fix all vulnerabilities in order to have a secure system. One mistake and the attacker can get in.

8. Almost all software is built on other software. What this means is almost no team writes 100% of the code their app uses. They build on operating systems, databases and libraries written by other teams or individuals. It’s not economical to write everything from scratch. The problem is this means teams have to keep track of all of the vulnerabilities in all of these things they use and make sure they patch them. It’s also hard because a lot of these products are easy to use insecurely. Examples include using easy to guess passwords (i.e. 12345, QWERTY, password, etc.), SQL injection vulnerabilities and data deserialization vulnerabilities (JSON, XML and binary), etc. The other problem is software engineers and IT people have to learn all of these systems and that is hard. A lot of software engineers don’t even read the documentation for the libraries and frameworks they use!

9. Looking for vulnerabilities or bugs in code reviews is hard, boring and not rewarded. Not surprisingly, lots of things slip through. A code review is a process where software engineer A writes or modifies a part of a program and software engineer B reviews the changes.

10. Perfection is very very very very hard to achieve and is usually not possible to achieve. Think about this when you think about point 7. In order to have secure software, you need to be perfect but humans are far from perfect.
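The unsigned-byte example in point 5 can be reproduced in a few lines. Python integers do not overflow on their own, so this sketch imitates an 8-bit register by masking the result, and uses `ctypes.c_uint8` as a second check. The function names are made up for illustration.

```python
import ctypes


def add_one_unsigned_byte(x: int) -> int:
    """Add 1 the way an 8-bit unsigned register would: keep only the low 8 bits."""
    return (x + 1) & 0xFF


def checked_add_one(x: int, max_value: int = 255) -> int:
    """Refuse to wrap: raise instead of silently returning a smaller number."""
    if x >= max_value:
        raise OverflowError(f"{x} + 1 does not fit in an unsigned byte")
    return x + 1


print(add_one_unsigned_byte(254))      # 255
print(add_one_unsigned_byte(255))      # 0, so X + 1 is less than X
print(ctypes.c_uint8(255 + 1).value)   # 0, ctypes wraps the same way
```

The checked version is the "not hard but it takes work" part: the guard is one line, but it has to be written everywhere an addition could wrap.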

This is not an exhaustive list but my point is saying “they just need to write secure software” is unrealistic. Security is hard to achieve, expensive and requires a lot of knowledge and work. Most software engineers, IT people and customers are not willing to do this work and they certainly are not willing to pay for it. Even if they were willing to do it and would pay for it, they would not be able to tell the difference between secure software and insecure software which means they have no way to know if the people creating the software are doing the right thing. Finally, security requires perfection and well, no one and no organization is perfect.

Well said. This is why I walked away from my software engineering job that had a very nice salary. The big paychecks were no longer making up for the anxiety and sleepless nights worrying about what might go wrong with my code or that written by some lowest bid contractor. Luckily I invested all that excess salary over the years instead of blowing it on toys. I’m an early retiree now and every day is Saturday.

“Sometimes the smallest vulnerabilities are exploited into spectacular breaches.”

Very sometimes indeed. But you admitted it as a “low-quality” report yourself.

Do you start high-$ engineers on a low quality lead or a high quality lead first?

Which do you think is more likely to bear fruit?

No organization that does anything online will have zero vulns. Some do strive.

All you can do is take away their public pretense of not knowing about them.

High quality (reproducible) bug reports in a public forum accomplish that.

Low quality scans of ‘possibugs’ are grains of sand on the beach parking lot.

Where do you want to start, just anywhere? It’s as realistic as zero vulns.

I believe security.txt is a good idea. No need to post your email address in the text. Having a web form with Captcha will remove the possibilities of bots sending spam while allowing security researchers to send any findings to the security admins.

Not receiving important notifications about security issues carries great risk.

Captcha is great. But no longer a simple text file.

Maybe just base64 encode the email. Or something simple for a human to figure out, but would mess up scrapers. I remember when lots of sites used <at> instead of the @ symbol to avoid spam scraping. That may or may not still work.

Less than symbol “at” greater than symbol, instead of the normal email “at” symbol.
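Both obfuscation ideas above are easy to sketch. The address and function names here are placeholders, and neither trick is real protection: any scraper that bothers to decode base64 or undo the substitution will recover the address.

```python
import base64


def obfuscate_email(address: str) -> str:
    """Base64-encode an address so it no longer looks like one to a naive scraper."""
    return base64.b64encode(address.encode("utf-8")).decode("ascii")


def reveal_email(encoded: str) -> str:
    """Reverse obfuscate_email; this is what a human reporter would do."""
    return base64.b64decode(encoded).decode("utf-8")


def at_style(address: str) -> str:
    """Swap the @ sign for the older ' <at> ' convention."""
    return address.replace("@", " <at> ")


address = "security@example.com"  # placeholder address
encoded = obfuscate_email(address)
print(encoded)
print(reveal_email(encoded))
print(at_style(address))
```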

“You have no idea how often their messages get caught in filters, get deleted, blocked or ignored.” Yes, yes I do.

We actively filter by bitcoin wallets in email – to the bin. If disaster hits we will rebuild – we do it twice a year for practice. Not going to waste time chatting with some terrorist cunt that would be sleeping with the fish if anyone knew their name.

Hmm, https://gotsecuritytxt.com/query/krebsonsecurity.com shows nothing 🙂

A tampered security.txt file is an issue. A PGP signature can help mitigate that risk. However, the standard should add a federated lookup / verification service for security.txt files or at least offer “pinning” to better catch tampering. Some may counter that would make changes more difficult. In my view, that would be good. Security.txt should rarely change.
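One way to read the “pinning” suggestion is for a researcher's tool to remember a hash of the last known-good file and flag any change. The comment does not specify a mechanism, so the sketch below is only one interpretation, with made-up file contents. It does not replace verifying a PGP signature.

```python
import hashlib


def fingerprint(content: bytes) -> str:
    """Return the SHA-256 hex digest of the file's bytes."""
    return hashlib.sha256(content).hexdigest()


def is_unchanged(content: bytes, pinned_fingerprint: str) -> bool:
    """True when the freshly fetched file matches the fingerprint pinned earlier."""
    return fingerprint(content) == pinned_fingerprint


# Hypothetical file contents, for illustration only.
original = b"Contact: mailto:security@example.com\n"
tampered = b"Contact: mailto:attacker@example.net\n"

pin = fingerprint(original)
print(is_unchanged(original, pin))  # True
print(is_unchanged(tampered, pin))  # False
```

A mismatch only says the file changed, not why, so a legitimate update would need a human to re-pin it.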

To prevent reporting spam, monetize! Announce that you won’t open any email that doesn’t come with a $20 bill “attached”. Or evidence of a donation to a security research fund, from which, as a known researcher, you could request support or reimbursement.

I am wondering whether or not spammers would go to the slight inconvenience of PGP encrypting their email. If not, perhaps the security.txt file could inform users that email submissions without a valid PGP header would be automatically deleted and that all submissions must be digitally signed. Replies would only go to the address in the signature.
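A first-pass version of that rule could simply look for OpenPGP ASCII-armor header lines in the message body, as sketched below. This only detects that a message claims to be signed or encrypted; it does not verify any signature, which would require an actual OpenPGP implementation. The function name and sample bodies are illustrative.

```python
# Standard OpenPGP ASCII-armor header lines for signed and encrypted messages.
ARMOR_MARKERS = (
    "-----BEGIN PGP SIGNED MESSAGE-----",
    "-----BEGIN PGP MESSAGE-----",
)


def has_pgp_armor(body: str) -> bool:
    """True when any line of the body is a PGP armor header line."""
    return any(line.strip() in ARMOR_MARKERS for line in body.splitlines())


# Made-up message bodies, for illustration only.
signed_body = "-----BEGIN PGP SIGNED MESSAGE-----\nHash: SHA256\n\nFound an issue.\n"
plain_body = "Hello, your site is missing a header. Please pay a bounty.\n"

print(has_pgp_armor(signed_body))  # True
print(has_pgp_armor(plain_body))   # False
```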

…the only issue with this is that anyone can get a gpg (the open source equivalent of PGP) key and can “sign” the email with the key they generate…

…so yes, you “know” them, but not really…

…it would not take too long for the spammers to figure this out…

I think that was his point, a slight inconvenience is often enough to avoid spamming.

Yeah, they’ll figure out what happened, but I seriously doubt they’re going to recode their spamming email client for pgp.

Exactly. General spammers don’t seem to harvest email addresses and encrypt messages to people who use keyservers.

Perhaps he was thinking that slight inconvenience meant that I intended this part of a puzzle to be a magic bullet for weeding out Joe Nmap’s supposed vulnerability report. Unfortunately, that probably isn’t possible.

I would also be concerned that by having a reporting mechanism, some management outside of the security team might think that they could no longer state “we have done everything possible to secure our systems” if a valid report is missed and not acted upon before a breach. I’m afraid liability and lawyers internally could derail any plan.

…so let’s put on a black hat for sake of argument…

…if I force the defender to verify the signature and then decrypt the email I’ve added to their workload which may be what I intended to do in the first place so I could sneak a real attack by…

…or maybe I just wanted to increase their workload cuz that’s the way I am…

I’m not sure if any internal bug bounty program is suitable if the staff is so small that they will get overwhelmed looking at email.

BTW, email clients verify and decrypt signatures automatically without adding to the workload.

…sure, but bug bounty programs are a potential way in so attackers will try to exploit that route…

…web-based email, or email clients that check the ckl/crl list and the key servers is not how you read bug bounty submissions in any case…

…garden variety spammers we don’t care much about in the grand scheme, attackers we do…

It’s risk vs reward. Many organizations agree: the minuscule increase in risk that having a bug bounty program may present is usually dwarfed by the benefit of getting a lot more eyes on the real problems on the perimeter.

Exploits of the slightly increased attack surface may be notable, but enterprise environments aren’t getting hit in any meaningful way compared to the attacks that a bug bounty may help with.

…agreed, bug bounty programs have real value…

And security.txt is just another optional avenue for bug bounties.

…bug bounties I agree with, spending cycles on garden variety spammers I don’t…

Any bug bounty program is going to require resources.

Knowing how to properly automate the management of an inbox is a core competency for any security operations team member.

If your security team is full of people who only know how to do things manually, they don’t need to be there.

This reminds me of an old argument that some people try to make.

“This sensor produces too many false positives so maybe we just remove the sensor”

Those people get moved elsewhere. Then we bring on somebody who knows how to tune the sensor to reduce false positives and knows how to automate to handle the rest.

If anyone is really that concerned about getting spammed because of a bug bounty communications channel, and they just want to remove it, that’s not the right answer. They might get some additional training on how to set up email filter rules.

…the entire purpose of security.txt and bug bounty programs in general is to have human review…

…if you rely too much on automation you have in effect idiot lights…

You can say the same for any firewall logs or authentication logs, malware alerts…

They all need human manual review at some point.

First you have to get the data, then you have to parse and filter through automation, and then you review with a security analyst.

But no security expert seems to recommend that we just don’t collect the data because it might be too hard to review without some automation.

It’s just excuse making.

Some of my problems with `security.txt` are:

* It assumes/requires you run a web server on the vulnerable host, which itself might introduce further vulnerabilities to your network.

* It has no discoverability, which means 404 errors for people randomly probing for it if it doesn’t exist. There’s no reason that kind of nonsense should be part of a modern standard.

* Its format appears to be modeled after robots.txt, which is *not* a well thought out data format. Go the extra step and require it be YAML, or anything else that *is* properly machine readable.

As others have noted, there are already plenty of ways to reach host administrators. There should be a WHOIS abuse contact for the IP address. If it has an rDNS entry, *that* should lead to an abuse contact. If there’s a web server that can be associated with any of that info, it should have a “Contact Us” link. If none of that exists (and/or they’re unwilling to route critical messages internally to the right department), they just don’t care about security, and so would also not bother with a `security.txt` file.

Adding a text file to a web site is just so much posturing. I’ve *already* tried reporting abuse to companies, only to get back (at *most*) the boilerplate “we will investigate and take the action *we* deem appropriate”. Given the amount of scanning for vulnerabilities I see in my server logs, that’s just not working. Why would I bother reporting new vulnerabilities when it is clear you don’t care about existing exploits? Let me know when you’re actually going to compensate me for the attacks you’re *already* launching against me.

I think this is a new take on an old topic: under FIRST (https://www.first.org/about/) and TF-CSIRT Trusted Introducer (https://www.trusted-introducer.org/), an accredited cybersecurity team shall present its RFC 2350 information on a public company web page.

The idea behind security.txt is the same, probably aimed at a potentially larger population, but the simple way remains publishing the RFC 2350 information on a public company web page.

8% of the Fortune 100? Why not just say “8”? Didn’t sound big enough?

Brian Krebs – Curious about your stance on election hacking. VERY surprised to have seen no mention of election cyber security, which is kind of a big thing regardless of political affiliation. It’s concerning to see nothing at all about it on a trusted cyber security site. I figured you would have been excited to investigate whether or not any of this happened. Any reason for shying away from such an important topic?

It’s not surprising to see nothing here.

Because there’s nothing there.

Like it or not, covering stories that are wholly made up out of nothing for political reasons still legitimizes those claims to some extent.

It’s best for professionals to stay professional and only cover the things which have substance.

Complete fabrications should not even be given a mention, because the side seeking legitimacy will amplify, exaggerate and twist anything coming from a reputable source.

“Any reason for shying away from such an important topic?”

Because proving a negative isn’t really possible. Investigating phantom anecdotal (BS) evidence to warrant exploring the broad notion isn’t his style. That isn’t to say that all voting machines are secure, far from it, but to actually weaponize their flaws in a massive campaign sufficient to even slightly deflect an election result with so many different vendors, systems, locations, etc is going to require the kind of effort that leaves a sizeable footprint. No actual footprint whatsoever, no actual evidence, no actual reason to suspect anything but the most piddly-didley lone wolf fraud attempts, no actual story to “report” on really. Brian is reporting on ACTUAL security exploits and campaigns. Theoreticals are perhaps interesting but not really in the same vein as security journalism. The broad consensus of verifiable sources of information is that it didn’t actually happen the way some (formerly crack smoking) pillow vendors suggest, and if it did you can bet they would be very excited to report on it indeed. Part of epistemology (the study of knowledge) is how you ask a question, and whether or not you have an expected outcome in mind when you do so. Biases are human, journalism is a construct of imperfect beings, but it is distinct from entirely speculative punditry even as both are intending to sell interesting copy. You understand all this already, I’m sure.

If it’s not a bug it doesn’t meet the security response requirements. Many companies have terrible security reporting practices anyway, so if you do report a major security vulnerability, be prepared that it may not go anywhere, no matter how many times you report it. They are probably understaffed, under resourced, and overwhelmed with said ‘vulnerability scanner’ reports.

Luckily companies wouldn’t leave source code and packages in development publicly exposed where they can be very easily accessed, so the need to report such lapses is moot.

https://krebsonsecurity.com/.well-known/security.txt

“Nothing Here!

Kindly search your topic below or browse the recent posts.”

Brian, where’s your security.txt!

Since we set up security.txt for all our customers, we have received dozens of reports from dubious “security researchers” who would like a bounty for pointing out totally obvious “problems” like not having a DMARC record, or having some WordPress API enabled that could possibly be abused, ignoring the fact that said API is actually used and is to some degree protected from abuse by a plugin. Out of dozens of reports, I’ve never had one actual security issue.

Why wouldn’t LinkedIn or something equivalent work, as it would show the background, credentials, connections, etc. of the party reaching out? It’s like a social network PKI in some ways.

https://hackerone.com/security.txt says it all.

OK, imagine when someone actually replaces the security.txt file with their own. It’s gonna be fun, making fun of this.

I used to work in everything related to cyber security. I must say, all of my clients just got lucky, and yeah, the software worked against the noob actors. Pure luck that no one picked them as a real target. But hey, really, no one can fix the lack of brains in the heads of employees. Whatever software or checks you run, there is no protection against stupid people. Be mad at hackers or whatever, that’s the fact.

My product did well, but I couldn’t stand that lack of brains, the complete lack of mind in those people, even if it was only once a year. And creating security.txt really reminds me of them. It’s a very stupid idea, ready to be exploited.

Not sure if gotsecuritytxt is reliable. I just manually checked an organization that definitely has a security.txt file (for months) located in the /.well-known/ path, and the domain.com/security.txt properly redirects to the file too.

I just had gotsecuritytxt scan for it, and it says 404, no contact info. But I can get to the file from multiple places on the Internet.

So it’s possible gotsecuritytxt is broken for new scans, or maybe it’s on a block list now for too much scanning.

IDK, but it seems easier to just check manually.
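For anyone who wants to script that manual check, here is a minimal Python sketch using only the standard library. It tries the two locations mentioned in the article and follows redirects, which `urllib` does by default. The function names are hypothetical, and this says nothing about how gotsecuritytxt itself works.

```python
import urllib.error
import urllib.request


def candidate_urls(domain):
    """Return the two conventional locations, preferred one first."""
    return [
        f"https://{domain}/.well-known/security.txt",
        f"https://{domain}/security.txt",
    ]


def http_status(url, timeout=10):
    """Return the final HTTP status for url, or None on network failure."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        return err.code  # e.g. 404 when the file does not exist
    except (urllib.error.URLError, OSError):
        return None  # DNS failure, timeout, TLS error, and so on


def find_security_txt(domain, status_of=http_status):
    """Return the first candidate URL that answers 200, else None."""
    for url in candidate_urls(domain):
        if status_of(url) == 200:
            return url
    return None
```

Calling `find_security_txt("example.com")` from two different networks is a quick way to tell a missing file apart from a scanner that is being blocked. The `status_of` parameter exists only so the lookup can be swapped out for testing.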

Depending on the web server configuration, hackers of a compromised web site may be able to take advantage of this by creating a malicious page (or redirect page) at https://example.com/security.txt/index.html and if someone visits https://example.com/security.txt they will get the malicious page.
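One partial guard against that scenario, sketched below in Python, is for whoever reads the file to confirm that the response is plain text and carries a `Contact` field before trusting it. The specification (since published as RFC 9116) calls for the file to be served as `text/plain`, so an HTML page sitting at that path would fail the check. `looks_like_security_txt` is a hypothetical helper, and it would not catch an attacker who serves a plain-text file with their own contact details.

```python
def looks_like_security_txt(content_type, body):
    """Heuristic: plain-text response with at least one Contact field."""
    # Drop parameters such as "; charset=utf-8" before comparing.
    media_type = content_type.split(";", 1)[0].strip().lower()
    if media_type != "text/plain":
        return False
    return any(
        line.strip().lower().startswith("contact:")
        for line in body.splitlines()
    )
```

The two arguments are the `Content-Type` header value and the decoded response body, however the caller chooses to fetch them.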
