Phishers are enjoying remarkable success using text messages to steal remote access credentials and one-time passcodes from employees at some of the world’s largest technology companies and customer support firms. A recent spate of SMS phishing attacks from one cybercriminal group has spawned a flurry of breach disclosures from affected companies, which are all struggling to combat the same lingering security threat: The ability of scammers to interact directly with employees through their mobile devices.

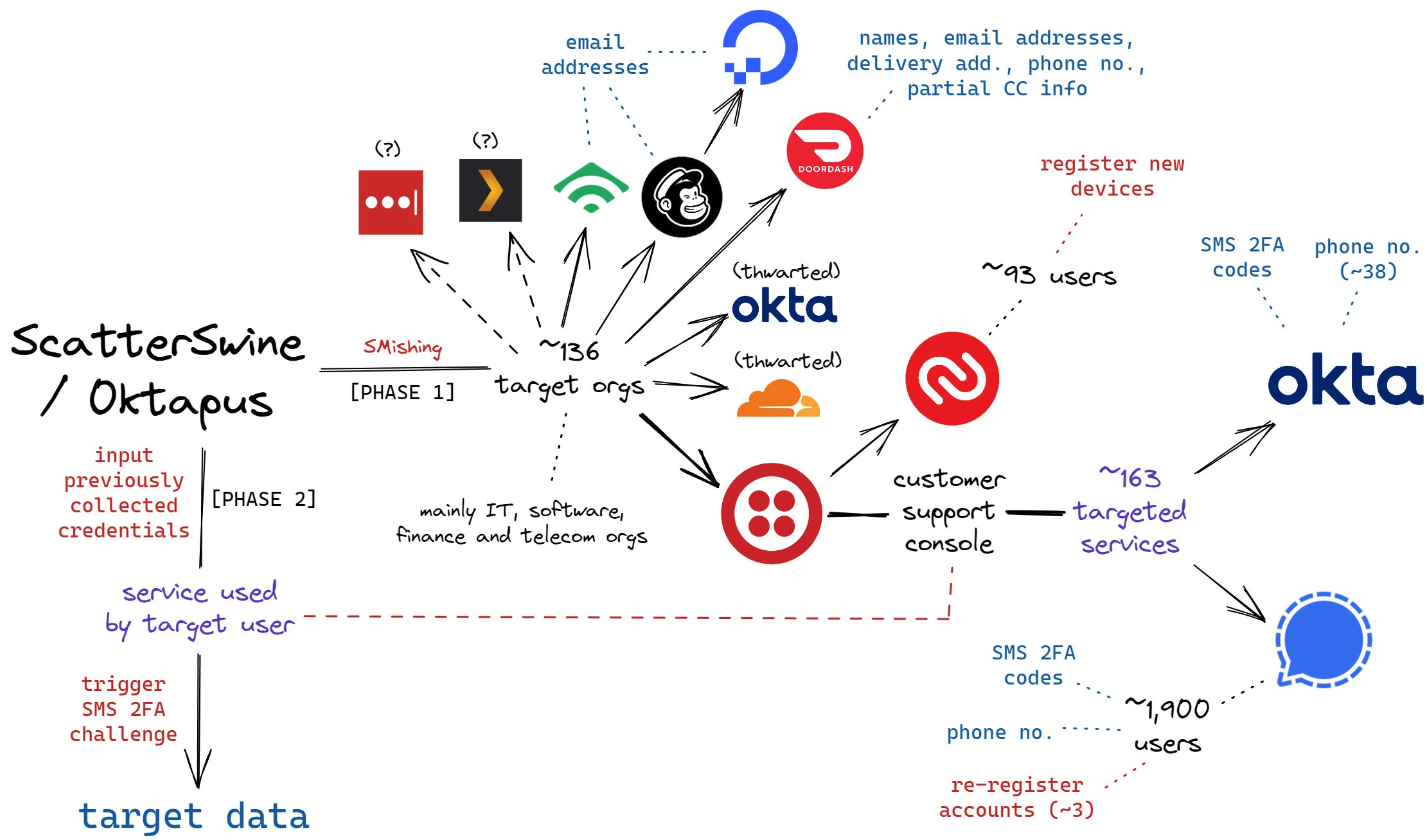

In mid-June 2022, a flood of SMS phishing messages began targeting employees at commercial staffing firms that provide customer support and outsourcing to thousands of companies. The missives asked users to click a link and log in at a phishing page that mimicked their employer’s Okta authentication page. Those who submitted credentials were then prompted to provide the one-time password needed for multi-factor authentication.

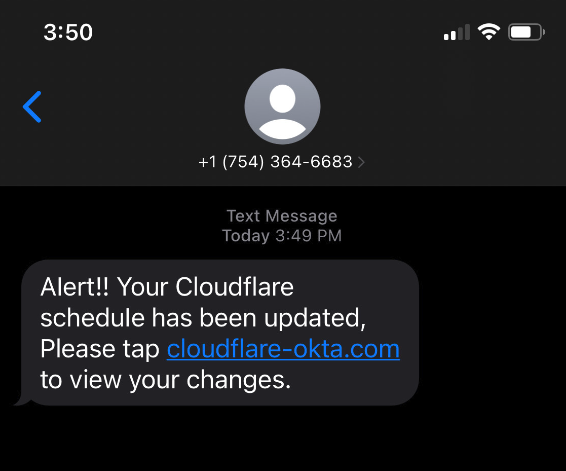

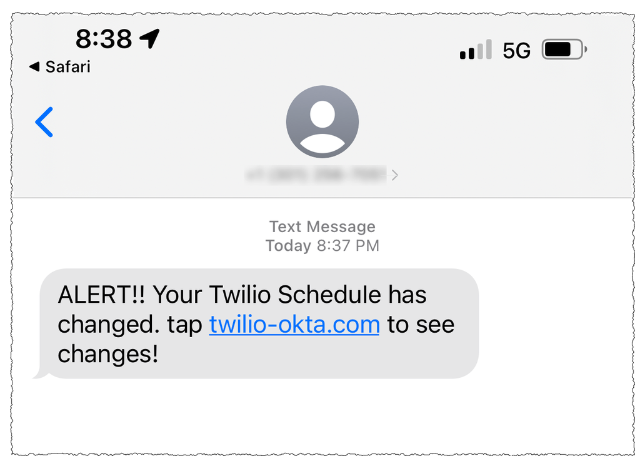

The phishers behind this scheme used newly-registered domains that often included the name of the target company, and sent text messages urging employees to click on links to these domains to view information about a pending change in their work schedule.

The phishing sites leveraged a Telegram instant message bot to forward any submitted credentials in real-time, allowing the attackers to use the phished username, password and one-time code to log in as that employee at the real employer website. But because of the way the bot was configured, it was possible for security researchers to capture the information being sent by victims to the public Telegram server.

This data trove was first reported by security researchers at Singapore-based Group-IB, which dubbed the campaign “0ktapus” for the attackers targeting organizations using identity management tools from Okta.com.

“This case is of interest because despite using low-skill methods it was able to compromise a large number of well-known organizations,” Group-IB wrote. “Furthermore, once the attackers compromised an organization they were quickly able to pivot and launch subsequent supply chain attacks, indicating that the attack was planned carefully in advance.”

It’s not clear how many of these phishing text messages were sent out, but the Telegram bot data reviewed by KrebsOnSecurity shows they generated nearly 10,000 replies over approximately two months of sporadic SMS phishing attacks targeting more than a hundred companies.

A great many responses came from those who were apparently wise to the scheme, as evidenced by the hundreds of hostile replies that included profanity or insults aimed at the phishers: The very first reply recorded in the Telegram bot data came from one such employee, who responded with the username “havefuninjail.”

Still, thousands replied with what appear to be legitimate credentials — many of them including one-time codes needed for multi-factor authentication. On July 20, the attackers turned their sights on internet infrastructure giant Cloudflare.com, and the intercepted credentials show at least three employees fell for the scam.

Image: Cloudflare.com

In a blog post earlier this month, Cloudflare said it detected the account takeovers and that no Cloudflare systems were compromised. Cloudflare said it does not rely on one-time passcodes as a second factor, so there was nothing to provide to the attackers. But Cloudflare said it wanted to call attention to the phishing attacks because they would probably work against most other companies.

“This was a sophisticated attack targeting employees and systems in such a way that we believe most organizations would be likely to be breached,” Cloudflare CEO Matthew Prince wrote. “On July 20, 2022, the Cloudflare Security team received reports of employees receiving legitimate-looking text messages pointing to what appeared to be a Cloudflare Okta login page. The messages began at 2022-07-20 22:50 UTC. Over the course of less than 1 minute, at least 76 employees received text messages on their personal and work phones. Some messages were also sent to the employees family members.”

On three separate occasions, the phishers targeted employees at Twilio.com, a San Francisco-based company that provides services for making and receiving text messages and phone calls. It’s unclear how many Twilio employees received the SMS phishes, but the data suggest at least four Twilio employees responded to a spate of SMS phishing attempts on July 27, Aug. 2, and Aug. 7.

On that last date, Twilio disclosed that on Aug. 4 it became aware of unauthorized access to information related to a limited number of Twilio customer accounts through a sophisticated social engineering attack designed to steal employee credentials.

“This broad based attack against our employee base succeeded in fooling some employees into providing their credentials,” Twilio said. “The attackers then used the stolen credentials to gain access to some of our internal systems, where they were able to access certain customer data.”

That “certain customer data” included information on roughly 1,900 users of the secure messaging app Signal, which relied on Twilio to provide phone number verification services. In its disclosure on the incident, Signal said that with their access to Twilio’s internal tools the attackers were able to re-register those users’ phone numbers to another device.

On Aug. 25, food delivery service DoorDash disclosed that a “sophisticated phishing attack” on a third-party vendor allowed attackers to gain access to some of DoorDash’s internal company tools. DoorDash said intruders stole information on a “small percentage” of users that have since been notified. TechCrunch reported last week that the incident was linked to the same phishing campaign that targeted Twilio.

This phishing gang apparently had great success targeting employees of all the major mobile wireless providers, but most especially T-Mobile. Between July 10 and July 16, dozens of T-Mobile employees fell for the phishing messages and provided their remote access credentials.

“Credential theft continues to be an ongoing issue in our industry as wireless providers are constantly battling bad actors that are focused on finding new ways to pursue illegal activities like this,” T-Mobile said in a statement. “Our tools and teams worked as designed to quickly identify and respond to this large-scale smishing attack earlier this year that targeted many companies. We continue to work to prevent these types of attacks and will continue to evolve and improve our approach.”

This same group saw hundreds of responses from employees at some of the largest customer support and staffing firms, including Teleperformanceusa.com, Sitel.com and Sykes.com. Teleperformance did not respond to requests for comment. KrebsOnSecurity did hear from Christopher Knauer, global chief security officer at Sitel Group, the customer support giant that recently acquired Sykes. Knauer said the attacks leveraged newly-registered domains and asked employees to approve upcoming changes to their work schedules.

Knauer said the attackers set up the phishing domains just minutes in advance of spamming links to those domains in phony SMS alerts to targeted employees. He said such tactics largely sidestep automated alerts generated by companies that monitor brand names for signs of new phishing domains being registered.

“They were using the domains as soon as they became available,” Knauer said. “The alerting services don’t often let you know until 24 hours after a domain has been registered.”
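The pattern Knauer describes (a brand name embedded in a domain registered only minutes earlier) can be expressed as a simple check. The following is a minimal sketch, assuming the defender already has a domain's registration timestamp at hand; the function name, the brand list, and the example domain are all hypothetical and are not drawn from the campaign data.

```python
from datetime import datetime, timedelta, timezone

def is_suspicious(domain, created, now, brands, max_age=timedelta(hours=24)):
    """Return True if the domain embeds a watched brand name and is newly registered."""
    name = domain.lower()
    embeds_brand = any(brand.lower() in name for brand in brands)
    # A negative age (clock skew, bad data) is not treated as "new".
    is_new = timedelta(0) <= (now - created) <= max_age
    return embeds_brand and is_new

# Hypothetical values for illustration only.
now = datetime(2022, 7, 1, 12, 40, tzinfo=timezone.utc)
created = datetime(2022, 7, 1, 12, 0, tzinfo=timezone.utc)
flagged = is_suspicious("examplecorp-okta.example", created, now, ["examplecorp"])
```

A check like this is only as timely as the registration data feeding it. If that data arrives a day late, as Knauer says it often does, a domain used within minutes of registration will already have done its work.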

On July 28 and again on Aug. 7, several employees at email delivery firm Mailchimp provided their remote access credentials to this phishing group. According to an Aug. 12 blog post, the attackers used their access to Mailchimp employee accounts to steal data from 214 customers involved in cryptocurrency and finance.

On Aug. 15, the hosting company DigitalOcean published a blog post saying it had severed ties with Mailchimp after its Mailchimp account was compromised. DigitalOcean said the Mailchimp incident resulted in a “very small number” of DigitalOcean customers experiencing attempted compromises of their accounts through password resets.

According to interviews with multiple companies hit by the group, the attackers are mostly interested in stealing access to cryptocurrency, and to companies that manage communications with people interested in cryptocurrency investing. In an Aug. 3 blog post from email and SMS marketing firm Klaviyo.com, the company’s CEO recounted how the phishers gained access to the company’s internal tools, and used that to download information on 38 crypto-related accounts.

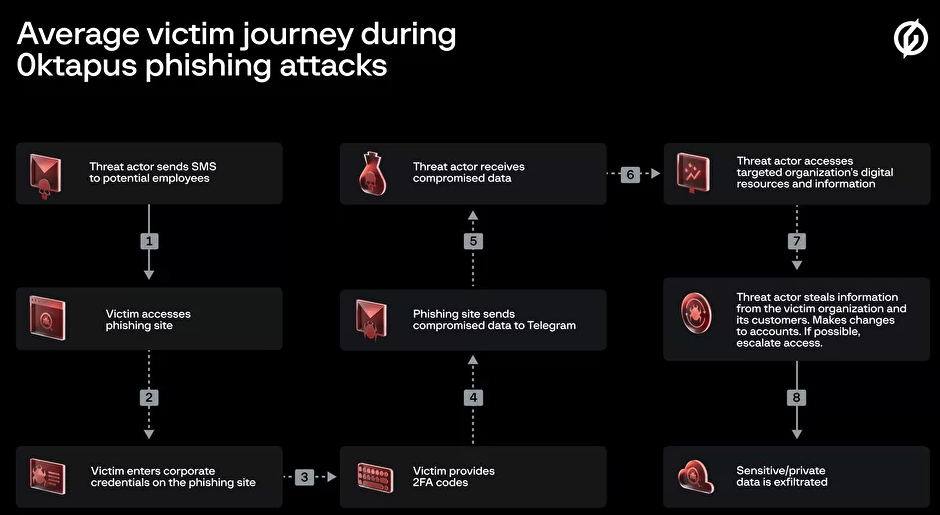

A flow chart of the attacks by the SMS phishing group known as 0ktapus and ScatterSwine. Image: Amitai Cohen for Wiz.io. twitter.com/amitaico.

The ubiquity of mobile phones became a lifeline for many companies trying to manage their remote employees throughout the Coronavirus pandemic. But these same mobile devices are fast becoming a liability for organizations that use them for phishable forms of multi-factor authentication, such as one-time codes generated by a mobile app or delivered via SMS.

As the success of this phishing group shows, this type of data extraction is now being massively automated, and employee authentication compromises can quickly lead to security and privacy risks for the employer’s partners or for anyone in their supply chain.

Unfortunately, a great many companies still rely on SMS for employee multi-factor authentication. According to a report this year from Okta, 47 percent of workforce customers deploy SMS and voice factors for multi-factor authentication. That’s down from 53 percent that did so in 2018, Okta found.

Some companies (like Knauer’s Sitel) have taken to requiring that all remote access to internal networks be managed through work-issued laptops and/or mobile devices, which are loaded with custom profiles that can’t be accessed through other devices.

Others are moving away from SMS and one-time code apps and toward requiring employees to use physical FIDO multi-factor authentication devices such as security keys, which can neutralize phishing attacks because any stolen credentials can’t be used unless the phishers also have physical access to the user’s security key or mobile device.

This came in handy for Twitter, which announced last year that it was moving all of its employees to using security keys, and/or biometric authentication via their mobile device. The phishers’ Telegram bot reported that on June 16, 2022, five employees at Twitter gave away their work credentials. In response to questions from KrebsOnSecurity, Twitter confirmed several employees were relieved of their employee usernames and passwords, but that its security key requirement prevented the phishers from abusing that information.

Twitter accelerated its plans to improve employee authentication following the July 2020 security incident, wherein several employees were phished and relieved of credentials for Twitter’s internal tools. In that intrusion, the attackers used Twitter’s tools to hijack accounts for some of the world’s most recognizable public figures, executives and celebrities — forcing those accounts to tweet out links to bitcoin scams.

“Security keys can differentiate legitimate sites from malicious ones and block phishing attempts that SMS 2FA or one-time password (OTP) verification codes would not,” Twitter said in an Oct. 2021 post about the change. “To deploy security keys internally at Twitter, we migrated from a variety of phishable 2FA methods to using security keys as our only supported 2FA method on internal systems.”
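One way to picture how a security key "differentiates" sites: in WebAuthn, the browser (not the user) records the origin of the page requesting authentication in a structure called `clientDataJSON`, and the server rejects any response whose origin is not its own. The sketch below covers only that server-side comparison and is simplified on purpose. Signature verification and the other checks a real relying party performs are omitted, and the hostnames and function names are hypothetical.

```python
import base64
import json

def b64url(data: bytes) -> str:
    """Base64url-encode without padding, as WebAuthn does for the challenge."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")

def check_client_data(client_data_json: bytes, expected_challenge: bytes,
                      expected_origin: str) -> bool:
    """Check the type, challenge, and origin fields of a login response.

    Simplified: a real server must also verify the authenticator's
    signature, which covers a hash of this same clientDataJSON.
    """
    data = json.loads(client_data_json)
    return (
        data.get("type") == "webauthn.get"
        and data.get("challenge") == b64url(expected_challenge)
        and data.get("origin") == expected_origin
    )
```

Because the origin is filled in by the browser and covered by the key's signature, a response produced on a look-alike phishing domain carries the phishing domain's origin and fails this comparison, no matter what the employee believes they are logging in to.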

Update, 6:02 p.m. ET: Clarified that Cloudflare does not rely on TOTP (one-time multi-factor authentication codes) as a second factor for employee authentication.

It’s scandalous that US banks still rely on SMS-based 2FA for online banking. My bank finally allowed 2FA using an RSA security key (Let me guess: They were required to provide this for federal government employees?). Apparently using an authenticator app is beyond the capabilities of their underpaid IT staff. But the best part: Even if you get (pay for) the RSA key, you cannot turn off 2FA with SMS! What’s the freaking point of paying for a more secure 2FA, if they won’t turn off the insecure 2FA?

2FA codes from authenticator apps or hardware tokens are vastly superior to 2FA SMS codes, but they would not have mitigated this attack. It’s just as easy to phish a code from another app or token as it is from the text messaging app.

The real mitigation is the “security key” FIDO devices that must be plugged into the computer/phone via USB or Bluetooth. That two-way communication allows the key to authenticate the website/service that is requesting 2FA authentication.

A big part of this problem is that physical keys are very difficult to administer and in most businesses the business side of the house can squash security efforts because they carry more clout within the organization and are not willing to deal with the discomfort of using physical keys such as FIDO/WebAuthn based tokens/apps.

Convenient methods of second Factor authentication are an unfortunate reality for most businesses and far superior to ID/PW only.

Note that push to accept second Factor mechanisms are subject to the same type of problem and in fact even more susceptible because most people are stupid enough to press accept even when not in a login sequence. This can be mitigated by using technologies like symbol to accept but still subject to the phishing exploit described in this article.

Deploying technologies such as geovelocity can often help with this type of problem but is by no means foolproof. Challenges exist when the user logs in infrequently and with determining a secondary challenge that is also phish proof.
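For readers unfamiliar with the term, a geovelocity check compares the locations and times of two consecutive logins and flags the pair if the implied travel speed is implausible. Here is a minimal sketch, assuming each login already has an approximate latitude and longitude (for example from IP geolocation, which is itself imprecise); the 900 km/h threshold and all names are illustrative assumptions.

```python
import math
from dataclasses import dataclass
from datetime import datetime, timezone

EARTH_RADIUS_KM = 6371.0

@dataclass
class Login:
    lat: float
    lon: float
    when: datetime

def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points, in kilometres."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dphi = p2 - p1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))

def is_impossible_travel(prev: Login, curr: Login, max_kmh: float = 900.0) -> bool:
    """Flag a login pair whose implied speed exceeds max_kmh (roughly airliner speed)."""
    distance = haversine_km(prev.lat, prev.lon, curr.lat, curr.lon)
    hours = (curr.when - prev.when).total_seconds() / 3600
    if hours <= 0:
        # Same instant or out-of-order timestamps: any distance is suspect.
        return distance > 0
    return distance / hours > max_kmh
```

As noted above, this is not foolproof: an infrequent user has no recent login to compare against, and an attacker who relays the session through a nearby proxy produces no implausible jump.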

One mechanism that can significantly help with this problem is to require a cookie on the client device prior to allowing a less secure second factor method to be used. The same issue of what to do next in the authentication flow is still a challenge. Informing the user of the geolocation of the login device, warning the user via text that a suspicious login is occurring, or using some form of knowledge-based secondary authentication mechanism (as horrible as KBA is) may also help to thwart this type of exploit. KBA should never be a standalone second factor mechanism. It should only be used in conjunction with some other second factor.

For corporate based machines there’s really no excuse for not using certificate based authentication with cookies. It can be hard to deploy at first but once the infrastructure is in place it’s not bad. This all depends on having strict browser control on the client.

There’s no question that WebAuthn is the most secure mechanism available today and frankly can be easily deployed to phones as the token, but the challenge there is that some devices are too old to support the technology (a solution needs to work for all users) and you can’t make consumer users install applications on their phone.

Any more ideas from the gallery?

Facebook gave me two computers, but only one tiny USB FIDO key… which is virtually impossible to move between the computers without breaking your fingernail. I asked for another key, they told me one was all I needed.

There should be a hole to put a lanyard string. Or you can get a 4 inch USB extender.

Trust a 4 inch USB extender with everything.

https://en.wikipedia.org/wiki/BadUSB

Just get your company IT supplier to buy it. They may balk at buying another security key, but peripherals are a simple purchase.

It’s easy to avoid BadUSB just by buying from trusted sources. I doubt BadUSB would be sold from Best Buy. If you’re that paranoid, you should already be afraid about what’s inside your computer.

I am.

It’s generally not the bank’s fault, it’s more often the third party online banking provider who determines what methods of 2FA are available. Fortunately my bank (who I both use and work for) offers VIP 2FA (would rather it not be a proprietary system, but it beats SMS).

It’s the bank’s fault since they get to choose their online provider.

The RSA SecurID code generator is as phishable as apps like Google Authenticator. For real security you need a U2F key like the Yubikey. Unfortunately very few platforms support it, but Google does and so does Bank of America.

It may bring a higher level of security in regards to the authentication process but can you trust the device itself? It is a USB physical device you are plugging into a computer. In general sticking 3rd party devices into a computer wouldn’t be my first idea for how to approach improving security. Are you sure it hasn’t been tampered with? In a world where there might be many such devices which providers can you trust? Is there risk moving it from computer to computer? If nothing else you need to allow USB devices to be used on the system and load the drivers. This last point might not seem particularly valid since it is pretty much a given that USB is active on most desktop devices but that doesn’t have to be the case. I have actively disabled it in past on such systems that still had PS2 ports and always on servers.

If you bought the USB key, direct from the manufacturer and not from eBay, I think it’s trusted enough.

If you don’t trust the USB device you bought, can you even trust the computer hardware?

You can theorize about USB devices being tampered with, to install malware like keystroke injection (badUSB, O.MG, Ducky, etc.) But security keys from RSA, Yubikey and other trusted makers are fairly tamper resistant. Small form factor and small storage make it impractical to replace with malware. Much easier to put an O.MG inside a USB keyboard.

But if you’re extra paranoid, use Qubes which will segment the USB host PCI hardware to its own VM and manage it with rules like a firewall. It still allows yubikey to work as a security key, but will prompt or auto-reject if the USB device enumerates as something new, like a network adapter or HID.

90%-95% of MFA can be easily phished around. We need to do better. Buy or use only phishing-resistant MFA when you can. Here’s my list of all phishing-resistant MFA that I’m aware of: https://www.linkedin.com/pulse/my-list-good-strong-mfa-roger-grimes

When you read Mr Grimes’ book on MFA and then at the very same time you see the reply from someone whose name is Roger A. Grimes on another MFA issue 0_0

Amazing book btw so far!

Just an idle thought while reading this: Isn’t this something that Blackberry handled by routing all phone traffic through a central server about twenty years ago? So all SMS messages were filtered and verified?

No. Blackberry only routed web traffic. SMS and Phone calls can’t be routed that way. The carrier controls that, SS7 stuff.

But this article isn’t even about SMS 2FA codes. It’s about the phishing of 2FA codes regardless of the transport method.

With SMS, hackers can SIM swap to get your SMS codes without you knowing.

Phishing is much more of a threat, because it attacks the human being. An attacker doesn’t need to swap your SIM, if they phish you, then you are tricked into giving up the credentials and 2FA codes.

No registration should record a cell ph# in the clear to begin with — without that critical datapoint SMS cannot reach their target.

What registration are you referring to?

SMS communications are spoofed too easily. The only way that zero trust protocols can be enabled is if the sender is 100% verifiable and authenticated by the receiving device; all else are band aids on a festering wound. Once Quants are a reality hiding behind pseudo public key firewalls are going to have a lot of red eyes out there. The only way is symetric encryption

This is nonsense technobabble.

Delete this url from your bookmarks. It is beyond your ken.

I was fully on-board until the last two sentences about quantum pseudo public key firewalls. I have no idea what that is or why “symetric [sic] encryption” will fix it.

The bit about zero trust reads true but I’m afraid that it’s only a viable option for employees, not so much for customers. The article even mentions one stab at it.

[quote]

Some companies (like Knauer’s Sitel) have taken to requiring that all remote access to internal networks be managed through work-issued laptops and/or mobile devices, which are loaded with custom profiles that can’t be accessed through other devices.

[/quote]

Yeah, even the bit about “zero trust” didn’t make sense. It’s a thing, but is usually misunderstood and used only as a buzz word.

Yep, it most certainly is.

I regularly remind myself that whatever email or text notifications I get… I will ALWAYS visit by clicking my own bookmarked site… or if I need to call anyone back, I will use the contact info stored in my password manager. I also use the following as my layered defense:

1. The browser I use for reading email/text has the NoScript extension. Only specific whitelisted sites are allowed, Gmail for example. So even if I inadvertently clicked an email or text link… NoScript should stop fake sites from functioning.

2. I always sign in as ‘standard’ user – not admin. So again, if I inadvertently clicked a bad link, Windows should prevent anything accidentally downloaded from installing/running. And I take care to ensure that my OS and apps are up to date.

That is good hygiene that all users should be doing. Thank you.

Although I am not sure if client javascript was used on this phishing page, so noscript may not have blocked it.

Having JavaScript off probably wouldn’t have blocked it (modern sites have switched away from using JavaScript on login screens), but having images off by default should have been a good red flag.

In Chrome, this is controllable via: chrome://settings/content

In addition to:

* Don’t allow sites to use JavaScript

There’s:

* Don’t allow sites to show images

And if both of these are selected by default then it should be almost always apparent when you visit any site that isn’t one you’ve already visited and previously vetted.

—

If you let your browser autofill _drop_down_ suggest the username, then that’d also be a good hint when you visit a phishing site.

If you’re willing to take the risk of device compromise, you could also rely on the browser storing and completing the password, since your browser shouldn’t give the password to the wrong domain. But some people will object to that advice…

—

I thought I recently saw Google Chrome warning about visiting a domain that had a recent registration, but I can’t find it mentioned in search results or chrome://flags

Very good advice. I never considered the images that don’t load could be a dead giveaway. Is the “don’t allow sites to show images” setting unique to noscript? Does it block all images or just external images (referenced with a link)? Does it block same domain image references? What about images that are inline (base64 encoded within the HTML)? I know that’s how we bypass email clients that block the loading of images, we just put them inline for phishing exercises.

Certain malware could strip out stored creds from various in-browser mgrs.

“I always sign in as ‘standard’ user – not admin. So again, if I inadvertently clicked a bad link, Windows should prevent anything accidentally downloaded from installing/running.”

Yeah, no. It can still install/run, but only in your user context. It’s good practice, but certainly not a magik bullet.

I think this is a really handy method of taking your own actions into account and making sure the decision you are making is a safe and protective one ahead of time. It is also good to note that you have it bookmarked so the link is not leading to a different site with a different ending url.

I use a RSA type key for financial stuff. I was offered the choice of using a TOTP app but I said to send me a new key when the old battery gave out. Totally air gapped.

But reading the article it seems to me that the real problem was clicking on a link being offered to you. Rule number one is if you didn’t ask for it, don’t click it.

Next up, I’m baffled that any company would use Telegram. Their security has long been known to be no good.

The RSA hard tokens that display 2FA codes are certainly protected from malware that could infect your phone. But they have had their share of breaches too. RSA seeds were leaked a few years back.

In corporate environments today, and especially in pandemic work from home times… it is now the reality that employees will be contacted directly by SaaS providers that their employer has onboarded. Okta is one example. In many cases, not all employees are aware of all the cloud providers that are being used by the company. In this era of “cloud first”, a company could be using hundreds, and each employee cannot know which ones and what the exact URL would be.

Lastly, the company doesn’t use Telegram. The attacker uses Telegram to backhaul the credentials from the phishing site to the attackers. The victim isn’t even aware Telegram is in use.

Unfortunately, an RSA key would not help in phishing situations like these. The problem is that code generation is completely independent from the place you’re entering the code. There’s nothing stopping you from entering that code into a completely unrelated site, e.g. a phishing site. And if the person running that site already has your other credentials, they can log in as you and grab your authentication token.

RSA tokens don’t provide much additional safety over TOTP apps. They use a similar type of algorithm behind the scenes to generate the codes. The only advantage is that you don’t have to worry about phone-based malware or implants stealing your TOTP seeds.
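To make the point about code generation concrete, here is a minimal sketch of the open TOTP standard (RFC 6238, built on HOTP from RFC 4226) that authenticator apps commonly implement. RSA's SecurID tokens use their own algorithm, which is not shown here. Note the inputs: a shared secret and the current time. Nothing identifies the site where the code will be typed.

```python
import hashlib
import hmac
import struct

def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HOTP (RFC 4226): HMAC-SHA1 over an 8-byte counter, then dynamic truncation."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)

def totp(secret: bytes, unix_time: int, step: int = 30, digits: int = 6) -> str:
    """TOTP (RFC 6238): HOTP where the counter is the number of elapsed time steps."""
    return hotp(secret, unix_time // step, digits)

# RFC 6238 test vector: ASCII secret "12345678901234567890", time 59, 8 digits.
code = totp(b"12345678901234567890", 59, digits=8)  # "94287082"
```

Since the code depends only on the secret and the clock, a code entered into a phishing page is equally valid on the real site until its time window closes, which is what the real-time relay in this campaign exploited.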

The only solution to this kind of 2FA phishing is to have 2FA OTP generation linked to the site. If you go to a different site, there’s no way to generate a valid OTP code so you can’t get phished.

There are many different ways to provide security, but many of the truly secure ways require an inconvenience. The phishing scams get a lot of people. My college recently had a phishing scam happen. I do not know how many students were affected, but it was enough that they deleted the messages from our school emails.

Anyone open to learning more about the only MFA on the market without shared secrets in its design? Comment if yes.

(Yes, it’s real and exists — and is trusted by BofA, CVS Health Aetna, WellsFargo, Goldman Sachs, and more.)

Interested.

Yet another Yubi advert.

All 2fa methods including FIDO can be exploited because they do not have correct time out lock out routines to prevent exploitation of fall back to stop lockout of users.

What does time out lockout have to do with any of this? The hackers are not trying to brute force, and the credentials and OTP code are retransmitted immediately.

Fido cannot be exploited through this phishing method.

Maybe saying if you leave a keyed session open and are somehow hijacked there is no short duration default timer to end that session so for purposes it’s compromised until closed out and the user may not realize?

Still makes no sense to blame the authentication method for session control. “All 2fa methods including FIDO…” has nothing to do with session management. If a session is compromised, it is not the fault of 2FA or any other authentication method.

It’s like blaming the key/lock mechanism because of a faulty auto-close hinge on the door.

It isn’t blaming any one aspect in particular to note that a session can still be hijacked and the “secure authed system” sum of all parts is (sometimes trivially…) undermined despite a fancy lock. Any session per analogy is the door left unlocked until closed. That’s all (I think?) OP was getting at, and per the example of an auto-close hinge it would effectively increase the likelihood of the door remaining locked and secure only to the keyed user – that’s why they’re in use all over the place in physical security. Without the obligatory religious swordfight I think the observation can stand and whether or not it’s an “attack” in your view on specific keying methods or what is not particularly related to that point as it’s undermined by those circumstances.

Reading the OP…

“All 2fa methods including FIDO can be exploited because they do not have”

If the OP said “the system” can still be exploited, I’d be inclined to agree. But it seems he was focused on attacking the 2FA methods like FIDO as if to splash cold water on the obviously correct security control to prevent these kinds of phishing attacks.

Seems like you’re stretching Louis Leahy’s contrarian comment to defend him.

And the OP doesn’t sound like he’s referring to session management as suggy claims. “prevent exploitation of fall back to stop lockout of users”.

To me, that sounds like the common practice of “fall back” authentication mechanism. Users who lose their tokens or get a new phone. That kind of thing. Not the session timing out. Which as Still No pointed out, doesn’t have anything to do with the authentication piece.

Going back to the OP’s claim. A vulnerability in a company’s process that may allow someone to socially engineer a technician to reset 2FA, the “fall back”, is still not an exploit of the 2FA.

So what the OP appears to be arguing is that doors are exploitable due to the existence of windows. If he claimed the room/building is still insecure because of the windows in spite of a strong door, I would agree with that. But alas, that is not what Louis said. He specifically targeted 2FA like FIDO keys as if they were the problem.

“is still not an exploit of the 2FA” – An unlocked door is not said to be “exploited” by someone unauthorized using it because it was propped open, but it is circumvented as a security feature for that period. I don’t know what OP’s intent was with his single sentence nor does it fundamentally matter to this consideration.

Security pros should be precise with language. Don’t be like politicians. It’s the difference between a lawful search with a warrant, and “they broke in and raided”.

+1 for Francis. Explains it well

Explains what?

I disagree. Not all organizations using 2FA have weak lock out routines and fallback methods. If I forget my PIN, lockout my PIN, or if I lose my 2FA smart card, they require that I show up in person to the ID card office and present my state issued photo ID, and they will then issue me another 2FA token or reset my PIN. I don’t see how you can generalize all 2fa methods including FIDO as exploitable since the fallback methods are a completely separate thing depending on the company or agency.

Nice writeup and very detailed!

Recently, attackers have been using advanced phishing attacks to bypass the latest security mitigations.

I think it would also be good to highlight the increase in adversary-in-the-middle (AiTM) phishing attacks, which can bypass multi-factor authentication (MFA) as well.

Here is some good recent research on this topic:

Bypassing Microsoft 2-factor authentication

https://www.zscaler.com/blogs/security-research/large-scale-aitm-attack-targeting-enterprise-users-microsoft-email-services

https://jeffreyappel.nl/protect-against-aitm-mfa-phishing-attacks-using-microsoft-technology/

Bypassing Gmail 2-factor authentication

https://www.zscaler.com/blogs/security-research/aitm-phishing-attack-targeting-enterprise-users-gmail

No registration should record a cell ph# in the clear to begin with — without that critical datapoint there is no attack vector.

Registrar WHOIS Server: whois.porkbun(.)com

Registrar URL: http://porkbun(.)com

Updated Date: 2022-07-20T23:19:14Z

Creation Date: 2022-07-20T22:13:04Z

Registry Expiry Date: 2023-07-20T22:13:04Z
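
The record above shows a domain that was created and last updated on the same day, with a one-year registration. A minimal sketch of how a defender might flag such newly registered domains from WHOIS text follows; the 30-day threshold and the function names are illustrative assumptions, not something taken from the article or from any registrar's API.

```python
from datetime import datetime, timezone

# The three date fields quoted in the comment above.
WHOIS_TEXT = """\
Updated Date: 2022-07-20T23:19:14Z
Creation Date: 2022-07-20T22:13:04Z
Registry Expiry Date: 2023-07-20T22:13:04Z
"""


def parse_whois_dates(text: str) -> dict:
    """Collect every 'Something Date: <ISO timestamp>' line as a UTC datetime."""
    dates = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip().endswith("Date"):
            parsed = datetime.strptime(value.strip(), "%Y-%m-%dT%H:%M:%SZ")
            dates[key.strip()] = parsed.replace(tzinfo=timezone.utc)
    return dates


def domain_age_days(text: str, as_of: datetime) -> float:
    """Age of the domain, in days, at the moment `as_of`."""
    created = parse_whois_dates(text)["Creation Date"]
    return (as_of - created).total_seconds() / 86400


def is_newly_registered(text: str, as_of: datetime, max_age_days: int = 30) -> bool:
    """Hypothetical policy: treat anything younger than max_age_days as suspect."""
    return domain_age_days(text, as_of) < max_age_days


if __name__ == "__main__":
    when = datetime(2022, 7, 25, tzinfo=timezone.utc)
    print(is_newly_registered(WHOIS_TEXT, when))
```

Domain age alone proves nothing, but a login link pointing at a domain that is only days old is a reasonable signal to treat with extra suspicion.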

It would also be a big help if telcos would stop letting people spoof numbers. But that has been a known issue for so long that it’s unlikely they ever will. Telcos must be making money off of that somehow or it would have been shut down years ago.

The FCC’s STIR/SHAKEN has no teeth.

Stop eating food off the floor, people; to be safe, prepare it yourself. Although there are better approaches, the heart of the issue here is simple. Never trust data “submitted” by others! This is true in programming when users submit data to a program. It is just as true for links and other items received in emails and texts. Can you trust the provider? From a security perspective, the answer is always no. In its most extreme form, it is even true for the DNS responses you receive, which makes things particularly ugly for the truly paranoid.

What I find disturbing is that financial institutions, corporations, and others that need to protect sensitive access continue to send out links to their sites in email and text notices that they must be expecting people to follow. This is convenient but they should really be encouraging individuals to bookmark sites and to NEVER access important sites like financial institutions and corporate sites from a login link received in text and email ever. People seem to have gotten the message not to call numbers they receive without verifying but people happily click on text and email links without even eyeballing it or thinking.

If you really want to be paranoid, in the extreme, also keep a copy of the website certificate fingerprint on hand and manually verify it before login. Yes, it changes about yearly and it is a hassle, but by confirming it through a couple of different computers and networks ahead of time and whenever it changes, you can have a pretty high degree of confidence that DNS foul play isn’t a factor. Perhaps an excessive hassle for most sites, even important everyday ones, but if the stakes of an account compromise are extremely high, this level of paranoia could save the day in rare cases.
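
The manual fingerprint check described above can be sketched in a few lines. This is only an illustration under stated assumptions: the pinned value is one you recorded yourself earlier from trusted networks, and the helper names are hypothetical. `ssl.get_server_certificate` and `ssl.PEM_cert_to_DER_cert` are standard-library calls; the first does not validate the certificate chain, which is acceptable here because the comparison is against a fingerprint you already trust.

```python
import hashlib
import hmac
import ssl


def sha256_fingerprint(der_cert: bytes) -> str:
    """Colon-separated SHA-256 fingerprint, similar to what browsers display."""
    digest = hashlib.sha256(der_cert).hexdigest().upper()
    return ":".join(digest[i:i + 2] for i in range(0, len(digest), 2))


def _normalize(fingerprint: str) -> str:
    """Strip separators and case so differently formatted copies compare equal."""
    return fingerprint.replace(":", "").replace(" ", "").lower()


def matches_pinned(der_cert: bytes, pinned: str) -> bool:
    """True only if the certificate hashes to the fingerprint recorded earlier."""
    return hmac.compare_digest(
        _normalize(sha256_fingerprint(der_cert)), _normalize(pinned)
    )


def fetch_der_cert(host: str, port: int = 443) -> bytes:
    """Fetch the server's leaf certificate (needs network access)."""
    pem = ssl.get_server_certificate((host, port))
    return ssl.PEM_cert_to_DER_cert(pem)
```

Before logging in, something like `matches_pinned(fetch_der_cert("bank.example"), saved_fingerprint)` would do the comparison; `bank.example` and `saved_fingerprint` are placeholders. A mismatch means either a routine certificate renewal or foul play, which is why the commenter suggests re-confirming from more than one network.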

1 – Do you have your full name on LinkedIn?

2 – Do you have the company you work for on LinkedIn?

3 – Do you have your position listed on LinkedIn?

If you answer yes to any of these…..then you are a target

WE have given them ALL they need to find us, target us, then go after us

WE provided that information……they just used our insatiable appetite to be known…to move in on the issue

Stop trying to be famous….or even noticed…….be safe….be anon

It sucks to be so cynical but in this environment……what choice do we have

Yep. Opt-in for exposure like that and there is no going back.

Some will call this idea totally insane and paranoid. Sure. BFD.

Like you say what choice do we have, this exactly is the choice.

Well Said!!!!!!!!!!

I’m not getting it – victim provides username and password to phishing site, then receives an SMS token from – who? How would an SMS 2FA code sent by the phishing site be valid for the victim’s real account?

Or does the phishing site use some API to generate a token that is also valid on the real account? Because that API would be bad.

The second ask is for the one-time code; both get relayed by the Telegram bot from the phishing site to the attackers. A lot of orgs use specific mobile apps to generate these, and so a one-time password that you type in from an OTP app can be phished the same way.

They *can* phish the OTP by logging in as the target with the target’s valid credentials, which will of course send the target an SMS prompt from the legit site, and meanwhile their bot is telling the target to repeat back the text they got. This is a slightly different approach I wrote about not long ago. https://krebsonsecurity.com/2021/09/the-rise-of-one-time-password-interception-bots/
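
To make the relay concrete, here is a minimal sketch of a time-based one-time password (TOTP, RFC 6238) check, assuming an app-generated code with HMAC-SHA1 and a 30-second step. The secret is the RFC's published test value and `server_accepts` is a hypothetical stand-in for the real login service. The point is that the code depends only on the shared secret and the clock, so the server cannot tell whether the employee or an attacker relaying the employee's code typed it in.

```python
import hashlib
import hmac
import struct


def totp(secret: bytes, unix_time: int, step: int = 30, digits: int = 6) -> str:
    """RFC 6238 TOTP with HMAC-SHA1 (built on RFC 4226 dynamic truncation)."""
    counter = unix_time // step
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def server_accepts(secret: bytes, submitted: str, now: int) -> bool:
    """Hypothetical server-side check: only the code and the clock matter."""
    return hmac.compare_digest(totp(secret, now), submitted)


if __name__ == "__main__":
    secret = b"12345678901234567890"  # RFC 6238 test secret, not a real key

    # The victim reads the code from their app and types it into the fake page.
    phished_code = totp(secret, unix_time=31)

    # The attacker replays it at the real site seconds later, in the same window.
    print(server_accepts(secret, phished_code, now=45))

    # Once the 30-second window has passed, the same code is rejected.
    print(server_accepts(secret, phished_code, now=95))
```

Nothing in the code binds it to the site where it was entered. That is the gap that origin-bound authenticators such as FIDO security keys close.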

Thanks for your integrity.

Ironic source is ironic.

Impersonation in the open isn’t ironic, just dumb.

Thank you for demonstrating the accusation of impersonation is still valid and ongoing.

BK can see the email supplied JamminJ. Your impersonations only discredit yourself.

I assume you are referring to the locked article on ubiquiti. Yes Brian shows his integrity by admitting his mistakes. We’re all better for good journalism like this.

Amen to that. It wasn’t even a mistake per se but a correction.

Things come to light over time in a linear fashion.

Two things are awkward in the picture that portrays the sms alert:

1- the fact that it contains a link to click on with the name of two companies in the link itself – Very strange indeed.

2- A real alert message does not contain a clickable link but just simple text.

Once again, user education is paramount: users should be taught to stay away from clickable links in text messages.
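
The first observation above, a link whose domain merely contains company names, can also be checked mechanically. The sketch below is an assumption-laden illustration: the allowlist, the brand strings, and the example URLs are all hypothetical. It treats a link as trusted only if its hostname exactly matches a known-good host, and flags hostnames that contain a brand name without being on that list.

```python
from urllib.parse import urlsplit

# Hypothetical values for illustration only.
ALLOWED_HOSTS = {"examplecorp.okta.com"}
BRAND_STRINGS = {"examplecorp", "okta"}


def hostname_of(url: str) -> str:
    """Lower-cased hostname of a URL, or an empty string if there is none."""
    return (urlsplit(url).hostname or "").lower()


def is_trusted(url: str, allowed=ALLOWED_HOSTS) -> bool:
    """Exact hostname match only; substring matches are not good enough."""
    return hostname_of(url) in allowed


def looks_like_brand_bait(url: str, brands=BRAND_STRINGS, allowed=ALLOWED_HOSTS) -> bool:
    """Flag hosts that mention a brand but are not on the allowlist."""
    host = hostname_of(url)
    return host not in allowed and any(brand in host for brand in brands)


if __name__ == "__main__":
    for link in ("https://examplecorp.okta.com/login",
                 "https://examplecorp-okta.com/login"):
        print(link, is_trusted(link), looks_like_brand_bait(link))
```

An exact-match allowlist is deliberately strict: a lookalike domain passes any "contains the company name" test by construction.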

“Twitter confirmed several employees were relieved of their employee usernames and passwords, but that its security key requirement prevented the phishers from abusing that information by initiating a new password protection system!”

This article was very informative. As we move deeper and deeper into cyberspace, there will always be a need for cybersecurity and passwords, but computers cannot compensate for human nature; they can only do so much to protect people from themselves. See a funny joke and “click on it”; see an outrageous sale and coupons from Groupon, getaway travel plans, funny cat videos, etc. No matter where you are, at home or especially in the office, the company may tell its employees that company email is for company use only and not for private use, but employees who spend half their time or more during the day in the office will ultimately end up surfing the web, putting their passwords and the company’s online security at risk of being compromised.

It amazes me that so many people are being caught by phishing scams. The thing that scares me the most is that people from large corporations are falling for these scams, and these corporations have tons of our data just stored. Anyone who succeeds in gaining access can take millions of people’s data and gain access to different accounts. Many people use the same password for different accounts, or a version of the same password, and that can be scary when a password gets leaked. Computers and the internet are a great thing, but with people able to gain access to sensitive information it can be a dangerous thing. There are many scams out there and fake websites that can take your information, so as users we all have to be careful.

And there’s no test to get a license to drive the internet.

It might not be a completely insane idea…