On any given day, nation-states and criminal hackers have access to an entire arsenal of zero-day vulnerabilities — undocumented and unpatched software flaws that can be used to silently slip past most organizations’ digital defenses, new research suggests. That sobering conclusion comes amid mounting evidence that thieves and cyberspies are ramping up spending to acquire and stockpile these digital armaments.

Security experts have long suspected that governments and cybercriminals alike are stockpiling zero-day bugs: After all, the thinking goes, if the goal is to exploit these weaknesses in future offensive online attacks, you’d better have more than a few tricks up your sleeve because it’s never clear whether or when those bugs will be independently discovered by researchers or fixed by the vendor. Those suspicions were confirmed very publicly in 2010 with the discovery of Stuxnet, a weapon apparently designed to delay Iran’s nuclear ambitions and one that relied upon at least four zero-day vulnerabilities.

Documents recently leaked by National Security Agency whistleblower Edward Snowden indicate that the NSA spent more than $25 million this year alone to acquire software vulnerabilities from vendors. But just how many software exploits does that buy, and what does that say about the number of zero-day flaws in private circulation on any given day?

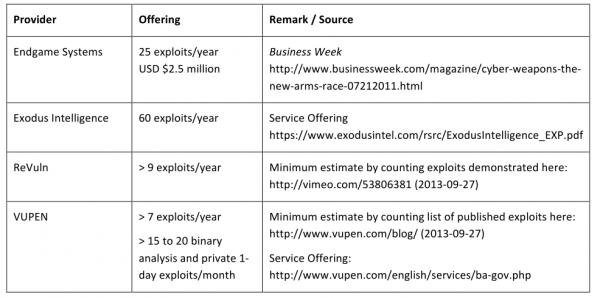

These are some of the questions posed by Stefan Frei, research director for Austin, Texas-based NSS Labs. Frei pored over reports from and about some of those private vendors — including boutique exploit providers like Endgame Systems, Exodus Intelligence, Netragard, ReVuln and VUPEN — and concluded that jointly these firms alone have the capacity to sell more than 100 zero-day exploits per year.

According to Frei, if we accept that the average zero-day exploit persists for about 312 days before it is detected (an estimate made by researchers at Symantec Research Labs), this means that these firms probably provide access to at least 85 zero-day exploits on any given day of the year. These companies all say they reserve the right to restrict which organizations, individuals and nation states may purchase their products, but they all expressly do not share information about exploits and flaws with the affected software vendors.
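The figure of roughly 85 can be reproduced with back-of-the-envelope arithmetic. The sketch below assumes exploits are sold at a steady rate through the year and that each stays undetected for the average lifetime; the function name is illustrative, and Frei's paper may use a more detailed method.

```python
def exploits_available_per_day(sold_per_year: float, avg_lifetime_days: float) -> float:
    """Steady-state estimate: daily arrival rate times average lifetime in days."""
    daily_rate = sold_per_year / 365
    return daily_rate * avg_lifetime_days


# Inputs taken from the article: 100 exploits sold per year, 312-day average lifetime.
estimate = exploits_available_per_day(sold_per_year=100, avg_lifetime_days=312)
print(round(estimate, 1))  # 85.5
```

A shorter average lifetime, or fewer sales per year, shrinks the standing stockpile proportionally.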

KNOWN UNKNOWNS

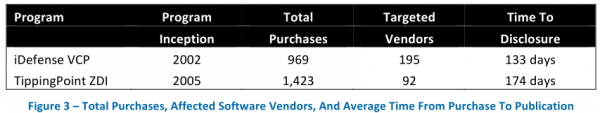

That approach stands apart from the likes of HP TippingPoint’s Zero-Day Initiative (ZDI) and Verisign’s iDefense Vulnerability Contributor Program (VCP), which pay researchers in exchange for the rights to their vulnerability research. Both ZDI and iDefense also manage the communication with the affected vendors, ship stopgap protection for the vulnerabilities to their customers, and otherwise keep mum on the flaws until the vendor ships an update to fix the bugs.

Frei also took stock of the software vulnerabilities collected by these two companies, and found that between 2010 and 2012, the ZDI and VCP programs together published 1,026 flaws, of which 425 (44 percent) affected Microsoft, Apple, Oracle, Sun and Adobe products. The average time from purchase to publication was 187 days.

“On any given day during these three years, the VCP and ZDI programs possessed 58 unpublished vulnerabilities affecting five vendors, or 152 vulnerabilities total,” Frei wrote in a research paper released today.

Frei notes that the VCP and ZDI programs use the information they purchase only for the purpose of building better protection for their customers, and since they share the information with the software vendors in order to develop and release patches, the overall risk is comparatively low. Also, the vulnerabilities collected and reported by VCP and ZDI are not technically zero-days, since one important quality of a zero-day is that it is used in-the-wild to attack targets before the responsible vendor can ship a patch to fix the problem.

In any case, Frei says his analysis clearly demonstrates that critical vulnerability information is available in significant quantities for private groups, for extended periods and at a relatively low cost.

“So everybody knows there are zero days, but when we talk to C-Level executives, very often we find that these guys don’t have a clue, because they tell us, ‘Yeah, but we’ve never been compromised’,” Frei said in an interview. “And we always ask them, ‘How do you know?’”

Frei said that in light of the present zero-day reality, he has three pieces of advice for C-Level executives:

- Assume you are compromised, and that you will get compromised again.

- Prevention is limited; invest in breach detection so that you can quickly find and act on any compromises.

- Make sure you have a process for properly responding to compromises when they do happen.

ANALYSIS

Although Frei’s study is a very rough approximation of the zero-day scene today, it is almost certainly a conservative estimate: It makes no attempt to divine the number of zero-day vulnerabilities developed by commercial security consultancies, which employ teams of high-skilled reverse engineers who can be hired to discover flaws in software products.

Nor does it examine the zero-days that are purchased and traded in the cybercriminal underground, where vulnerability brokers and exploit kit developers have been known to pay tens of thousands of dollars for zero-day exploits in widely-used software. I’ll have some of my own research to present on this latter category in the coming week. Stay tuned.

Update, Dec. 6, 1:30 p.m. ET: Check out this story on the arrest of the man thought to be behind the Blackhole Exploit Kit. He allegedly worked with a partner who had a $450,000 budget for buying browser exploits.

Original story:

But Frei’s research got me to thinking again about an idea for a more open and collaborative approach to discovering software vulnerabilities that has remained stubbornly stuck in my craw for ages. Certainly, many companies have chosen to offer “bug bounty” programs — rewards for researchers who report zero-day discoveries. To my mind, this is good and as it should be, but most of the companies offering these bounties — Google, Mozilla, and Facebook are among the more notable — operate in the cloud and are not responsible for the desktop software products most often targeted by high-profile zero-days.

After long resisting the idea of bug bounties, Microsoft also quite recently began a program to pay researchers who discover novel ways of defeating its security defenses. But instead of waiting for the rest of the industry to respond in kind and reinventing the idea of bug bounties one vendor at a time, is there a role for a more global and vendor-independent service or process for incentivizing the discovery, reporting and fixing of zero-day flaws?

Most of the ideas I’ve heard so far involve funding such a system by imposing fines on software vendors, an idea which seems cathartic and possibly justified, but probably counterproductive. I’m sincerely convinced that a truly global and remunerative bug bounty system is possible and maybe even inevitable as more of our lives, health and wealth become wrapped up in technology. But there is one sticking point that I simply cannot get past: How to avoid having the thing backdoored or otherwise subverted by one or more nation-state actors?

I welcome a discussion on this topic. Please sound off in the comments below.

Start off knowing it’ll never be better than 99%:

– http://chart.av-comparatives.org/chart1.php

One percent of a million is 10,000…

Someone you know will get whacked, no matter what.

Ah, still looking for that “perfect world”.


It is for precisely this reason that I would like to see new versions of windows come with built in application whitelisting, recursive layers of sandboxing, further improvements on memory handling, and a better way to automate updates/patching/installs that doesn’t involve so much manual involvement. I’d like to see them make windows more modular like unix, where unwanted parts can be more easily stripped out (looking at you metroUI) and better security options/defaults ‘out of the box’. Looking at windows which is still the most commonly used OS out there, you have pretty much the exact same carbon copy list of problems you had 15 years ago – account elevation/improper rights, downloads/network, certs/encryption, updates, etc.

Dream on! Microsoft has been the target of hackers for over a quarter of a century, and they seem to have consistently been behind the curve.

“teams of high-skilled reverse engineers”

What is a reverse engineer, pray tell? Is it someone who converts everything he touches into something with a poor design? Perhaps that should be “teams of highly-skilled software professionals with advanced reverse-engineering skills.”

“Most of the ideas I’ve heard so far involve funding such a system by imposing fines on software vendors, an idea which seems cathartic and possibly justified, but probably counterproductive.”

It could be a good solution, but it would need to be crafted appropriately.

Contracts often include language requiring parties to mitigate any damage. If fines are severe enough — they must be a significant percentage of income to inflict pain — companies will self-mitigate. They will not release products before their system test group has exhaustively run new software through the mill. This will also prevent management and/or marketing from releasing new products before they are ready. Since companies know they will be on the hook for defects, they will also employ a bounty scheme offering serious money. Sure, some defects will get through, but a combination of better system test and bounties will eliminate most of them. The fines will increase the price of products, but since all companies will be employing their due diligence, the playing field will be leveled.

Imposing fines on businesses who sell software products with bugs is a dumb-ass Marxist idea that will end up costing customers more money and it won’t prevent software from being exploited. People are imperfect and thus, we produce imperfect things. The free market will take care of this in one way or another. The last thing we need is greedy and power-hungry government bureaucrats screwing up the software industry while filling the pockets of trial attorneys and the collecting government agencies. That’s another recipe for lost liberty!

HSPCD, I agree completely.

Fines will only make products and services more costly, and they will never solve problems. Firing the complete staff, not only the responsible ones but the whole staff, will get you an environment in which staff members become critical towards each other and risk-averse.

Could leave us with very conservative organizations though.

Why aren’t there any universities addressing such problems…

Because in essence it’s just the human behaviour which is our problem.

Nowadays you can’t trust anyone with your data, the only thing one can do is organizing your life to avoid serious problems…

Education to avoid risk is THE long term solution…

“The free market will take care of this in one way or another.”

That is what is happening today. It’s not working very well, is it?

HSPCD, you sound like a loon. I actually mean to say, seriously, that you’re writing from such an off-the-rails partisan slant it actually comes off as nearly unhinged. Are you aware you’re full-on invoking the language of “true believer” types here? You are. You kind of literally come off here like you were raised in a cult or something. What gives? Are you conscious of the tics and fanaticism in your writing, or is this stuff totally opaque to you? Do people react to this stuff in you day to day? You and Clayton make quite the pair.

HJD,

I’m not sure what you’re getting at but I am a believer. I believe in the founding principles of our nation, I believe that the free market economy is not perfect but that it is the best, and I believe in God Almighty and in salvation through Christ alone. The great thing about truth is that it doesn’t require you to believe it to be so. You’re a dick. That’s the truth and you know it. We need to stop regulating the good guys so much and start regulating the bad guys. History shows us clearly that the Statists want power and wealth but the government only takes and never produces wealth. You and the talking mule are advocating granting more power and fine-imposing abilities to the government, which always ends up reducing personal liberty. And for what, or to what ends? Will software be more secure? Will we not still be at risk? Your way is folly. That’s the truth. Again, we need more innovation, not more regulation.

The government’s defense budget is in the billions, they can easily afford to create their own teams to chase down and if necessary even embed vulnerabilities where they want to. Whitelisting is about the only logical defense and even then problematic in some ways.
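To make the whitelisting idea concrete, here is a minimal sketch of hash-based application allowlisting: a program may run only if the SHA-256 digest of its contents is on an approved list. The function names and sample byte strings are hypothetical, and real products layer signing, path rules and update handling on top of this, which is where the "problematic in some ways" part comes in.

```python
import hashlib


def sha256_of(data: bytes) -> str:
    """Return the hex SHA-256 digest of a program's contents."""
    return hashlib.sha256(data).hexdigest()


def is_allowed(program_bytes: bytes, allowlist: set) -> bool:
    """Permit execution only when the program's digest is on the allowlist."""
    return sha256_of(program_bytes) in allowlist


# Hypothetical stand-ins for the bytes of real executables.
approved = b"trusted program contents"
allowlist = {sha256_of(approved)}

print(is_allowed(approved, allowlist))                      # True
print(is_allowed(b"tampered program contents", allowlist))  # False
```

Any change to an approved binary, including a legitimate patch, changes its digest, so the list has to be refreshed on every update.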

“How to avoid having the thing backdoored or otherwise subverted by one or more nation-state actors?”

The question should be on how to reduce the risk… It may be lowered to a 0.10% or even less, but will be impossible to avoid/eliminate it completely.

What I don’t understand is, why are there so many ways to have vulnerabilities in a software product?

Aside from developer irresponsibility, like those D-Link losers, and flaws in standards, all software is written with development tools… which IMHO are responsible for the most attack vectors…

Why is nobody taking any action to improve the libraries of those tools?

Somebody?

Think about the software you’re using at the moment. There is something rendering HTML, something rendering CSS and something processing JavaScript as well as other things processing any other, more exotic, plugins / modules / whatever your browser may identify. They’re all running on top of your browser which is running on some sort of presentation layer which is running on some sort of window manager which is running on some set of “operating system” tools which is running on top of an operating system which is running on a kernel which is interacting with a number of firmware modules controlled by a couple of abstraction levels sitting on top of a processor following its own instruction set. Every entity: was written by a different group of people; has some sort of historical dependency/ies behind it; was built using tools suitable to it (the tools all sit at the top of the stack proposed here and have exactly the same dependencies); interacts with a bunch of libraries written by yet more groups; interacts with a bunch of hardware created by a different set of people to those who wrote the interface (which are, of course, a different bunch of people to those writing whatever layer of software we’re talking about now). These people are spread over 50 years, 200 countries and millions of organisations. Where are you going to start your improvements?

Yes,

Most tools have had ‘actions’. Do some web searching… for IBM ProPolice and the like.

Microsoft has even improved its tools. One glimmering (not quite shining… yet) example is the oddly named EMET (currently version 4.0). It is very strange that it is not installed by default… but there is a very good reason. Any large corporation that wrote code using older, broken tools will very likely have their applications fail if EMET is implemented.

I hate to go down this path but… the security community sort of has its own whacked version of a Steve Jobs… Theo de Raadt, who runs the OpenBSD project. A long, long time ago they did what you want done: they did a code audit of their kernel and they modified tools and libraries based on their view of security and secure coding. Their operating system is often viewed as trailing edge (from a functionality/new feature perspective) since they have created custom, more secure versions of all key packages such as GCC, Apache, etc.

Also take note of the recent changes to string handling in the C programming language spec (was that C11?). These changes essentially mirror one of the changes that Theo drove through OpenBSD… years and years ago.

The other issue, and perhaps next biggest is the granularity of privs within an operating system. Windows only really has about 2.5 levels…some may argue for 4. If you look under the hood of some of the other operating systems you will see that a number of the networking services have their own user id (eg Linux, BSDs including OS X). In the Windows world what we want to see is way, WAY less of the SVCHOST.EXE processes and more specifically named network services …each with their own user id and priv level. This topic leads to a discussion on sandboxing, micro/process/function specific virtual environments (is that what Docker is in Red Hat 6.5 ?).

I know a large medical corporation with tens of thousands of patients that runs most of their apps in the browser… IE8, on XP and Windows 7. Their key payroll/timekeeping/scheduling app requires Java 6 update 29.

Oh, and everyone is local Administrator on every computer.

This kind of negligent stupidity will endure, and will overpower anything we accomplish.

“large medical corporation with tens of thousands of patients … everyone is local Administrator on every computer”

I’m just an old mule, but I think the solution for your scenario lies with sharpening HIPAA’s teeth. According to HHS’ website, fines are “$100 to $50,000 or more per violation” with fines being assessed for “willful neglect.” The cap of $1,500,000 per calendar year is the problem for large companies and needs to be proportional to company revenue, in other words, much larger.

Have you considered sending an anonymous letter to HHS?

I know a very highly rated local hospital system which still, to this day, uses Windows 98 as their corporate desktop platform. They mitigate the vulnerabilities to a certain extent by using isolated networks, completely disconnected from the internet, along with using a hardened Terminal Server/Citrix for access to HIPAA-relevant information.

Anyone with a tablet or otherwise newer device has the capability of wirelessly connecting to internal staff networks, but those networks are firewalled off from both corporate desktop networks and the internet… and have minimal access to much beyond an internal website that contains limited information.

The world contains a lot of companies with extremely unusual priorities, where the executive class has their every whim met while everyone else, even their supposed customers, get to suffer.

Another round of Microsoft patches this Tuesday (12/10/2013). I wonder if Bulletins 3, 4, 7 and 8 are patches for zero-day attacks?

You asked how to incentivize the discovery and fixing of zero-day flaws. One possible way is to make vendors legally liable.

Imagine that a car crashes because of an exploit. Imagine a judge finds that the vendor’s duty of care includes the discovery and fixing of bugs. The same principle then extends to medical equipment. Then to baby monitors. Then to home wifi used for life-critical purposes. Even before courts extend this principle to all software, many vendors would be worried enough to stir into action.

Groups like my Committee to Protect Vietnamese Workers are constantly attacked by government hackers. We can justifiably claim that a buggy .doc, .pdf, etc., contributes to the jailing and torture of our workers’ rights advocates.

That’s another stupid idea ‘dvt’. We need creative and market-driven solutions, not more government control. Governments suck at almost everything. Look how Obama and his Marxist / Progressive regime has screwed up the best healthcare system ever known to mankind, and the unstable and insecure healthcare.gov web app isn’t even the worst part of that giant mess. I hope you and that freaking moron ‘talking mule’ aren’t in charge of anything important.

I bet you’re a hoot at parties.

Taking a break from Fox News? Let’s take a look at a little of the “wonderful” track record of private enterprise: the Therac 25, the Ford Pinto, Love Canal, LuluLemon, some of the many tainted food and drug scandals, the collapse of AIG, Bear Stearns, etc etc, etc. I’ve worked in both private industry and government and they both have levels of incompetence. And why is the US the only major industrial country not on the metric system? Even the English abandoned English measurements 🙂

As for the best medical system in the world, I think we’re around 26th (just behind Slovenia) by most measures (life expectancy, infant mortality, uninsured). Of course we do lead the world in Type 2 diabetes, obesity, and medical cost (by a factor of 2).

But I guess from the way you write, you have your own road system, police & fire depts, water well and sewage treatment, library, defense force and school system.

Back to the couch and Fox!

You forgot the best example of them all: thalidomide, the drug which causes horrendous birth defects, e.g. flipper arms and legs. The world had 10,000-20,000 cases, but the USA only had a handful because of one person: Frances Oldham Kelsey M.D. of the FDA. She refused to allow it into the USA, butting heads with Richardson-Merrell in the process.

Yet teabaggers claim that government agencies like the FDA are Marxist / socialist and should be eliminated, allowing the “free market” to decide which drugs are safe for human consumption.

Arguing with a teabagger is like arguing with a two-year-old.

You can’t let ignorance go unchallenged sometimes. Otherwise it can become truth.

Wow Clayton, where did you get the idea that we have the best health care in the world?

You must be very healthy and have never used your health insurance or spoken to anyone who has health insurance in America. Good for you. Stay in that world, it’s safer for you.

I do agree that government does not do a good job at many things, but simply spewing a bunch of nonsense does not offer any room for a rational discussion of software vulnerability remediation.

I am not sure we can trust any government to not do bad things with zero-day exploits. By their very nature they are tasked with spying on each other, us, and anyone they feel is a threat.

Nothing new here, only the tools have changed. Perhaps the best way to manage it would be to make it illegal to profit from the sale of exploits for any purpose other than helping fix the bug.

Of course our own government would quickly allow itself the freedom to continue to use the bugs for spying but at least the criminals could be pursued and their assets taken.

“where did you get the idea that we have the best health care in the world?”

Teabaggers always use the British NHS system as their reference because of Fox News propaganda. NHS does have some flaws, so comparing the USA’s healthcare with it reinforces their preexisting notions. But there are many countries — for example, Switzerland, Germany, France, Finland, and Japan — which have healthcare just as good as the USA, but at a much lower cost with higher life expectancy.

Seriously people (I’m not only calling out Francis, the talking mule specifically, but also people in general), stop referring to people as “teabaggers”. Do you not have any idea how rude and insulting that is?! How is anyone supposed to take anything that you say seriously when you use such childish name calling?

Brian – please consider deleting posts with such flagrant namecalling.

C’mon now, who started the name calling? Obama a Marxist? Really? Add the typical rant against government, attacking dvt’s idea with no facts, just “stupid”, and you have your stereotypical right wing diatribe that shows up on every board unrelated to politics. The only things missing were the references to Muslim, Kenya and a birth certificate. Had Brian had the time, Dillard’s response should have been deleted immediately as irrelevant.

They picked the name themselves, not our fault they are too ignorant to know what it means, along with the basics of math, economics, geography, science, and myriad other areas of normal life.

” a role for a more global and vendor-independent service or process for incentivizing the discovery, reporting and fixing of zero-day flaws?”

This is basically what Bugcrowd does… Incentivizing reporting of unknown flaws in both unknown (i.e. bespoke) and known code on behalf of our clients.

At the moment the impact of most breaches is mostly financial or reputational. While this is bad, it’s not life or death. I worry about the day in the future when someone truly malevolent (or just a kid messing around) works out that they can change the config on dialysis machines over the network or stand up an RFID system in a public place which talks to the remote monitoring interface on passing pacemakers, or anything along these lines.

The bottom line is that developers (or their organizations) are primarily driven by what is *in* the specification and things such as considering vulnerabilities and external risks are way down the list if they are in the spec at all.

Human nature (writing buggy code) is human nature and it’s going to be very, very hard to change that. With a lot of practice you can reduce the number of bugs, but it’s never going to zero if we rely on humans. All you need is for the 1 bad line of code in 100 million lines to be the really important one and you are in a world of pain.

I think the future lies in intelligent systems to weed out the 1 line in 100 million. Sadly the intelligence isn’t here yet. As someone said above – hello Universities, here’s your chance.

As I read it, every breach follows one of two paths. Either an end user is tricked into downloading and installing a malicious application, or an existing application is tricked into treating *data* as *code*. It is our love of the bells and whistles (e.g. ability to embed code) in our applications which leaves us vulnerable. If the ones and zeroes that arrive over the internet were automatically treated only as data we would be free of the second method of infection, but we would have a much duller www experience.
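SQL injection is one familiar instance of the data-treated-as-code pattern described above, and it makes for a compact illustration. The sketch below uses Python's built-in `sqlite3` module; the table, the function names and the payload are hypothetical, and the example is an analogy rather than a model of the browser or document exploits discussed in the article.

```python
import sqlite3


def find_user_unsafe(conn, name):
    # Vulnerable: the input is pasted into the SQL text, so it can rewrite the query.
    query = f"SELECT name FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn, name):
    # Parameterized: the input is bound as a value and is never parsed as SQL.
    return conn.execute("SELECT name FROM users WHERE name = ?", (name,)).fetchall()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT)")
conn.executemany("INSERT INTO users VALUES (?)", [("alice",), ("bob",)])

payload = "nobody' OR '1'='1"
print(len(find_user_unsafe(conn, payload)))  # 2: the payload became part of the query
print(len(find_user_safe(conn, payload)))    # 0: the payload stayed plain data
```

The safe version keeps a hard boundary between the query and its inputs, which is the property the commenter wishes all internet-facing software had.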

So the question is: how many cyber-criminals have been exploiting this Microsoft zero day since November 5, 2013?

[From below article]

“The zero day has sat unpatched since it was made public Nov. 5; Microsoft did release a FixIt tool as a temporary mitigation. The patch is one of 11 bulletins Microsoft said today it will release as part of its December 2013 Patch Tuesday security updates; five of the bulletins will be rated critical.”

Microsoft Zero day update for December 2013 Patch Tuesday

hxxx://threatpost.com/microsoft-to-patch-tiff-zero-day-wait-til-next-year-for-xp-zero-day-fix/103117

So much noise about a local vulnerability. Not exactly the same as a remotely exploitable browser or browser plugin flaw.

Brian,

It’s Phase II of an exploit.

So whoever manages a Windoze system, or even perhaps an intelligent end user, is operating with reduced privs. Malware attacks the system remotely, essentially getting ‘local access’… but only has access to the system with the privs of the current user. NOW the local priv. escalation can be triggered, getting us administrator privs, and THEN we upload the rest of our malware shotgun package across the system…

According to a new A/V Test report “Adobe’s Reader and Flash and all versions of Java are together responsible for a total of 66 percent of the vulnerabilities in Windows systems exploited by malware.” If you say four percent is for PDF and JPEG file exploits, then that still leaves you a 30 percent margin against all versions of Windows and Internet Explorer.

I would break that thirty percent down into new or known exploits in the wild, undiscovered zero-day exploits, and unpatched vulnerabilities against all current versions of Microsoft Windows along with Internet Explorer 8 through 11.

To me thirty percent is still a huge amount to risk when a good majority of internet users don’t practice defensive security measures.

Heh, it’s like the lottery. Of the KNOWN vulnerabilities… it only takes one to hit the jackpot.

One vulnerability, on one device.

Think of it this way. The only way anyone finds out about something bad is by research. Then the A/V companies jump on the bandwagon, figure out a signature and follow the bouncing malware.

I am sure there is a lot of evil and “mutually agreed upon” null findings when it comes to antivirus signatures.

Software can be broken by other software. So what you are thinking about by today’s standards has already been thought about and done by the programmers and planners of the company that produces it. Do they work together on everything? Nope. I sense a HUGE change of direction. I see Java getting the boot and alternate software picked up. That’s half the holes filled… for the moment… and I mean… moment… LOL.

Very Long story.

Pls, follow my reasoning:

Q: Don’t the miscreants _also_ use PCs and connect to the Internet?

If so, how do they protect their OWN machines and Internet connections from attacks?

Any Idea, Brian and all?

If I were a miscreant, I would have multiple systems. My software development system would never — NEVER — be used for email or surfing. I would have a second system for Internet use, probably a Linux one where if it got infected, I would wipe and reinstall. Or I could follow Brian’s advice and use a Live CD. I’d also use a wired router, a nice Cisco one.

All good points, Francis.

Yes! Linux in their PCs.

But the miscreants use internet-connected servers as “Command & Control” centers to use / manage the bots.

How do they protect their OWN (vulnerable) servers from outside cyber-attacks?

Hmm… maybe LINUX servers are 100% not vulnerable?

They usually would make them with some idea what they want to do, with a good idea how it would propagate, and also with full knowledge of where it would install. I’d imagine they test them out extensively with various OSes before they finally release; many of them are fed by application packages or delivery systems that do the actual infecting separately… They could also use an active and passive mode that would show up on their command servers well before it actively tried to do anything.

You’re right, if all the miscreants are not using some version of Linux then each and every one of them would be vulnerable to any exploit that was out there. They would be in the same boat as everyone else.

All the talk about the N.S.A. breaking into computers with malware proves that anything is possible and everyone is susceptible to any type of attack, including browser plugin exploitation.

They’re not going to dump a fully functional virus on their home network, they are probably working on a dedicated coding machine with virtual machines for testing and adding pieces at a time without making the code live until the last minute… until they add any payload to it the code would basically just spread to the virtual machines and do nothing major until they reboot/wipe.

And there was something a while ago about frankenviruses where one piece of malware can infect another with some weird results…

http://www.theverge.com/2012/1/28/2753951/computer-viruses-infect-worms-frankenmalware

If I am evil, I am going to use items that aren’t standard. One thing I am going to have is one system that is internet facing. I am going to use a DVD to boot that system daily, with no hard drive connected while online. Then all you have to do is hit the power button and the system is carted off, basically worthless.

The evil one would use a variety of Dynamic DNS routes, and change them on the fly. One has to realize that the easiest way for the Feds to find you is to have a path back to you. Break the trail, and thus, break the chain of evidence.

If the evil one uses a free wifi connection, getting into a routine will get you into trouble. If they have a variety of wifi’s to use, using them randomly and sparingly will keep them alive longer.

Look, if they (whoever they are) get to your house, hideout or otherwise, it’s way too late. Not letting them get that far is a science.

That’s where good ole Operating System software comes into the limelight.

Or you can run portable apps like Firefox, Thunderbird, or the Tor browser on an encrypted hard drive like an Aegis Padlock hard drive, where you have to enter a password on a keyboard to access it.

Then the miscreants and government agencies can’t access anything related to the browser function and or get access to your important emails. :–)

This is a great opportunity for innovation in the security field. I am very excited about new or improved products that will be developed to (a) prevent attacks from being so widely successful (b) detect the signs of compromise (c) connect users with the knowledge needed to clean up compromised devices. SRI has done some very interesting work in the attack prevention space with their Blade product, as one example. One of the chief objectives for those of us in the fight must be to innovate in such a way that it drives up the costs of developing exploits for the bad guys to the point where it becomes very expensive for them. That will reduce the number of people/groups who have the financial resources to create this kind of weaponry. Think about the cost of having to re-tool every 24 hours instead of every six months.

All of you are way off. (Particularly you Faux Newsers, lol)

ANY COMPLEX SYSTEM, WILL HAVE FLAWS.

That is called ‘Bill’s Law’.

Ever heard of Minix? Did you know that Linux was patterned after Minix? Except Linus chose to eschew the microkernel architecture, for a monolithic kernel. Any idea how bad a decision that was? Needless to say, Winduhs and OSX are monolithic kernels.

I remember a “few” years back when Microsoft got beat up by a hacker for not “fuzzing” their software. Then Microsoft did their part and they still got beat up for leaving at least half of issues still within the software.

If, and I say IF, that was a way to find a back door into any “windows” machine, I am sure it worked. Like I said in the past, it’s called “windows” for a reason: as long as you have them, your “kingdom” isn’t as safe as you think it is, for a variety of reasons.

Hummm I wonder if Bill G and any other founder was ever approached by the NSA a long time ago…..

Wonder what kind of hush-hush agreements are actually intertwined between governments and software suppliers ?

Well, what is it worth to the NSA to have about 90% of the world’s machines wide open to them? Probably a few bucks.. or a few lives…

http://insecure.org/search.html?ie=ISO-8859-1&q=vupen+security&sa=SecSearch

You will see that VUPEN Security often puts the dates they discovered the vulnerability in the advisories, and these dates are often a year or more before VUPEN makes it public after the vendor found it.

I just go with Secunia PSI, and if it discovers a vulnerable app with no patch available, I uninstall the application until a patch comes out. This has become fairly rare, now that the industry is more conscious about updating.