Novel malware appears with enough regularity to keep security researchers and reporters on their toes. But often enough, seemingly new perils are really just old threats that have been repackaged, or stubbornly lingering reports that are suddenly discovered by a broader audience. One of the biggest challenges facing the information security community is deciding which threats are worth investigating and addressing. To illustrate this dilemma, I’ve analyzed several security news headlines that readers forwarded to me this week, and added a bit more information from my own investigations.

I received more than two dozen emails and tweets from readers calling my attention to news that the source code for the 2.0.8.9 version of the ZeuS crimekit has been leaked online for anyone to download. At one point last year, a new copy of the ZeuS Trojan with all the bells and whistles was fetching at least $10,000. In February, I reported that the source code for the same version was being sold on underground forums. Reasonably enough, news of the source leak was alarming to some because it suggests that even the most indigent hackers can now afford to build their own botnets.

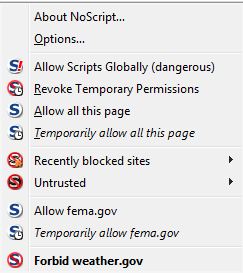

A hacker offering to host and install a control server for a ZeuS botnet.

We may see an explosion of sites pushing ZeuS as a consequence of this leak, but it hasn’t happened yet. Roman Hüssy, curator of ZeusTracker, said in an online chat, “I didn’t see any significant increase of new ZeuS command and control networks, and I don’t think this will change things.” I tend to agree. It was already ridiculously easy to start your own ZeuS botnet before the source code was leaked. There are a number of established and relatively inexpensive services in the criminal underground that will sell individual ZeuS binaries to help novice hackers set up and establish ZeuS botnets (some will even sell you the bulletproof hosting and related amenities as part of a package), for a fraction of the price of the full ZeuS kit.

My sense is that the only potential danger from the release of the ZeuS source code is that more advanced coders could use it to improve their current malware offerings. At the very least, it should encourage malware developers to write clearer and more concise user guides. Also, there may be key information about the ZeuS author hidden in the code for people who know enough about programming to extract meaning and patterns from it.

Are RATs Running Rampant?

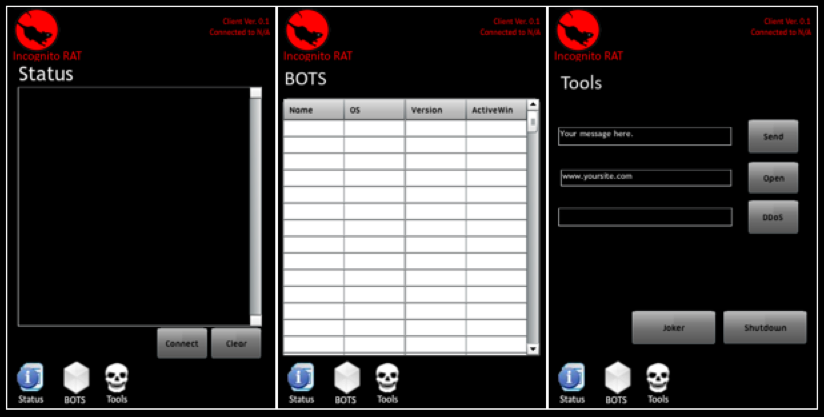

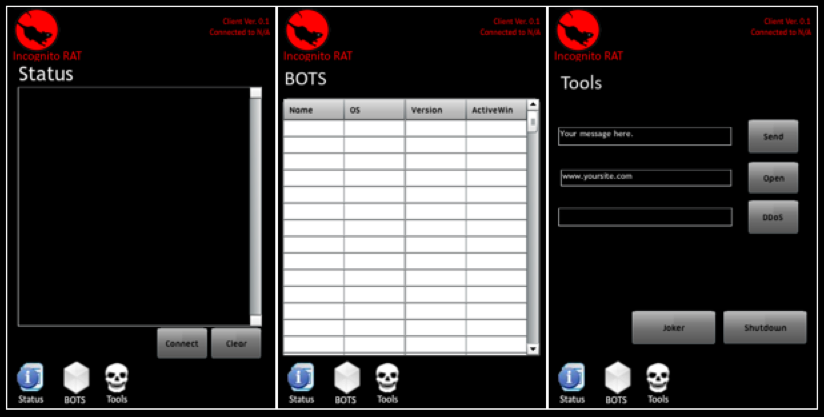

Last week, the McAfee blog included an interesting post about a cross-platform “remote administration tool” (RAT) called IncognitoRAT that is based on Java and can run on Linux, Mac and Windows systems. The blog post featured some good details on the functionality of this commercial crimeware tool, but I wanted to learn more about how well it worked, what it looks like, and some background on the author.

Those additional details, and much more, were surprisingly easy to find. For starters, this RAT has been around in one form or another since last year. The screenshot below shows an earlier version of IncognitoRAT being used to remotely control a Mac system.

IncognitoRAT used to control a Mac from a Windows machine.

The kit also includes an app that allows customers to control botted systems via jailbroken iPhones.

Incognito ships with an app that lets customers control infected computers from an iPhone.

The following video shows this malware in action on a Windows system. This video was re-recorded from IncognitoRAT’s YouTube channel (consequently it’s a little blurry), but if you view it full-screen and watch carefully you’ll see a sequence that shows how the RAT can be used to send e-mail alerts to the attacker. The person making this video is using Gmail: we can see a list of his Gchat contacts on the left and his IP address at the bottom of the screen. That IP traces back to a Sympatico broadband customer in Toronto, Canada, which matches the hometown displayed in the YouTube profile where this video was hosted. A Gmail user named “Carlo Saquilayan” is included in the Gchat contacts visible in the video.


Since the beginning of May, security firms have been warning Apple users to be aware of new scareware threats like MacDefender and Mac Security. The attacks began on May 2, spreading through poisoned Google Image Search results. Initially, these attacks required users to provide their passwords to install the rogue programs, but recent variants do not, according to Mac security vendor Intego.
