Microsoft on Tuesday released updates to fix roughly four dozen security issues with its Windows operating systems and related software. All things considered, this first Patch Tuesday of 2019 is fairly mild, bereft as it is of any new Adobe Flash updates or zero-day exploits. But there are a few spicy bits to keep in mind. Read on for the gory details.

Dirt-Cheap, Legit, Windows Software: Pick Two

Buying heavily discounted, popular software from second-hand sources online has always been something of an iffy security proposition. But purchasing steeply discounted licenses for cloud-based subscription products like recent versions of Microsoft Office can be an extremely risky transaction, mainly because you may not have full control over who has access to your data.

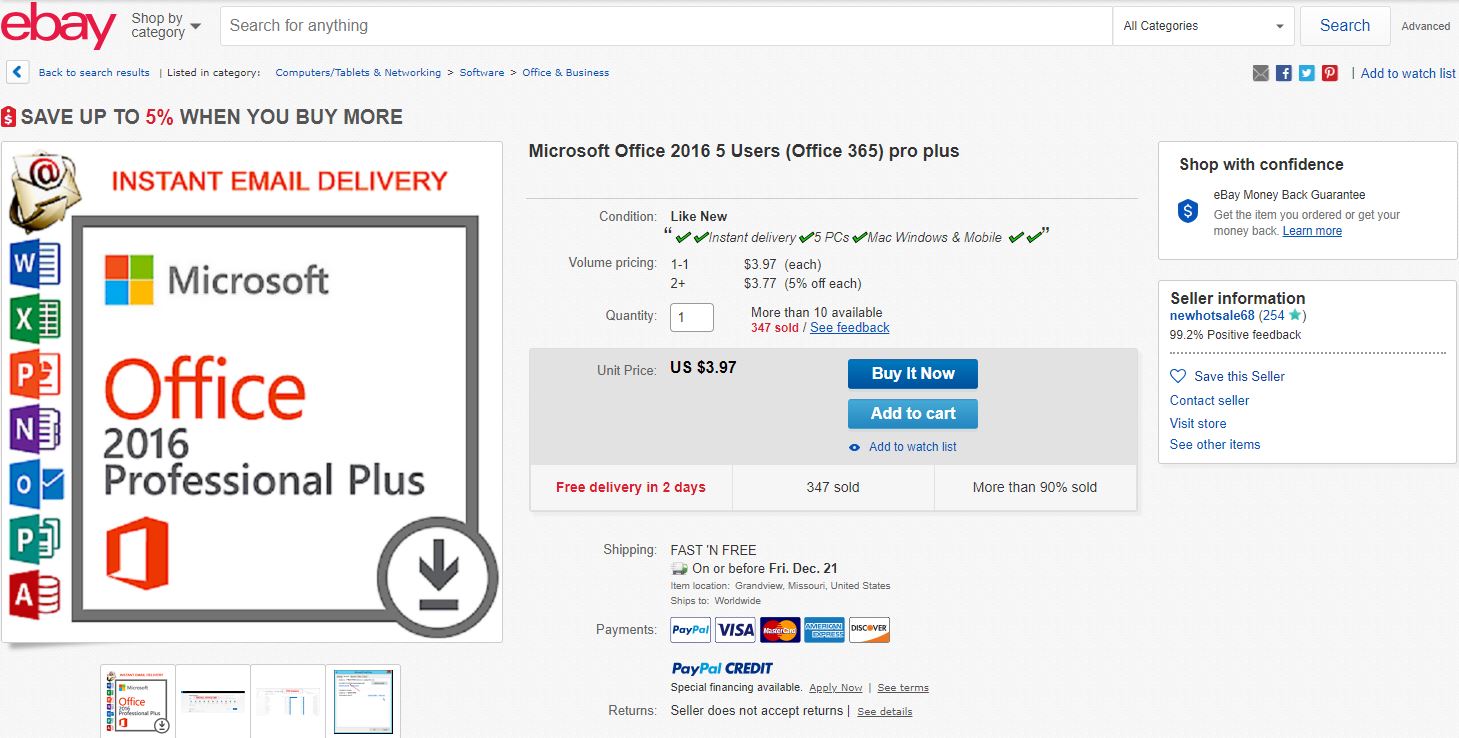

Last week, KrebsOnSecurity heard from a reader who’d just purchased a copy of Microsoft Office 2016 Professional Plus from a seller on eBay for less than $4. Let’s call this Red Flag #1, as a legitimately purchased license of Microsoft Office 2016 is still going to cost between $70 and $100. Nevertheless, almost 350 other people had made the same purchase from this seller over the past year, according to eBay, and there appear to be many auctioneers just like this one.

After purchasing the item, the buyer said he received the following explanatory (exclamatory?) email from the seller — “Newhotsale68” from Vietnam:

Hello my friend!

Thank you for your purchase:)Very important! Office365 is a subscription product and does not require any KEY activation. Account + password = free lifetime use

1. Log in with the original password and the official website will ask you to change your password!

2. Be sure to remember the modified new password. Once you forget your password, you will lose Office365!

3. After you change your password, log on to the official website to start downloading and installing Office365!

Your account information:

* USERMANE : (sent username)

Password Initial: (sent password)

Microsoft Office 365 access link:Http://portal.office.com/

Sounds legit, right?

This merchant appears to be reselling access to existing Microsoft Office accounts, because in order to use this purchase the buyer must log in to Microsoft’s site using someone else’s username and password! Let’s call this Red Flag #2.

More importantly, the buyer can’t change the email address associated with the license, which means whoever owns that address can likely still assume control over any licenses tied to it. We’ll call this Ginormous Red Flag #3.

Apple Phone Phishing Scams Getting Better

A new phone-based phishing scam that spoofs Apple Inc. is likely to fool quite a few people. It starts with an automated call that displays Apple’s logo, address and real phone number, warning about a data breach at the company. The scary part is that if the recipient is an iPhone user who then requests a call back from Apple’s legitimate customer support Web page, the fake call gets indexed in the iPhone’s “recent calls” list as a previous call from the legitimate Apple Support line.

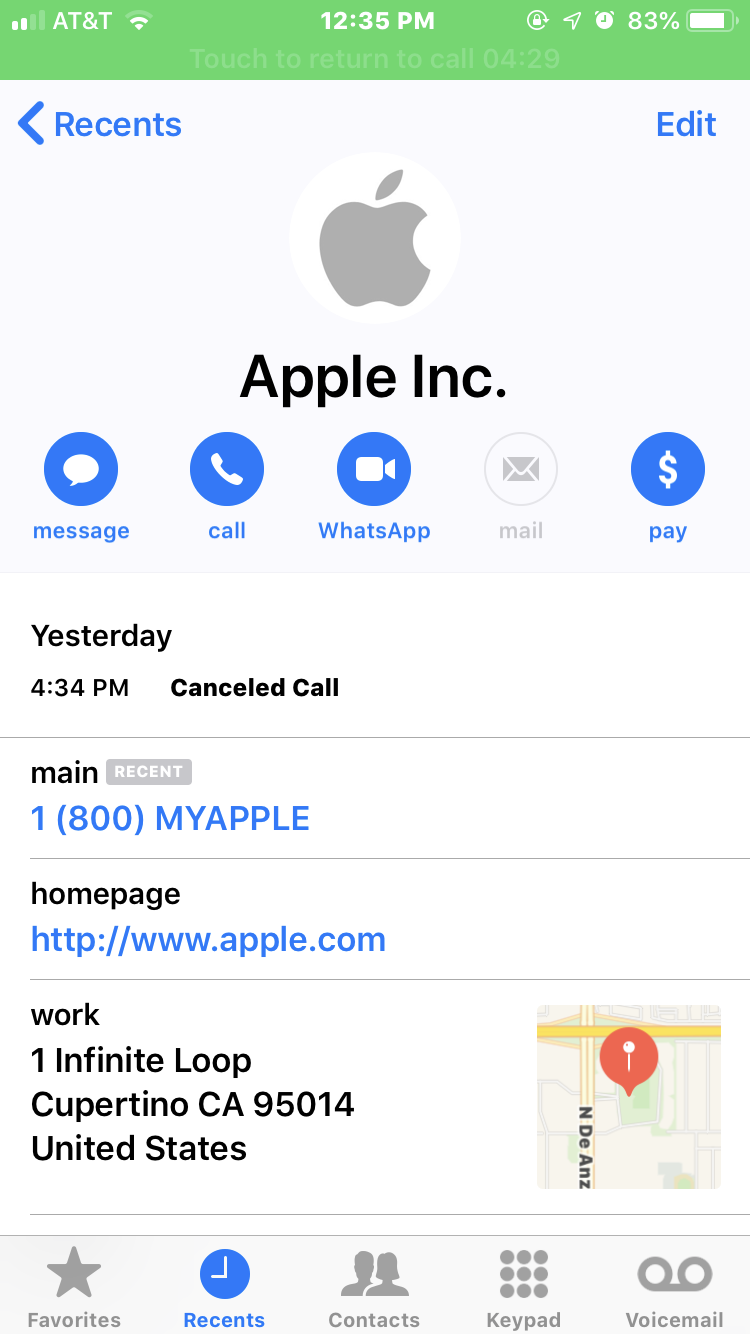

Jody Westby is the CEO of Global Cyber Risk LLC, a security consulting firm based in Washington, D.C. Westby said earlier today she received an automated call on her iPhone warning that multiple servers containing Apple user IDs had been compromised (the same scammers had called her at 4:34 p.m. the day before, but she didn’t answer that call). The message said she needed to call a 1-866 number before doing anything else with her phone.

Here’s what her iPhone displayed about the identity of the caller when they first tried her number at 4:34 p.m. on Jan. 2, 2019:

What Westby’s iPhone displayed as the scam caller’s identity. Note that it lists the correct Apple phone number, street address and Web address (minus the https://).

Note in the above screen shot that it lists Apple’s actual street address, their real customer support number, and the real Apple.com domain (albeit without the “s” at the end of “http://”). The same caller ID information showed up when she answered the scammers’ call this morning.

Westby said she immediately went to the Apple.com support page (https://www.support.apple.com) and requested to have a customer support person call her back. The page displayed a “case ID” to track her inquiry, and just a few minutes later someone from the real Apple Inc. called her and referenced that case ID number at the start of the call.

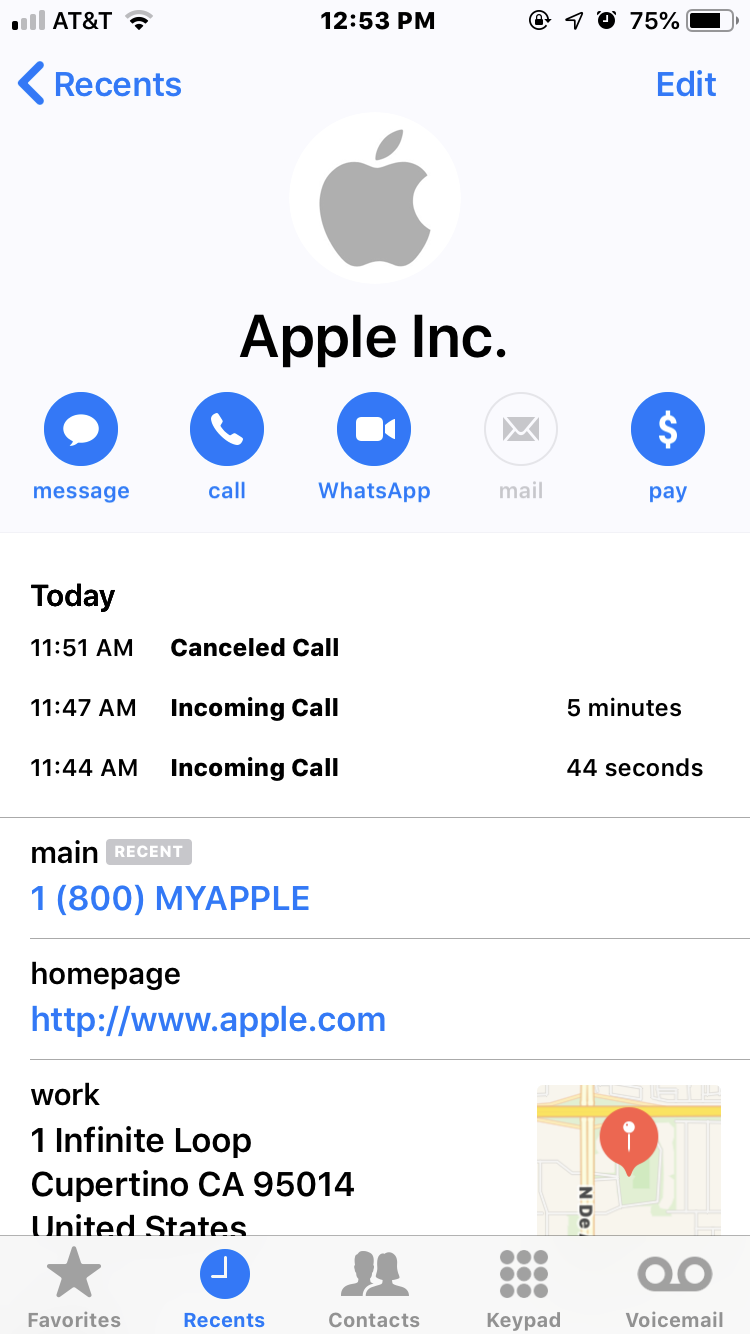

Westby said the Apple agent told her that Apple had not contacted her, that the call was almost certainly a scam, and that Apple would never do that — all of which she already knew. But when Westby looked at her iPhone’s recent calls list, she saw the legitimate call from Apple had been lumped together with the scam call that spoofed Apple:

The fake call spoofing Apple — at 11:44 a.m. — was lumped in the same recent calls list as the legitimate call from Apple. The call at 11:47 was the legitimate call from Apple. The call listed at 11:51 a.m. was the result of Westby accidentally returning the call from the scammers, which she immediately disconnected.
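One way to picture why the spoofed and legitimate calls end up in the same entry is a call log that groups records purely by the caller ID number presented, with no means of verifying who actually placed each call. The sketch below is a simplified model under that assumption, not a description of how iOS is implemented; the `CallRecord` type, the `group_by_presented_number` helper and the placeholder phone number are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass

# Placeholder standing in for Apple's real support number (hypothetical value).
SUPPORT_NUMBER = "+1-800-555-0100"


@dataclass(frozen=True)
class CallRecord:
    time: str              # time shown in the recent calls list
    presented_number: str  # caller ID as displayed; a spoofer can set this freely
    direction: str         # "incoming" or "outgoing"
    placed_by: str         # ground truth, which the phone itself cannot see


def group_by_presented_number(calls):
    """Group call records by displayed number only (assumed log behavior)."""
    groups = defaultdict(list)
    for call in calls:
        groups[call.presented_number].append(call)
    return dict(groups)


# The three entries described in the caption above.
calls = [
    CallRecord("11:44", SUPPORT_NUMBER, "incoming", "scammer"),
    CallRecord("11:47", SUPPORT_NUMBER, "incoming", "Apple"),
    CallRecord("11:51", SUPPORT_NUMBER, "outgoing", "Westby"),
]

groups = group_by_presented_number(calls)
for number, records in groups.items():
    print(number, [(r.time, r.direction) for r in records])
```

Because the grouping key is only the presented number, all three records land in a single entry, and nothing in that entry distinguishes the spoofed call from the genuine one.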

“I told the Apple representative that they ought to be telling people about this, and he said that was a good point,” Westby said. “This was so convincing I’d think a lot of other people will be falling for it.”

Cloud Hosting Provider DataResolution.net Battling Christmas Eve Ransomware Attack

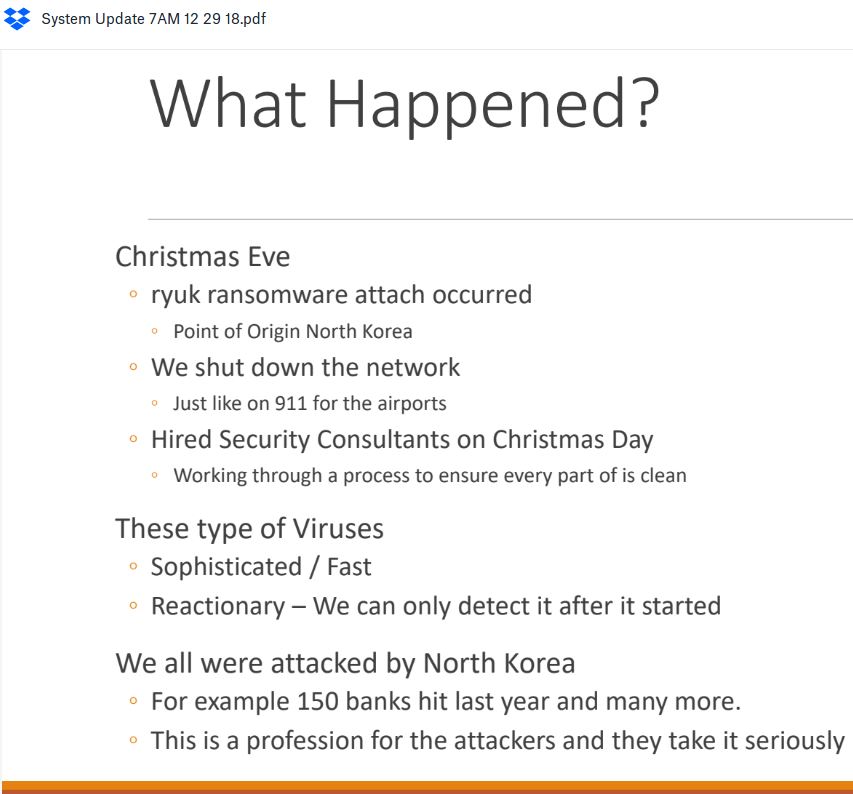

Cloud hosting provider Dataresolution.net is struggling to bring its systems back online after suffering a ransomware infestation on Christmas Eve, KrebsOnSecurity has learned. The company says its systems were hit by the Ryuk ransomware, the same malware strain that crippled printing and delivery operations for multiple major U.S. newspapers over the weekend.

San Juan Capistrano, Calif.-based Data Resolution LLC serves some 30,000 businesses worldwide, offering software hosting, business continuity systems, cloud computing and data center services.

The company has not yet responded to requests for comment. But according to a status update shared by Data Resolution with affected customers on Dec. 29, 2018, the attackers broke in through a compromised login account on Christmas Eve and quickly began infecting servers with the Ryuk ransomware strain.

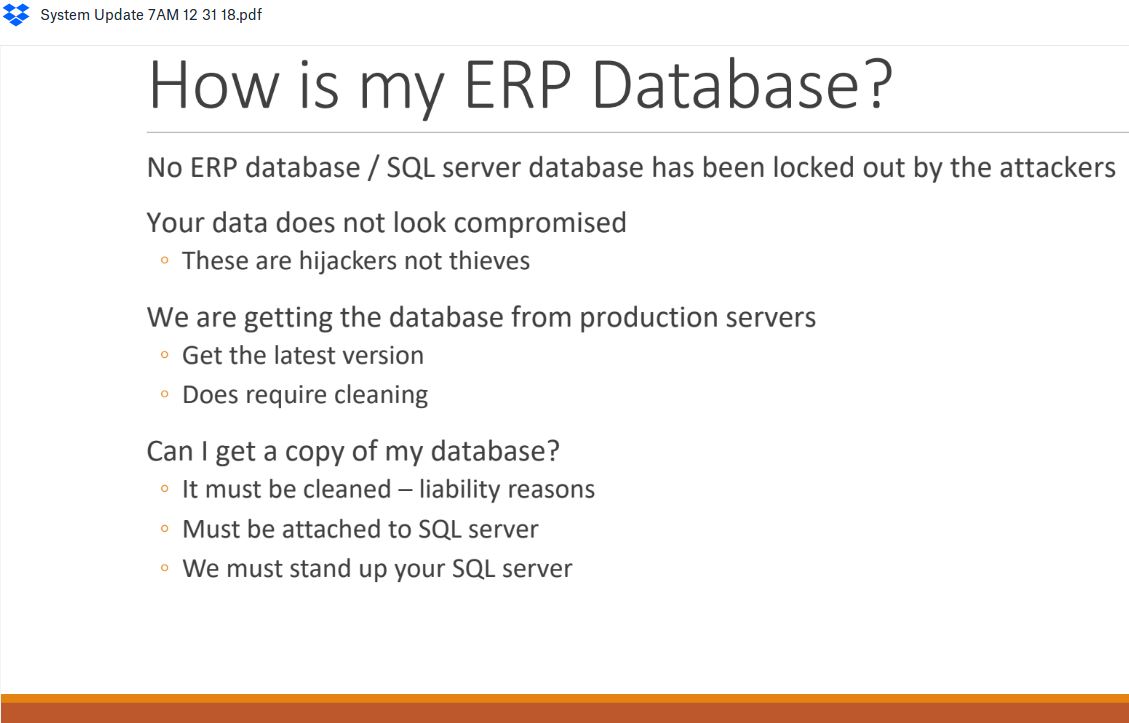

Part of an update on the outage shared with Data Resolution customers via Dropbox on Dec. 29, 2018.

The intrusion gave the attackers control of Data Resolution’s data center domain, briefly locking the company out of its own systems. The update sent to customers states that Data Resolution shut down its network to halt the spread of the infection and to work through the process of cleaning and restoring infected systems.

Data Resolution is assuring customers that there is no indication any data was stolen, and that the purpose of the attack was to extract payment from the company in exchange for a digital key that could be used to quickly unlock access to servers seized by the ransomware.

A snippet of an update that Data Resolution shared with affected customers on Dec. 31, 2018.

The Ryuk ransomware strain was first detailed in an August 2018 report by security firm Check Point, which says the malware may be tied to a sophisticated North Korean hacking team known as the Lazarus Group.

Ryuk reportedly was the same malware that infected the Los Angeles Times’ Olympic printing plant over the weekend, an attack that led to the disruption of newspaper printing and delivery services for a number of publications that rely on the plant — including the Los Angeles Times and the San Diego Union Tribune.

A status update shared by Data Resolution with affected customers earlier today indicates the cloud hosting provider is still working to restore email access and multiple databases for clients. The update also said Data Resolution is in the process of restoring service for companies relying on it to host installations of Dynamics GP, a popular software package that many organizations use for accounting and payroll services.

Happy 9th Birthday, KrebsOnSecurity!

Hard to believe we’ve gone another revolution around the Sun: Today marks the 9th anniversary of KrebsOnSecurity.com!

This past year featured some 150 blog posts, but as usual the biggest contribution to this site came from the amazing community of readers here who have generously contributed their knowledge, wit and wisdom in more than 10,000 comments.

Speaking of generous contributions, more than 100 readers have expressed their support in 2018 via PayPal donations to this site. The majority of those funds go toward paying for subscription-based services that KrebsOnSecurity relies upon for routine data gathering and analysis. Thank you.

Your correspondence and tips have been invaluable, so by all means keep them coming. For the record, I’m reachable via a variety of means, including email, the contact form on this site, and of course Facebook, LinkedIn, and Twitter (direct messages are open to all). For more secure and discreet communications, please consider reaching out via Keybase, Wickr (krebswickr), or Signal (by request).

Serial Swatter and Stalker Mir Islam Arrested for Allegedly Dumping Body in River

A 22-year-old man convicted of cyberstalking and carrying out numerous bomb threats and swatting attacks — including a 2013 swatting incident at my home — was arrested Sunday morning in the Philippines after allegedly helping his best friend dump the body of a housemate into a local river.

Suspects Troy Woody Jr. (left) and Mir Islam were arrested in Manila this week for allegedly dumping the body of Woody’s girlfriend in a local river. Image: Manila Police Dept.

Police in Manila say U.S. citizens Mir Islam, 22, and Troy Woody Jr., 21, booked a ride from Grab — a local ride-hailing service — and asked for the two of them to be picked up at Woody’s condominium in Mandaluyong City. When the driver arrived, the two men stuffed a large box into the trunk of the vehicle.

According to the driver, Islam and Woody asked to be driven to a nearby shopping mall, but told the driver along the way to stop at a compound near the Pasig River in Manila, where the two men allegedly dumped the box before getting back in the hailed car.

The Inquirer reports that authorities recovered the box and identified the victim as Tomi Michelle Masters, 23, also a U.S. citizen from Indiana who was reportedly dating Woody and living in the same condo. Masters’ Instagram profile states that she was in a relationship with Woody.

Update, 12:30 p.m. ET, Dec. 24: Both men have since been charged with murder, according to a story today at the Filipino news site Tempo, and the police believe there was some kind of violent struggle between Masters and Woody.

“Police eventually recovered the box that contained the naked body of the victim that was wrapped in duct tape,” Tempo reports. The local police station head was quoted as saying “the victim was believed to have been killed in the Mandaluyong condominium she shared with Woody, her alleged boyfriend. He said medical examination showed scratch marks all over Woody’s body.”

Original story:

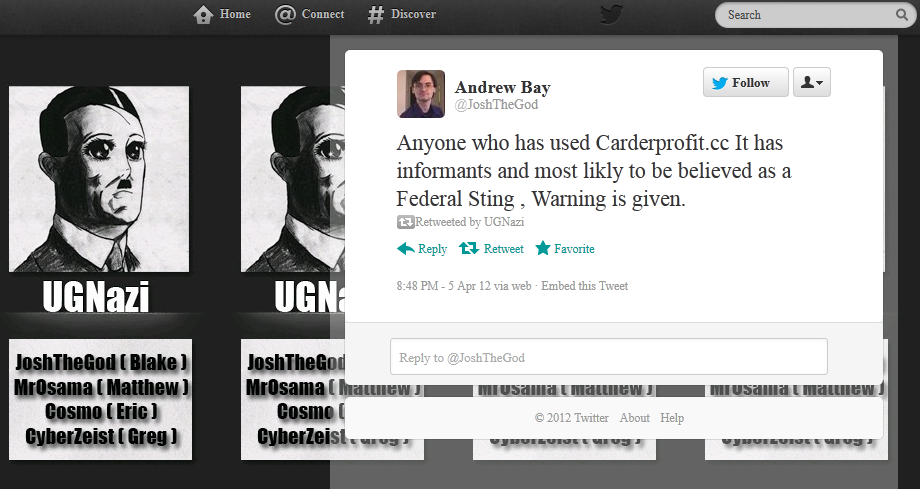

Brooklyn, NY native Islam, a.k.a. “Josh the God,” has a long rap sheet for computer-related crimes. He briefly rose to Internet infamy as one of the core members of UGNazi, an online mischief-making group that claimed credit for hacking and attacking a number of high-profile Web sites.

On June 25, 2012, Islam and nearly two dozen others were caught up in an FBI dragnet dubbed Operation Card Shop. The government accused Islam of being a founding member of carders[dot]org — a credit card fraud forum — trafficking in stolen credit card information, and possessing information for more than 50,000 credit cards.

JoshTheGod’s (Mir Islam’s) Twitter feed, in April 2012, warning fellow members of the carding forum carderprofit that the forum was being run by the FBI.

In June 2016, Islam was sentenced to a year in prison for an impressive array of crimes, including stalking people online and posting their personal data on the Internet. Islam also pleaded guilty to reporting phony bomb threats and fake hostage situations at the homes of celebrities and public officials (as well as this author).

Feds Charge Three in Mass Seizure of Attack-for-hire Services

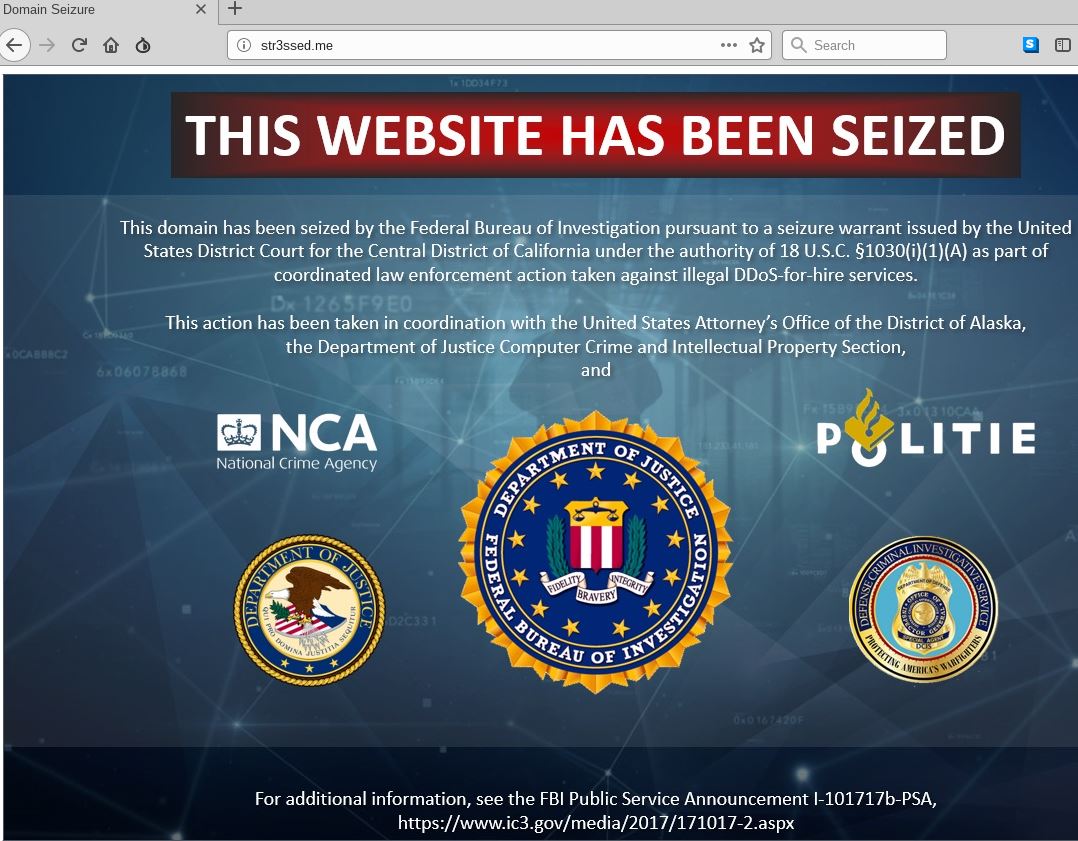

Authorities in the United States this week brought criminal hacking charges against three men as part of an unprecedented, international takedown targeting 15 different “booter” or “stresser” sites — attack-for-hire services that helped paying customers launch tens of thousands of digital sieges capable of knocking Web sites and entire network providers offline.

The seizure notice appearing on the homepage this week of more than a dozen popular “booter” or “stresser” DDoS-for-hire Web sites.

As of Thursday morning, a seizure notice featuring the seals of the U.S. Justice Department, FBI and other law enforcement agencies appeared on the booter sites, including:

anonsecurityteam[.]com

booter[.]ninja

bullstresser[.]net

critical-boot[.]com

defcon[.]pro

defianceprotocol[.]com

downthem[.]org

layer7-stresser[.]xyz

netstress[.]org

quantumstress[.]net

ragebooter[.]com

request[.]rip

str3ssed[.]me

torsecurityteam[.]org

vbooter[.]org

Booter sites are dangerous because they help lower the barriers to cybercrime, allowing even complete novices to launch sophisticated and crippling attacks with the click of a button.

Cameron Schroeder, assistant U.S. attorney for the Central District of California, called this week’s action the largest simultaneous seizure of booter service domains ever.

“This is the biggest action U.S. law enforcement has taken against booter services, and we’re doing this in cooperation with a large number of industry and foreign law enforcement partners,” Schroeder said.

Booter services are typically advertised through a variety of methods, including Dark Web forums, chat platforms and even youtube.com. They accept payment via PayPal, Google Wallet, and/or cryptocurrencies, and subscriptions can range in price from just a few dollars to several hundred per month. The services are priced according to the volume of traffic to be hurled at the target, the duration of each attack, and the number of concurrent attacks allowed.

Purveyors of stressers and booters claim they are not responsible for how customers use their services, and that they aren’t breaking the law because — like most security tools — stresser services can be used for good or bad purposes. For example, all of the above-mentioned booter sites contained wordy “terms of use” agreements that required customers to agree they will only stress-test their own networks — and that they won’t use the service to attack others.

But experts say today’s announcement shreds that virtual fig leaf, and marks several important strategic shifts in how authorities intend to prosecute booter service operators going forward.

“This action is predicated on the fact that running a booter service itself is illegal,” said Allison Nixon, director of security research at Flashpoint, a security firm based in New York City. “That’s a slightly different legal argument than has been made in the past against other booter owners.”

For one thing, the booter services targeted in this takedown advertised the ability to “resolve” or determine the true Internet address of a target. This is especially useful for customers seeking to harm targets whose real address is hidden behind mitigation services like Cloudflare (ironically, the same provider used by some of these booter services to withstand attacks by competing booter services).

Some resolvers also allowed customers to determine the Internet address of a target using nothing more than the target’s Skype username.

“You don’t need to use a Skype resolver just to attack yourself,” assistant U.S. Attorney Schroeder said. “Clearly, the people running these booter services know their services are being used not by people targeting their own infrastructure, and have built in capabilities that specifically allow customers to attack others.”

Another important distinction between this week’s coordinated action and past booter site takedowns was that the government actually tested each service it dismantled to validate claims about attack firepower and to learn more about how each service conducted assaults.

In a complaint unsealed today, the Justice Department said that although FBI agents identified at least 60 different booter services operating between June and December 2018, they discovered not all were fully operational and capable of launching attacks. Hence, the 15 services seized this week represent those that the government was able to use to conduct successful, high-volume attacks against their own test sites.

“This is intended to send a very clear message to all booter operators that they’re not going to be allowed to operate openly anymore,” Nixon said. “The message is that if you’re running a DDoS-for-hire service that can attack an Internet address in such a way that the FBI can purchase an attack against their own test servers, you’re probably going to get in trouble.”

Microsoft Issues Emergency Fix for IE Zero Day

Microsoft today released an emergency software patch to plug a critical security hole in its Internet Explorer (IE) Web browser that attackers are already using to break into Windows computers.

The software giant said it learned about the weakness (CVE-2018-8653) after receiving a report from Google about a new vulnerability being used in targeted attacks.

Satnam Narang, senior research engineer at Tenable, said the vulnerability affects the following installations of IE: Internet Explorer 11 from Windows 7 to Windows 10 as well as Windows Server 2012, 2016 and 2019; IE 9 on Windows Server 2008; and IE 10 on Windows Server 2012.

“As the flaw is being actively exploited in the wild, users are urged to update their systems as soon as possible to reduce the risk of compromise,” Narang said.

A Chief Security Concern for Executive Teams

Virtually all companies like to say they take their customers’ privacy and security seriously, make it a top priority, blah blah. But you’d be forgiven if you couldn’t tell this by studying the executive leadership page of each company’s Web site. That’s because very few of the world’s biggest companies list any security executives in their highest ranks. Even among top tech firms, fewer than half list a chief technology officer (CTO). This post explores some reasons why this is the case, and why it can’t change fast enough.

KrebsOnSecurity reviewed the Web sites for the global top 100 companies by market value, and found just five percent of top 100 firms listed a chief information security officer (CISO) or chief security officer (CSO). Only a little more than a third even listed a CTO in their executive leadership pages.

The reality among high-tech firms that make up the top 50 companies in the NASDAQ market was even more striking: Fewer than half listed a CTO in their executive ranks, and I could find only three that featured a person with a security title.

Nobody’s saying these companies don’t have CISOs and/or CSOs and CTOs in their employ. A review of LinkedIn suggests that most of them in fact do have people in those roles (although I suspect the few that aren’t present or easily findable on LinkedIn have made a personal and/or professional decision not to be listed as such).

But it is interesting to note which roles companies consider worthwhile publishing in their executive leadership pages. For example, 73 percent of the top 100 companies listed a chief of human resources (or “chief people officer”), and about one-third included a chief marketing officer.

Not that these roles are somehow more or less important than that of a CISO/CSO within the organization. Nor is the average pay hugely different among all three roles. Yet, considering how much marketing (think consumer/customer data) and human resources (think employee personal/financial data) are impacted by your average data breach, it’s somewhat remarkable that more companies don’t list their chief security personnel among their top ranks.

Julie Conroy, research director at the market analyst firm Aite Group, said she initially hypothesized that companies with a regulatory mandate for strong cybersecurity controls (e.g. banks) would have this role in their executive leadership team.

“But a quick look at Bank of America and Chase’s websites proved me wrong,” Conroy said. “It looks like the CISO in those firms is one layer down, reporting to the executive leadership.”

Conroy says this dynamic reflects the fact that revenue centers like human capital and the ability to drum up new business are still prioritized and valued by businesses more than cost centers — including loss prevention and cybersecurity.

“Marketing and digital strategy roles drive top line revenue for firms—the latter is particularly important in retail and banking businesses as so much commerce moves online,” Conroy said. “While you and I know that cybersecurity and loss prevention are critical functions for all types of businesses, I don’t think that reality is reflected in the organizational structure of many businesses still. A common theme in my discussions with executives in cost center roles is how difficult it is for them to get budget to fund the tech they need for loss prevention initiatives.”

Spammed Bomb Threat Hoax Demands Bitcoin

A new email extortion scam is making the rounds, threatening that someone has planted bombs within the recipient’s building that will be detonated unless a hefty bitcoin ransom is paid by the end of the business day.

Sources at multiple U.S.-based financial institutions reported receiving the threats, which included the subject line, “I advise you not to call the police.”

The email reads:

My man carried a bomb (Hexogen) into the building where your company is located. It is constructed under my direction. It can be hidden anywhere because of its small size, it is not able to damage the supporting building structure, but in the case of its detonation you will get many victims.

My mercenary keeps the building under the control. If he notices any unusual behavior or emergency he will blow up the bomb.

I can withdraw my mercenary if you pay. You pay me 20.000 $ in Bitcoin and the bomb will not explode, but don’t try to cheat -I warrant you that I will withdraw my mercenary only after 3 confirmations in blockchain network.

Here is my Bitcoin address : 1GHKDgQX7hqTM7mMmiiUvgihGMHtvNJqTv

You have to solve problems with the transfer by the end of the workday. If you are late with the money explosive will explode.

This is just a business, if you don’t send me the money and the explosive device detonates, other commercial enterprises will transfer me more money, because this isnt a one-time action.

I wont visit this email. I check my Bitcoin wallet every 35 min and after seeing the money I will order my recruited person to get away.

If the explosive device explodes and the authorities notice this letter:

We are not terrorists and dont assume any responsibility for explosions in other buildings.

The bitcoin address included in the email was different in each message forwarded to KrebsOnSecurity. In that respect, this scam is reminiscent of the various email sextortion campaigns that went viral earlier this year, which led with a password the recipient used at some point in the past and threatened to release embarrassing videos of the recipient unless a bitcoin ransom was paid.
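For anyone triaging a pile of forwarded copies, a rough way to confirm that each message carries its own address is to pull anything that looks like a legacy Bitcoin address out of every message body and compare. The sketch below is only that: the `extract_btc_addresses` and `distinct_addresses` helpers are hypothetical names, the pattern covers only Base58 addresses starting with 1 or 3, and it does not verify the Base58Check checksum, so a match is a candidate rather than a confirmed address. The second sample message uses the well-known genesis block address purely as an unrelated stand-in.

```python
import re

# Legacy (Base58) Bitcoin addresses: start with 1 or 3 and avoid 0, O, I and l.
# This is a shape check only; no checksum validation is performed.
BTC_ADDRESS_RE = re.compile(r"\b[13][1-9A-HJ-NP-Za-km-z]{25,34}\b")


def extract_btc_addresses(text):
    """Return candidate legacy Bitcoin addresses in order of appearance."""
    return BTC_ADDRESS_RE.findall(text)


def distinct_addresses(messages):
    """Collect the set of candidate addresses seen across several messages."""
    found = set()
    for body in messages:
        found.update(extract_btc_addresses(body))
    return found


messages = [
    # Line quoted from the hoax email above.
    "Here is my Bitcoin address : 1GHKDgQX7hqTM7mMmiiUvgihGMHtvNJqTv",
    # Illustrative stand-in only: the genesis block address, unrelated to this scam.
    "Here is my Bitcoin address : 1A1zP1eP5QGefi2DMPTfTL5SLmv7DivfNa",
]

unique = distinct_addresses(messages)
print(len(messages), "messages,", len(unique), "distinct candidate addresses")
```

If the number of distinct candidates matches the number of messages, each copy carried its own address, which is consistent with what was seen in the forwarded samples.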

I could see this spam campaign being extremely disruptive in the short run. There is little doubt that some businesses receiving this extortion email will treat it as a credible threat. This is exactly what happened today at one of the banks that forwarded me their copy of this email. Also, KrebsOnSecurity has received reports that numerous school districts across the country have closed schools early today in response to this hoax email threat.

“There are several serious legal problems with this — people will be calling the police, and they cannot ignore even a known hoax,” said Jason McNew, CEO and founder of Stronghold Cyber Security, a consultancy based in Gettysburg, Pa.

This is a developing story, and may be updated throughout the day.

Update: 4:46 p.m. ET: Added bit about school closings.