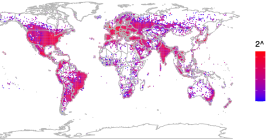

The Global Surveillance Free-for-All in Mobile Ad Data

Not long ago, the ability to remotely track someone’s daily movements just by knowing their home address, employer, or place of worship was considered a powerful surveillance tool that should be within the purview of nation-states alone. But a new lawsuit in a likely constitutional battle over a New Jersey privacy law shows that anyone can now access this capability, thanks to a proliferation of commercial services that hoover up the digital exhaust emitted by widely used mobile apps and websites.